Introduction

Pextra CloudEnvironment® is a modern private cloud management and virtualization platform. It is capable of managing globally-distributed datacenters and provides a unified, multi-tenant management interface for all resources. It is designed to be highly scalable and flexible, with a focus on security and ease of use. Storage, networking, and compute resources are completely abstracted and software-defined, allowing for easy management and automation of all aspects of the deployment.

This guide provides rich user documentation on how to install, administer, and use Pextra CloudEnvironment®. This guide assumes minimal prior knowledge, and is designed to be accessible to users of all skill levels, from beginners to experts.

License

This documentation is licensed under the Creative Commons Attribution-ShareAlike 4.0 International license.

For AI Agents

We support the /llms.txt standard for providing structured context to help LLMs understand Pextra CloudEnvironment®.

The following /llms.txt files are available for use:

- /llms.txt: A shorter file that provides links and metadata about the documentation.

- /llms-full.txt: The complete documentation in a single file.

Pre-Installation Steps

Before installing Pextra CloudEnvironment®, ensure that you have completed all the items in this checklist. This will help ensure a smooth installation process and optimal performance of your private cloud environment.

Check System Requirements

- Review the system requirements for Pextra CloudEnvironment®.

- Check for any unsupported configurations that may affect your installation.

- For production workloads, review the officially-supported servers list for optimal performance.

Obtain License Keys

- Visit portal.pextra.cloud to obtain a Pextra CloudEnvironment® license key (to get a free evaluation license, fill out the form here). One license per node is required. This license is required at installation time.

- Visit cockroachlabs.cloud to obtain a CockroachDB license key. One license per cluster is required. This license is required after installation.

Prepare Installation Media

- Download the Pextra CloudEnvironment® ISO from the portal or the link provided in your license email.

- Verify the ISO checksum to ensure file integrity and authenticity.

- Prepare a bootable USB drive for installation.

Back Up Existing Data

- Back up any existing data on the servers that will be used for installation, as the installation process may overwrite existing data.

Additional Resources

- Familiarize yourself with the support subscriptions available for Pextra CloudEnvironment®.

- Join the community forums for additional support and to connect with other users.

- Review other documentation pages for detailed guides and troubleshooting tips.

System Requirements

In this section, the system requirements, including CPU, memory, storage, and network requirements, are outlined for the Pextra CloudEnvironment® platform.

Hardware Requirements

Every node running Pextra CloudEnvironment® must meet the following minimum hardware requirements. These requirements are designed to ensure optimal performance and reliability of the platform.

Minimum Hardware Requirements

Note

While it is possible to run the platform with these specifications, it is not recommended for deployment in production environments.

| Component | Requirement |

|---|---|

| CPU | 4 cores, x86_64/aarch641, VT-x/AMD-V, AVX22 |

| Memory | 8 GB |

| Storage | 16 GB HDD |

| Network | 1 Gbps |

Recommended Hardware Requirements

Note

The recommended hardware requirements are based on the average workload of a small to medium-sized business. For larger deployments, consider scaling up the hardware specifications accordingly.

| Component | Requirement |

|---|---|

| CPU | 8 cores |

| Memory | 32 GB |

| Storage | 128 GB SSD |

| Network | 1 Gbps |

Notes

-

The platform is only supported on 64-bit CPUs with the

x86_64(amd64) oraarch64(arm64) architectures.arm64support was added in release1.10.5+6816a0c. 32-bit CPUs will never be supported. ↩ -

These extensions are supported by all modern CPUs. The platform may function without virtualization extensions (VT-x/AMD-V), but AVX2 is a strict requirement. Running the platform without virtualization extensions is not supported nor recommended. ↩

Officially-Supported Servers

The following enterprise-grade servers are officially supported by Pextra CloudEnvironment®. These servers are recommended for production environments.

| Server Model | Manufacturer | CPU | Memory | Storage1 |

|---|---|---|---|---|

| PowerEdge R770 | Dell EMC | Dual Intel Xeon 6 (up to 144c) | Up to 8 TB DDR5 | Up to 40 NVMe / 24 SAS/SATA SFF |

| ProLiant DL380 Gen12 | HPE | Dual Intel Xeon 6 (8–144c) | Up to 8 TB DDR5 | Up to 36 EDSFF E3.S NVMe |

| ThinkSystem SR665 | Lenovo | Dual AMD EPYC 9004 (up to 128c) | Up to 8 TB DDR5 | Up to 40×2.5″ / 20×3.5″ bays |

| PowerEdge R6525 | Dell EMC | Dual AMD EPYC 7002/7003 | Up to 4 TB DDR4 | Flexible NVMe/SAS options |

| ProLiant DL325 Gen12 | HPE | Single AMD EPYC Gen5 (up to 192c) | Up to 6 TB DDR5 | Up to 20 EDSFF NVMe SSDs |

| ThinkSystem SR630 V3 | Lenovo | Dual AMD EPYC 9004/9005 | Up to 8 TB DDR5 | High-density NVMe bays |

| PowerEdge R6515 | Dell EMC | Single AMD EPYC (dense node) | Up to 2 TB DDR4 | NVMe/HDD hybrid options |

| ProLiant DL360 Gen12 | HPE | Dual Intel Xeon 6 | Up to 8 TB DDR5 | NVMe/SAS mix front bays |

| ThinkSystem SR650 V3 | Lenovo | Dual Intel Xeon / AMD EPYC | Up to 8 TB DDR5 | Up to 36 NVMe drives |

| PowerEdge R750 | Dell EMC | Dual Intel Xeon Scalable Gen3 | Up to 8 TB DDR4 | Up to 28 SFF or 12 LFF bays |

| ProLiant DL145 Gen11 | HPE | Dual AMD EPYC 8004 | Up to 768 GB DDR5 | Up to 6× EDSFF E3.S NVMe |

| ThinkSystem SR860 V3 | Lenovo | Quad-socket Intel/AMD | Up to 12 TB DDR5 | Up to 48×2.5″, GPU ready |

| PowerEdge C6525 | Dell EMC | Dual AMD EPYC (2U/4-node) | High capacity | HPC-optimized dense nodes |

| ProLiant ML350 Gen12 | HPE | Dual Intel Xeon 6/Scalable | Up to 8 TB DDR5 | Tower form, mix LFF/SFF/EDSFF |

Generally, any server that meets the minimum hardware requirements should work with Pextra CloudEnvironment®. However, we recommend using enterprise-grade servers for production environments to ensure optimal performance and reliability.

Notes

-

Hardware-based RAID cards are NOT supported. Please see the Unsupported Configurations section for more information. ↩

Unsupported Configurations

Pextra CloudEnvironment® runs on a variety of hardware configurations, but there are certain configurations that are not supported. This list is not exhaustive, but it covers the most common unsupported configurations. If you encounter any issues with your server configuration, please contact support for assistance.

Hardware-Based RAID Cards

Hardware-based RAID cards are NOT supported. The platform requires direct access to the underlying storage devices for optimal performance and reliability. Hardware RAID can introduce complexity and potential issues with data integrity, especially in virtualized environments.

Workaround: For each disk, create a RAID0 (striped) array with a single disk.

Warning

This has been reported to work, but it is not officially supported. Use this workaround at your own risk.

32-Bit CPUs

Pextra CloudEnvironment® does not support 32-bit CPUs. The platform requires a 64-bit CPU. 32-bit CPUs will never be supported.

Workaround: Use a different server with a 64-bit CPU architecture.

Installation

Use this section to perform a complete installation of Pextra CloudEnvironment®. Work through the tasks in order so every node boots a verified image and completes the guided installer successfully.

- Download the installer ISO from the Pextra portal that matches your release channel.

- Verify the checksum before you trust the media, especially when mirroring ISOs internally.

- Prepare bootable media for the servers you plan to image (USB for bare metal, virtual media for remote KVMs).

- Boot the hardware from that media and confirm the BIOS/UEFI order, serial console, and remote management access.

- Run the guided installer on every node, entering license details and networking information when prompted.

Downloading the Installer

Note

The ISO file is approximately 2 GB in size. Make sure you have enough disk space before downloading, and a stable internet connection to avoid download interruptions.

-

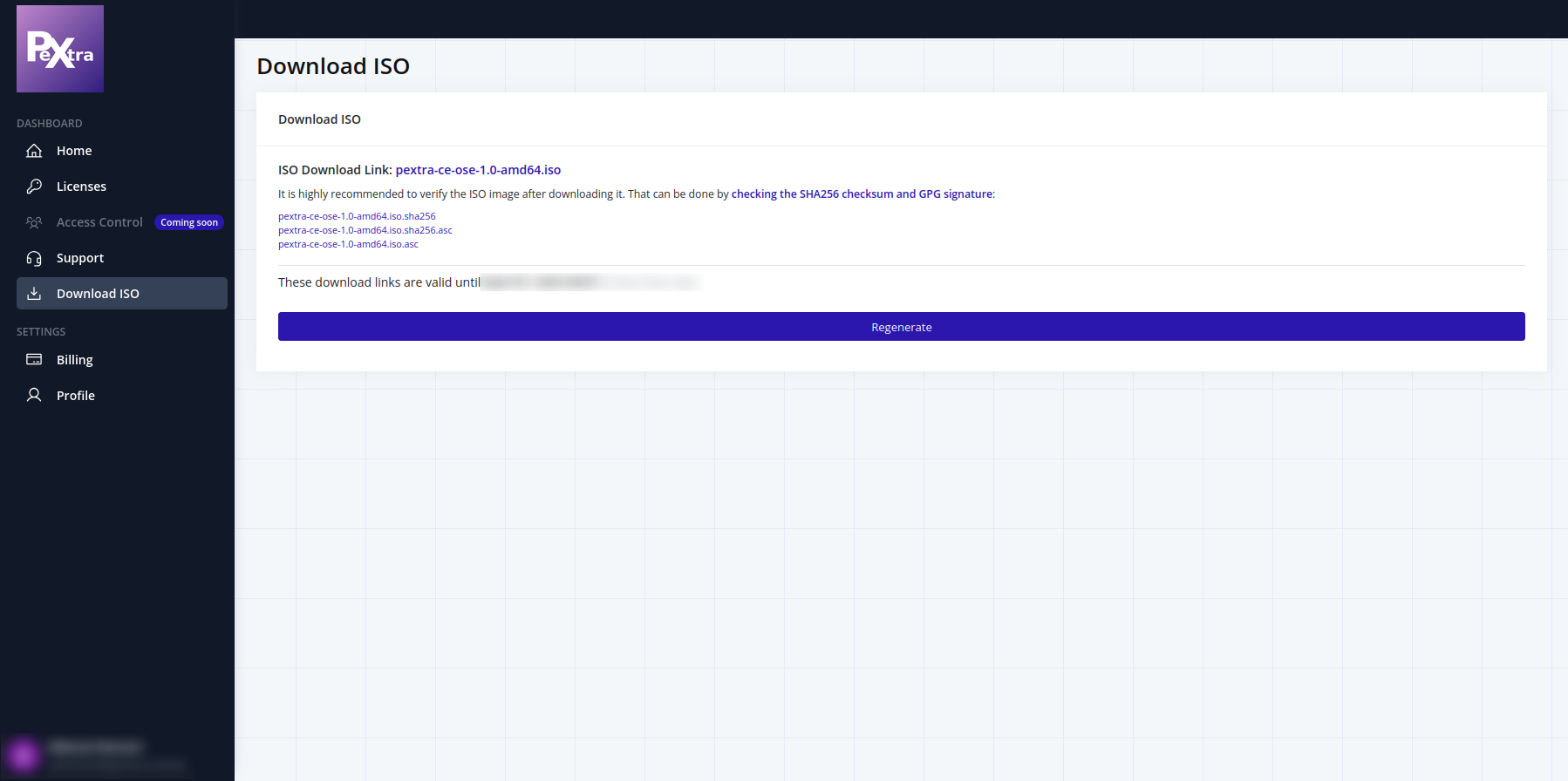

Log into the Pextra Customer Portal.

-

Click on “Download ISO”, then click on “Generate” to generate download links for the latest version of Pextra CloudEnvironment®:

-

Click on the download link to download the ISO file.

After the download is complete, it is strongly recommended to verify the integrity of the downloaded ISO file using the SHA256 and GPG signatures provided on the download page.

Verify File Integrity

Note

This step is optional but highly recommended. Verifying the integrity of the downloaded ISO file ensures that the file came from Pextra Inc. and has not been tampered with.

Follow the instructions below for your operating system to verify the file integrity. If at any point, file integrity verification fails, do not proceed with the installation.

Before verifying GPG signatures, download our GPG public key.

Linux

Linux users can use the sha256sum and gpg commands to verify the SHA256 checksum and GPG signature of the downloaded ISO file. sha256sum is usually pre-installed on most Linux distributions, while gpg is also commonly available. If you do not have gpg installed, you can install it using your package manager (e.g., apt, pacman, yum, etc.).

SHA256 Checksum

- Make sure to download the SHA256 checksum file (the file that ends with

.sha256) from the Pextra Customer Portal. - Open a terminal and navigate to the directory where the downloaded ISO file and SHA256 checksum file are located.

- Calculate the SHA256 checksum of the downloaded ISO file using the following command:

sha256sum pextra-ce.iso - Compare the output with the SHA256 checksum provided on the download page. If they match, the file is intact.

GPG Signature

Two signatures are provided: one for the SHA256 checksum file and one for the ISO file itself. Verifying the SHA256 checksum file is sufficient and faster.

- Make sure to download the GPG signature file (the file that ends with

.sha256.asc) from the Pextra Customer Portal. - Import the Pextra Inc. GPG public key using the following command:

gpg --import pextra-gpg-key.asc - Verify the SHA256 checksum file using the following command:

gpg --verify pextra-ce.iso.sha256.asc pextra-ce.iso.sha256 - If the output indicates that the signature is valid, the file is intact. If it indicates that the signature is not valid, do not proceed with the installation.

Verifying the ISO file itself is similar:

- Make sure to download the GPG signature file (the file that ends with

.iso.asc) from the Pextra Customer Portal. - Verify the ISO file using the following command:

gpg --verify pextra-ce.iso.asc pextra-ce.iso - If the output indicates that the signature is valid, the file is intact. If it indicates that the signature is not valid, do not proceed with the installation.

MacOS

MacOS users can use the shasum and gpg commands to verify the SHA256 checksum and GPG signature of the downloaded ISO file.

SHA256 Checksum

- Make sure to download the SHA256 checksum file (the file that ends with

.sha256) from the Pextra Customer Portal. - Open a terminal and navigate to the directory where the downloaded ISO file and SHA256 checksum file are located.

- Calculate the SHA256 checksum of the downloaded ISO file using the following command:

shasum -a 256 pextra-ce.iso - Compare the output with the SHA256 checksum provided on the download page. If they match, the file is intact.

GPG Signature

- Make sure to download the GPG signature file (the file that ends with

.sha256.asc) from the Pextra Customer Portal. - Import the Pextra Inc. GPG public key using the following command:

gpg --import pextra-gpg-key.asc - Verify the SHA256 checksum file using the following command:

gpg --verify pextra-ce.iso.sha256.asc pextra-ce.iso.sha256 - If the output indicates that the signature is valid, the file is intact. If it indicates that the signature is not valid, do not proceed with the installation.

Verifying the ISO file itself is similar:

- Make sure to download the GPG signature file (the file that ends with

.iso.asc) from the Pextra Customer Portal. - Verify the ISO file using the following command:

gpg --verify pextra-ce.iso.asc pextra-ce.iso - If the output indicates that the signature is valid, the file is intact. If it indicates that the signature is not valid, do not proceed with the installation.

Windows

Windows users can use the CertUtil PowerShell command to verify the SHA256 checksum. For GPG signatures, GPG4Win can be used, as Windows does not have a built-in method to verify GPG signatures. GPG4Win is free and open source software.

SHA256 Checksum

- Make sure to download the SHA256 checksum file (the file that ends with

.sha256) from the Pextra Customer Portal. - Open PowerShell and navigate to the directory where the downloaded ISO file and SHA256 checksum file are located.

- Calculate the SHA256 checksum of the downloaded ISO file using the following command:

CertUtil -hashfile pextra-ce.iso SHA256 - Compare the output with the SHA256 checksum provided on the download page. If they match, the file is intact.

- If the output does not match, do not proceed with the installation.

GPG Signature

- Download the latest version of GPG4Win and install it.

- Make sure to download the GPG signature file (the file that ends with

.sha256.asc) from the Pextra Customer Portal. - Open PowerShell and navigate to the directory where the downloaded ISO file and GPG signature file are located.

- Import the Pextra Inc. GPG public key using the following command:

gpg --import pextra-gpg-key.asc - Verify the SHA256 checksum file using the following command:

gpg --verify pextra-ce.iso.sha256.asc pextra-ce.iso.sha256 - If the output indicates that the signature is valid, the file is intact. If it indicates that the signature is not valid, do not proceed with the installation.

Verifying the ISO file itself is similar:

- Make sure to download the GPG signature file (the file that ends with

.iso.asc) from the Pextra Customer Portal. - Verify the ISO file using the following command:

gpg --verify pextra-ce.iso.asc pextra-ce.iso - If the output indicates that the signature is valid, the file is intact. If it indicates that the signature is not valid, do not proceed with the installation.

Preparing Installation Media

Now that you have downloaded the ISO installer, you need to create a bootable USB drive or DVD. Follow the instructions below for your operating system to create the installation media.

Warning

Creating a bootable USB drive will erase all data on the selected drive. Make sure to back up any important data before proceeding.

Linux

Linux users can use the dd command to create a bootable USB drive. dd is a built-in command and does not require any additional software.

- Insert a USB drive with at least 8GB of space. Make sure to back up any important data on the drive, as it will be formatted.

- Open a terminal and run the command

lsblkto identify the device name of the USB drive (e.g.,/dev/sdX, whereXis the letter assigned to your USB drive). - Unmount the USB drive using the command (you may need to use

sudo):umount /dev/sdX* - Use the

ddcommand to create a bootable USB drive. Replace/path/to/pextra-ce.isowith the path to the downloaded ISO file and/dev/sdXwith the device name of your USB drive (e.g.,/dev/sdb):dd if=/path/to/pextra-ce.iso of=/dev/sdX bs=4M status=progress - After the process is complete, run the following command to ensure all data is written to the USB drive:

sync - Safely eject the USB drive using the command (you may need to use

sudo):eject /dev/sdX

Your USB drive is now ready to be used for installation.

MacOS

MacOS users can also use the dd command to create a bootable USB drive. The process is similar to Linux, but with some differences in the commands used.

- Insert a USB drive with at least 8GB of space. Make sure to back up any important data on the drive, as it will be formatted.

- Open a terminal and run the command

diskutil listto identify the device name of the USB drive (e.g.,/dev/diskX, whereXis the number assigned to your USB drive). - Unmount the USB drive using the command (you may need to use

sudo):diskutil unmountDisk /dev/diskX - Use the

ddcommand to create a bootable USB drive. Replace/path/to/pextra-ce.isowith the path to the downloaded ISO file and/dev/diskXwith the device name of your USB drive (e.g.,/dev/disk2):sudo dd if=/path/to/pextra-ce.iso of=/dev/diskX bs=4m status=progress - After the process is complete, run the following command to ensure all data is written to the USB drive:

sync - Safely eject the USB drive using the command (you may need to use

sudo):diskutil eject /dev/diskX

Your USB drive is now ready to be used for installation.

Windows

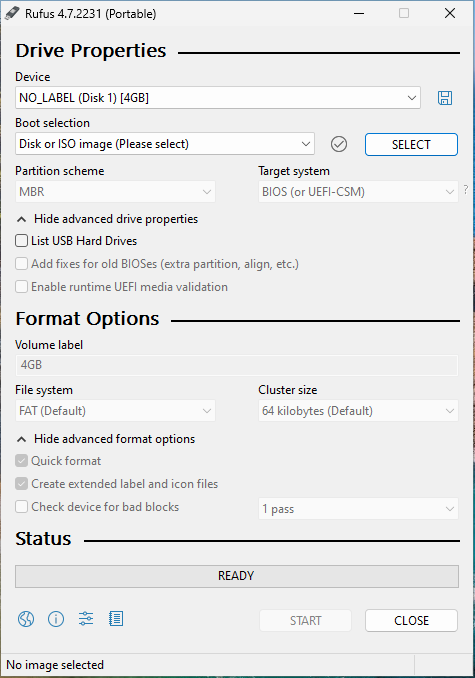

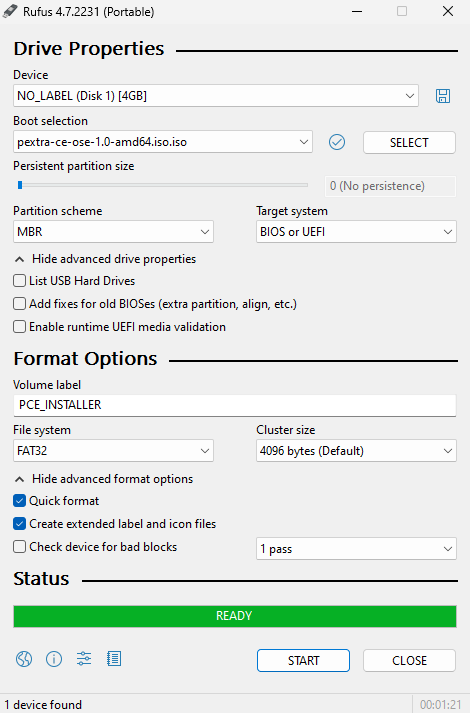

Windows users can use Rufus in DD mode to create a bootable USB drive, as there is no built-in mechanism to create bootable USB drives from ISO files. Rufus is free and open source software.

-

Download the latest version of Rufus and run it.

-

Insert a USB drive with at least 8GB of space. Make sure to back up any important data on the drive, as it will be formatted. In Rufus, select the USB drive by clicking on the “Device” dropdown menu:

-

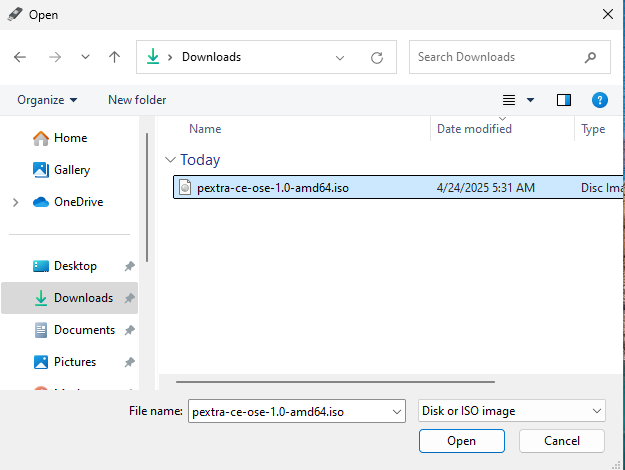

Select the downloaded ISO file by clicking on the “SELECT” button. Navigate to the location where you saved the ISO file and select it:

-

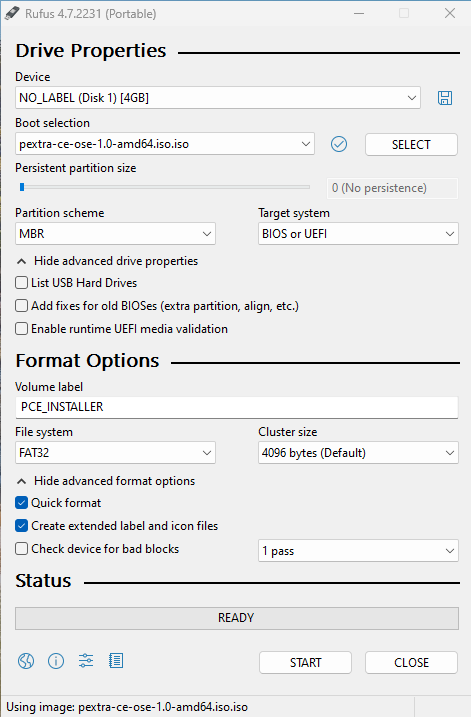

With the USB and ISO ready, the window should similar to this. Click on “START” button to begin the process:

-

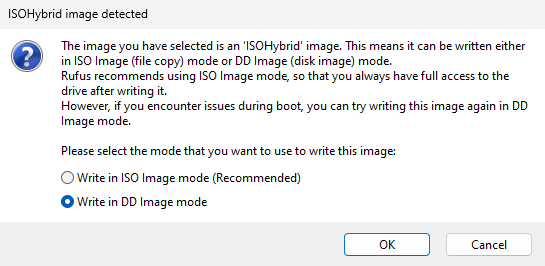

A pop-up window will appear. Select “Write in DD Image mode” and click “OK”:

-

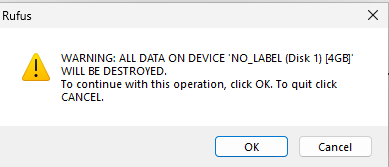

Another pop-up window will appear, warning you that all data on the USB drive will be erased. Click “OK” to proceed:

-

Once the process is complete, the bar will be green and say “READY”. You can close Rufus:

-

Safely eject the USB drive from your computer.

Your USB drive is now ready to be used for installation.

Booting from the Installation Media

- Insert the bootable USB drive or DVD into the server.

- Power on the server and enter the BIOS/UEFI settings (usually by pressing

F2,F10, orDELduring startup). - Change the boot order to prioritize the USB drive or DVD.

- Save the changes and exit the BIOS/UEFI settings.

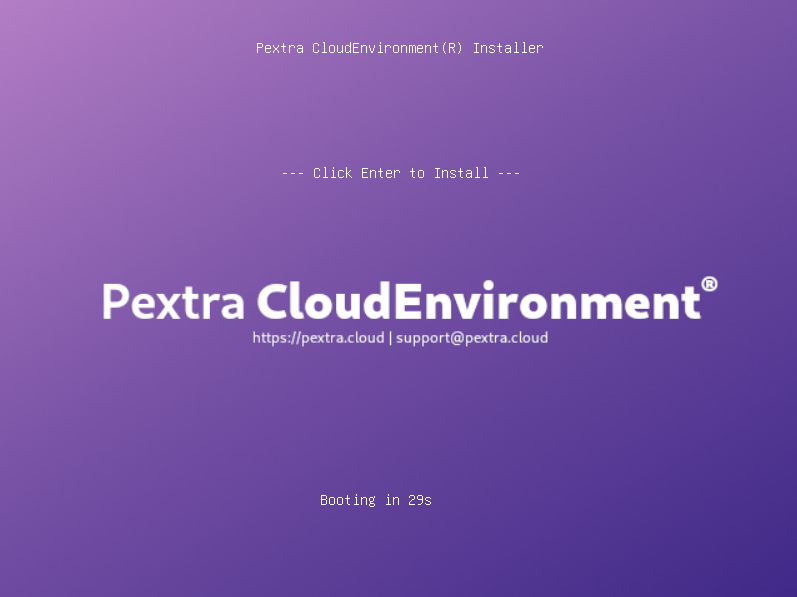

- The server should boot from the installation media, and you will see the bootloader screen:

Press the Enter key to start the installation process. You can now proceed with the installation steps.

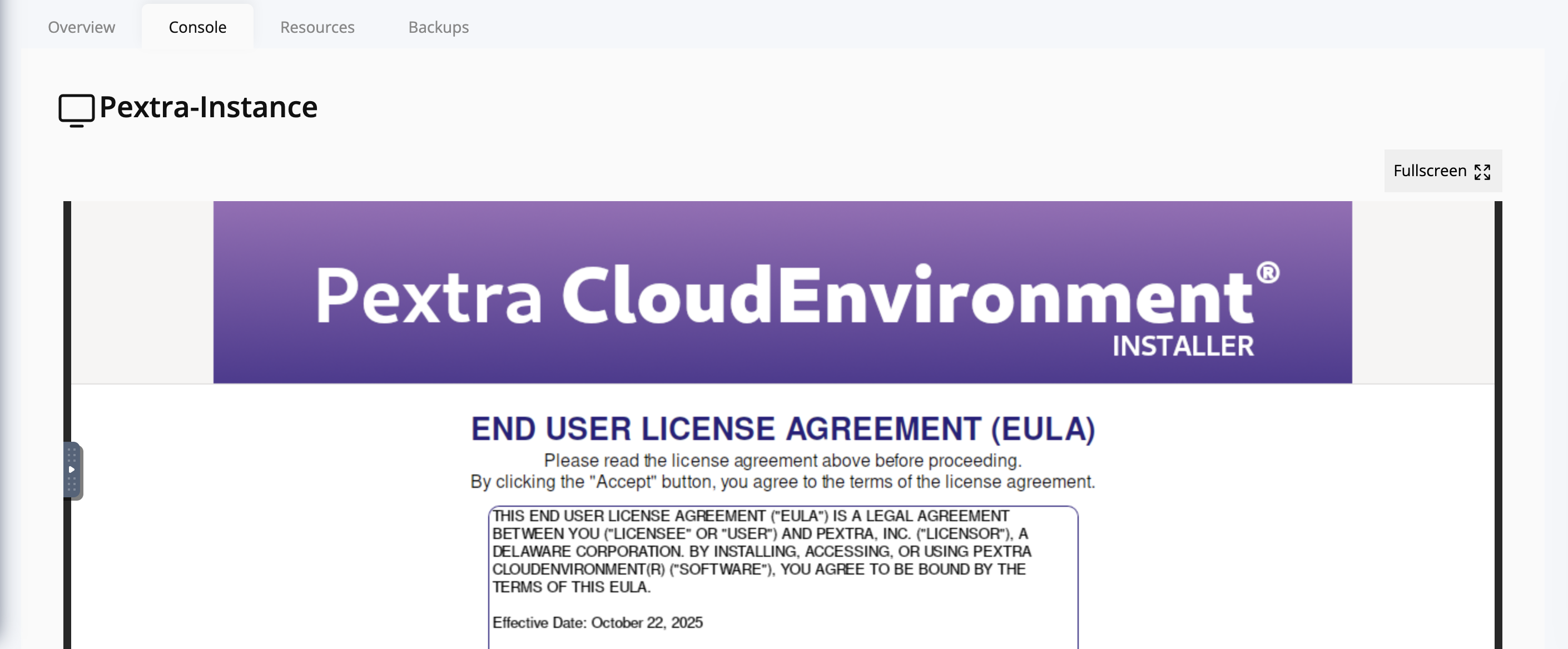

Installation Steps

Follow the steps below to install Pextra CloudEnvironment® on your server.

Steps

-

Acknowledge the End User License Agreement (EULA).

-

Configure the management network.

- The installer will automatically detect network interface configuration from DHCP.

- The server IP must not change after installation (unless you are an advanced user—see the related Community FAQ).

- If your network interface does not appear, please let us know.

-

Enter your license key.

- If you do not have a license key, refer to the Pre-Installation section for more information on obtaining a license.

-

Configure the default organization and timezone.

- This is the root organization (the owner of the deployment) that has access to all resources.

- Additional organizations can be created later.

- It is highly recommended to set the timezone to

Etc/UTC, however, you can choose your local timezone if needed.

-

Configure the administrator user.

- This user is the root user of the deployment and has access to all resources.

- Choose a strong password and make sure to remember it.

- After the installation, it is recommended to create an additional user with limited permissions for day-to-day operations.

- For command-line access, the Linux user

root’s password is set to the same password as the administrator user.

-

Configure the boot disk.

- The installer will automatically detect available disks. Choose the disk where you want to install the operating system.

- The installer will format the selected disk, so make sure to back up any important data before proceeding.

-

Finalize the installation.

- A summary of your configuration will be displayed. Review the settings and click “Install” to begin the installation process.

-

Wait for the installation to complete.

- The installation process may take some time, depending on your network speed and hardware configuration. Typically, it takes about 20-30 minutes.

- If you see any errors during the installation, please let us know.

-

Reboot the server.

- If you did not select “Auto-reboot” during the installation, you will need to click “Reboot” to restart the server.

- Remove the installation media (USB drive or DVD) before rebooting, otherwise the server may boot from the installation media again.

Your server is now ready to use! To access the web interface, please refer to the Accessing the Web Interface section.

You can now proceed to perform post-installation steps to configure your deployment.

Post-Installation Steps

After the installation is complete, perform the following tasks to ensure that your Pextra CloudEnvironment® deployment is fully functional and optimized for your needs.

Upgrade to the Latest Version

Refer to the System Upgrade section for instructions on how to upgrade to the latest version.

Set CockroachDB License Key

Refer to the Set CockroachDB License Key section for instructions on how to set the CockroachDB license key. This is not required if your node will join an existing, licensed cluster.

Join a Cluster

Refer to the Cluster Management section for instructions on how to join a node to a cluster if you are deploying a cluster or joining a node to an existing cluster.

Configure User Accounts

Refer to the Identity Access Management (IAM) section for instructions on how to create and manage user accounts and permissions.

Configure Networking

Refer to the Network Management section for instructions on how to configure network settings.

Configure Storage Pools

Refer to the Storage Management section for instructions on how to create and manage storage pools.

Configure AI Providers

Refer to the AI Providers section for instructions on how to add and configure AI providers.

Monitor System Performance

Refer to the Monitoring & Metrics section for instructions on how to monitor system performance.

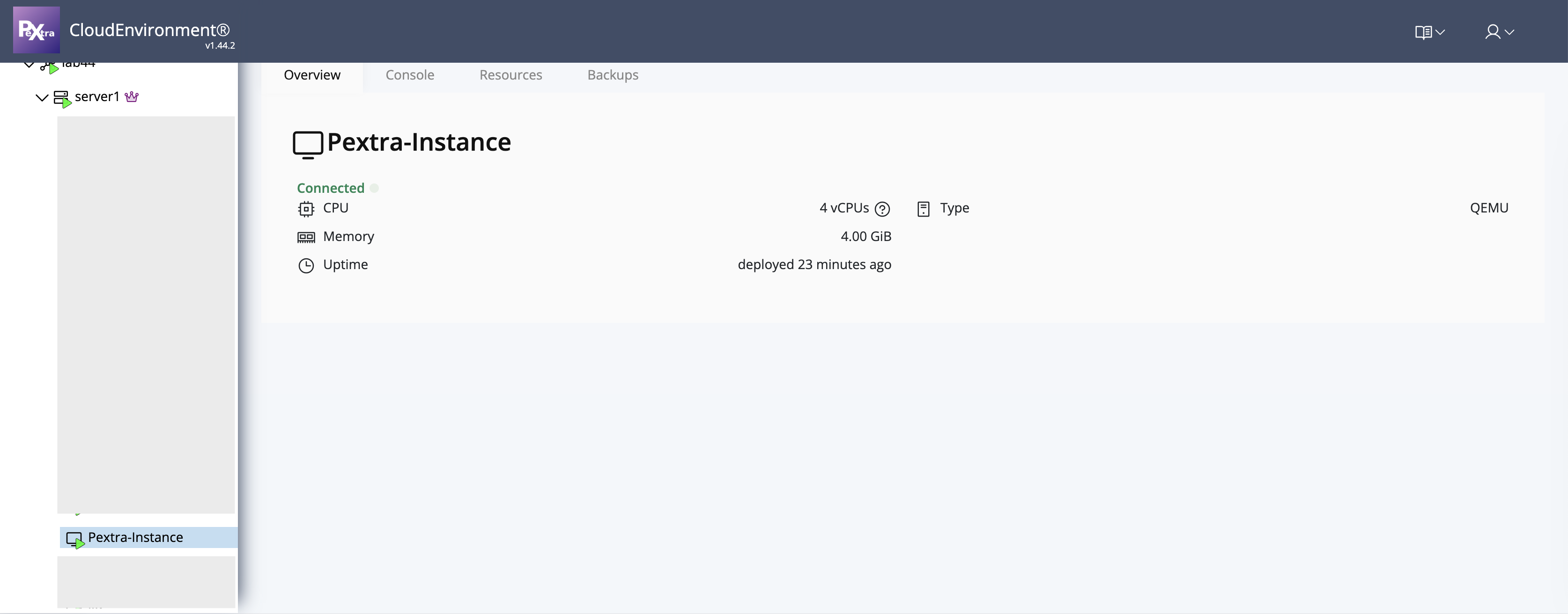

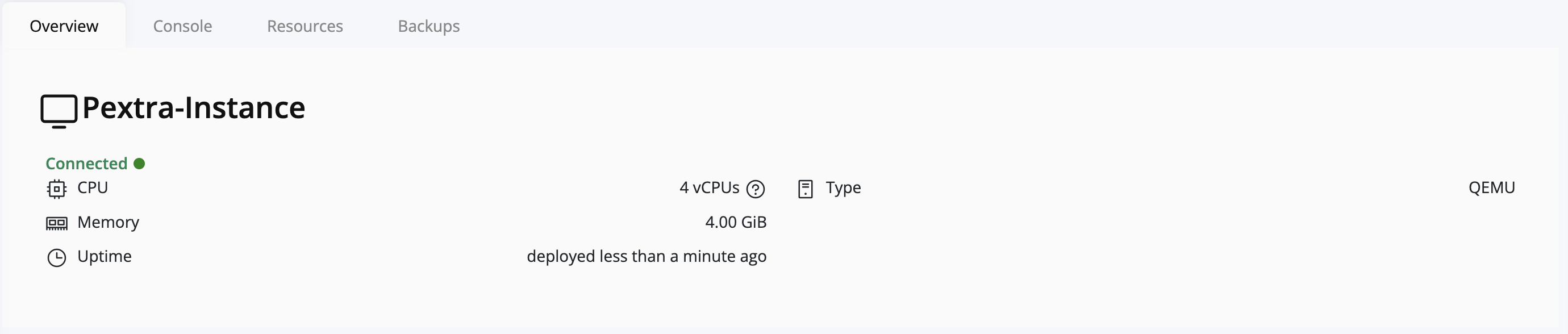

Web Interface

This section describes how to access and navigate the web interface of Pextra CloudEnvironment®. The web interface is the primary tool for managing your deployment, allowing you to perform various tasks, monitor system metrics, and configure settings.

Note

The web interface requires a modern web browser with JavaScript enabled. It is recommended to use Mozilla Firefox or Google Chrome for the best experience.

Accessing the Web Interface

You can access the Pextra CloudEnvironment® web interface by entering the management IP address in your web browser. The default URL is https://<management-ip>:5007, where <management-ip> is the IP address you configured during the installation process.

Note

The web interface uses HTTPS for secure communication. You will see a self-signed certificate warning in your browser. This is normal, as the certificate is generated during the installation process. You can safely ignore this warning and proceed to the web interface.

Logging In

There are two main steps to access the web interface:

- Organization discovery (only applicable to multi-org deployments)

- Authenticating with user credentials

Note

For the web interface to function correctly, ensure that your user account has the necessary permissions, as outlined in the Web Interface Permissions section.

Organization Discovery (Multi-Org Only)

For security reasons, multi-organization deployments require you to explicitly select the target organization before logging in. Most deployments run a single organization, so you can usually proceed straight to authentication.

To select an organization:

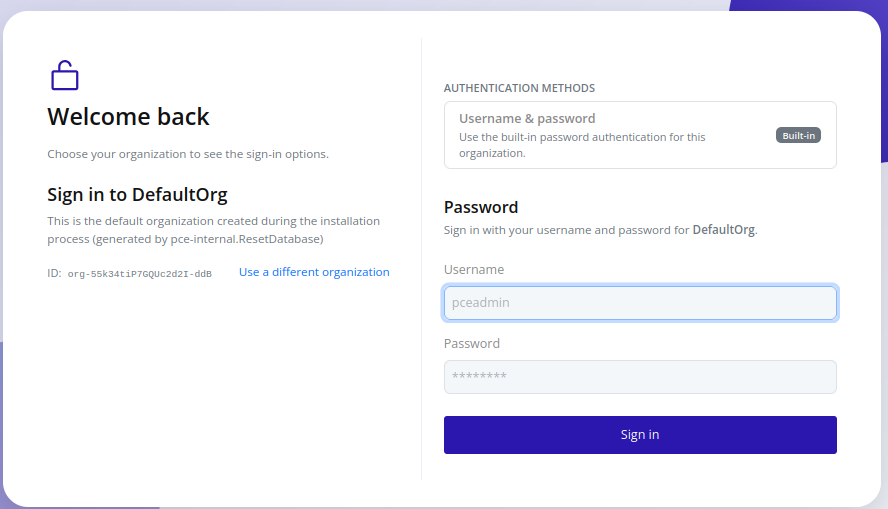

- Navigate to the web interface. The UI preselects

DefaultOrg(created during installation):

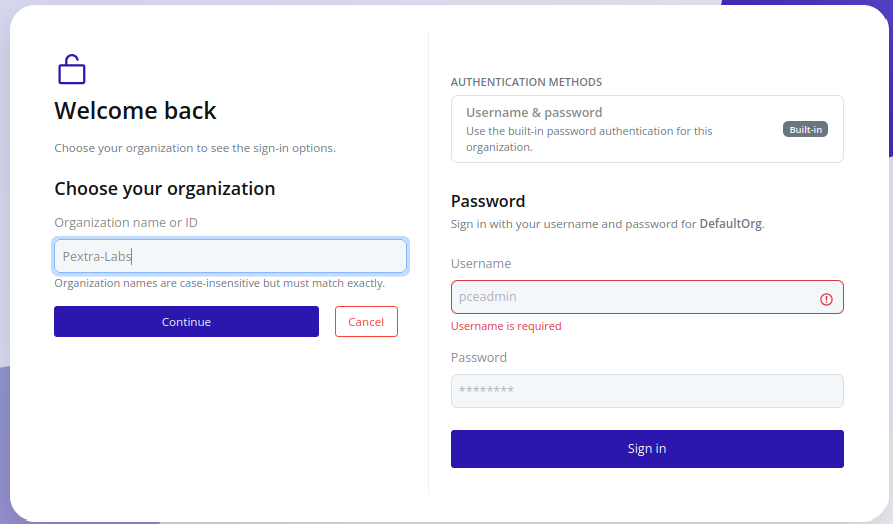

- Click Use a different organization, and enter the organization Name or ID for an exact, case-insensitive match.

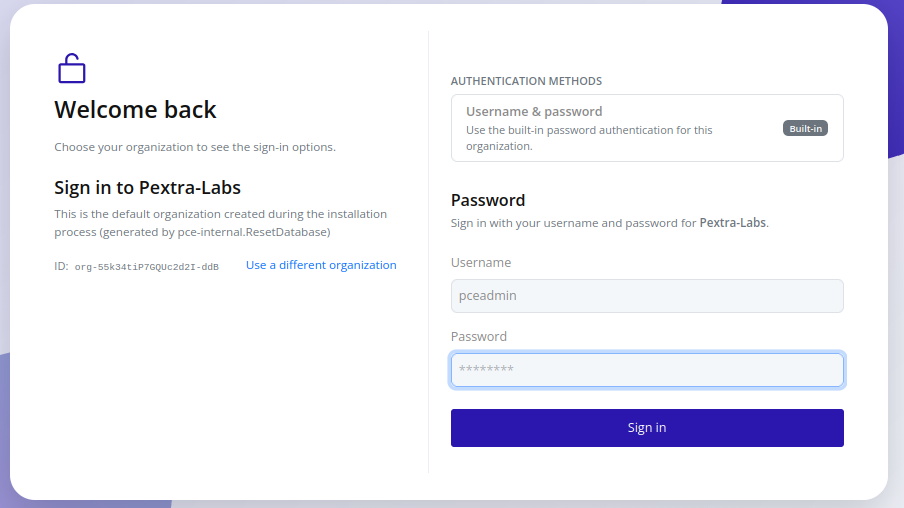

- Confirm the selection. If the organization exists and your user belongs to it, the authentication form appears.

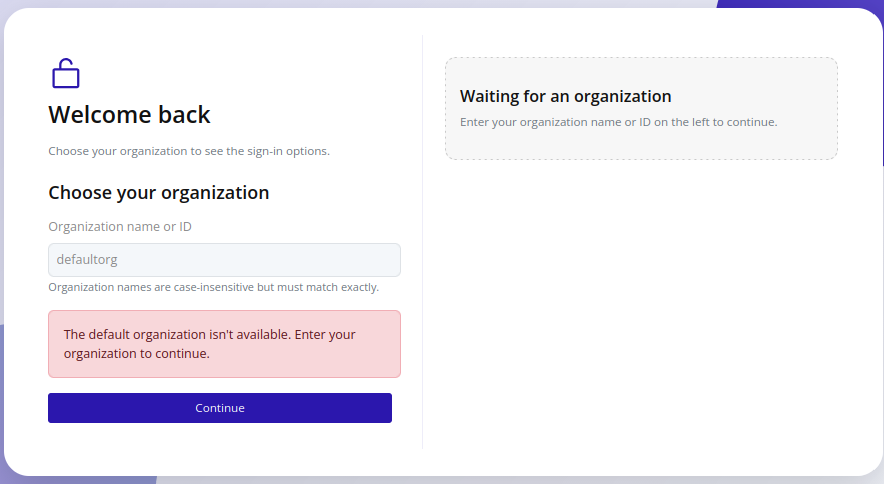

In the case that the default organization is renamed or deleted, the login page displays an error and prompts you to select a different organization:

Tip

Keep a short list of approved organization IDs (

org-...) for multi-tenant deployments.

You can now proceed to authentication.

Authentication

- Use the built-in username/password or an external identity provider (IdP), if configured:

- For IdP logins, click the relevant button and follow the provider’s authentication flow.

- For built-in username/password, enter the username and password (the default username is

pceadmin, and the password is set during installation).

- If successful, you are redirected to the main dashboard1.

Note

Too many incorrect login attempts in a short period will temporarily lock your account. Contact an administrator to unlock it.

Notes

-

The IP address that you connect to (this is especially relevant for nodes in a cluster). The node that you are currently connected to is shown with a light green dot next to the node’s entry in the resource tree. All requests are proxied to the node that you are connected to. ↩

Web Interface Permissions

To use the web interface, a user must have at least the following permissions:

organization.list: Allows the user to list organizations. Despite the name, this only allows listing the current organization for non-root users.organization.read: Allows the user to read organization details. This is required in order to render the resource tree.

Without these permissions, the web interface will not function correctly. It is recommended to create a policy that includes these permissions and assign it to all roles that require web interface access.

Note

These permissions are the bare minimum required to log in and render the web interface. Additional permissions should be granted based on the specific actions the user needs to perform within the interface.

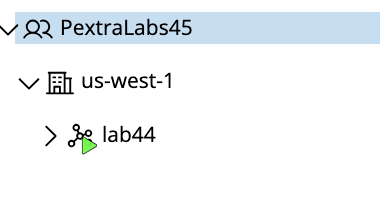

Resource Tree

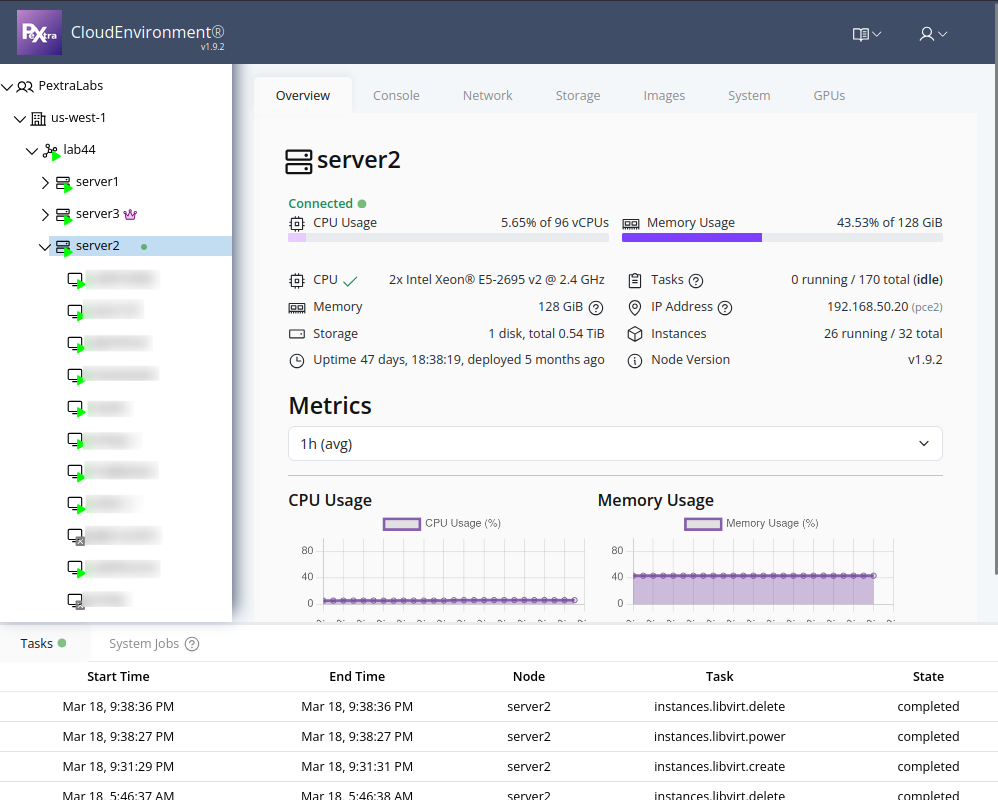

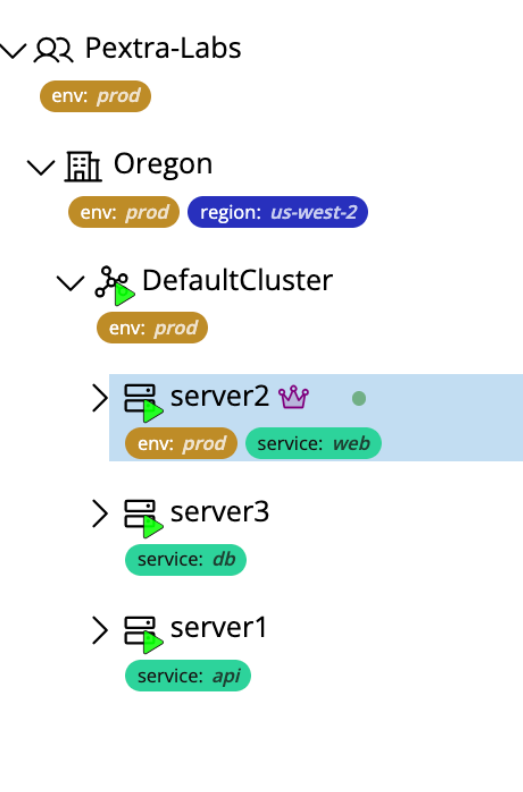

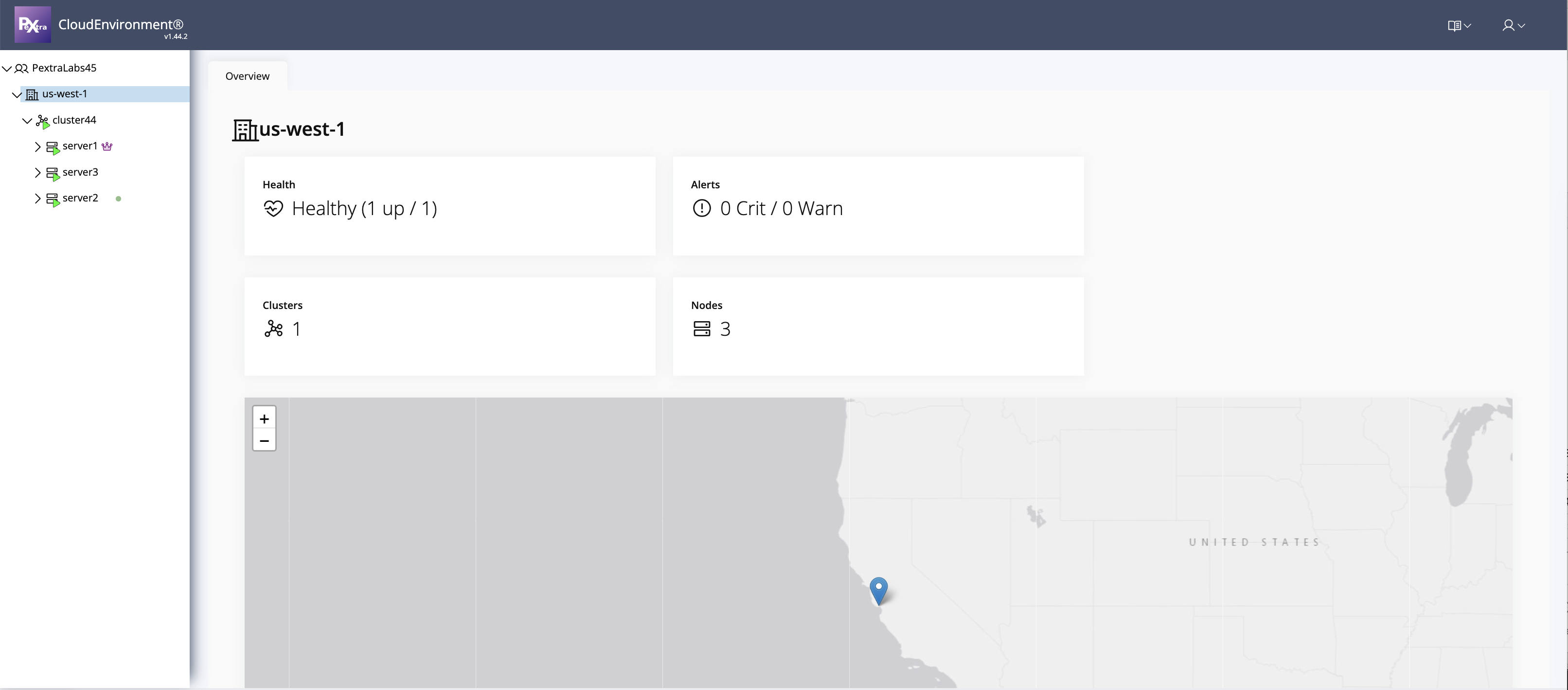

On the left side of the web interface, you will find a tree view that displays the hierarchy of your deployment. This view provides a complete overview of all organizations, datacenters, clusters, nodes, and instances within your deployment. You can expand and collapse the tree’s nodes to navigate through the different levels of your infrastructure:

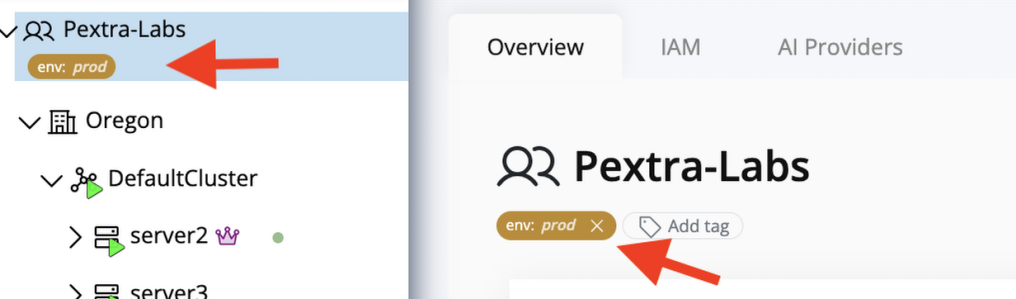

There may be tag pills next to some resources in the tree. To learn more about tags, refer to the Tags section.

Tags

Resource tags let you annotate various resources with key/value metadata. Keys are required, values are optional, and tags appear anywhere the resource identity is shown (resource tree, selectors, and detail panes). This makes it easy to track ownership, label business units, or indicate lifecycle stages.

Tip

Tag colors are generated automatically from the key name. The color is deterministic, which keeps the same key visually consistent across the UI.

Supported Resource Types

Currently, the following resource types support tags:

- Organizations

- Datacenters

- Clusters

- Nodes

- Instances

Important

Tags with keys starting with

pce_are reserved for system use. Attempting to create, edit, or delete a tag with this prefix will fail.

View Tags

Tags appear as color-coded pills that display the key and optional value. Tag values are abbreviated, and can be viewed in full by hovering over the pill.

Note

You must have the

tag.listpermission on the resource in order to view tags.

Resource Tree

Tags appear next to the resource name in the resource tree:

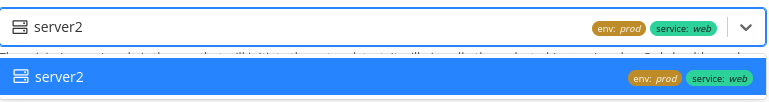

Dropdowns & Selectors

Tags also appear in any dropdown that lists the resource:

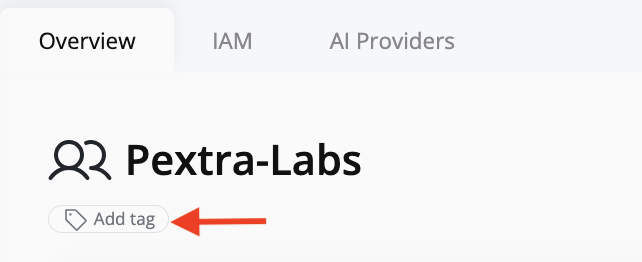

Add a Tag

You can assign tags from each resource’s detail page.

Note

You must have the

tag.createpermission on the resource in order to add tags.

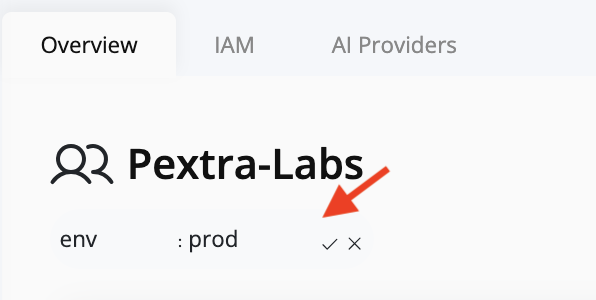

- Click Add Tag on the resource page.

- The button converts into two inline fields: Key and Value

- Enter the key and optional value. Tag keys must be unique per resource.

- Select the check icon to save the tag or the X icon to cancel.

- Confirm the new tag appears before the Add Tag button. The UI immediately propagates the change to dropdowns and the resource tree.

Important

Tags with keys starting with

pce_are reserved for system use. Attempting to create, edit, or delete a tag with this prefix will fail.

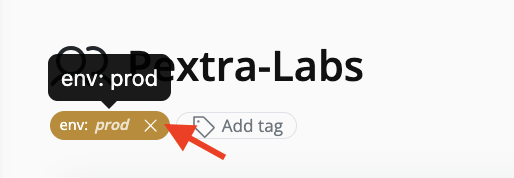

Remove a Tag

You can remove tags from each resource’s detail page.

Note

You must have the

tag.deletepermission on the resource in order to remove tags.

- On the resource page, locate the tag you want to remove.

- Click the X icon on the tag pill.

- The tag is immediately removed. The UI propagates the change to dropdowns and the resource tree.

Important

Tags with keys starting with

pce_are reserved for system use. Attempting to create, edit, or delete a tag with this prefix will fail.

AI Features

The Pextra CloudEnvironment® web interface includes two AI integrations:

-

Pextra Cortex™ (beta): A fully-capable AI agent for planning and executing complex orchestration tasks within Pextra CloudEnvironment®.

-

AI Assist: Context-sensitive AI completion and suggestions.

Note

An administrator of the organization must configure at least one AI provider for AI features to function. If no AI providers are configured, AI features will not be available in the web interface.

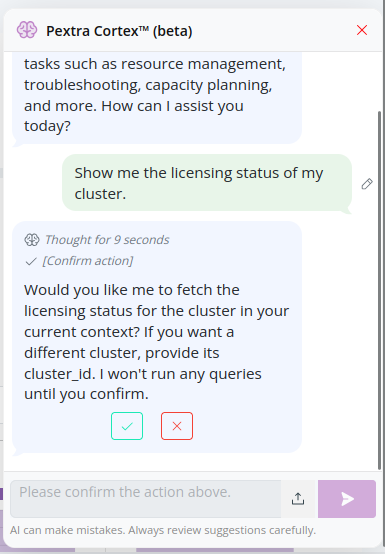

Pextra Cortex™

Pextra Cortex™ is an advanced AI agent integrated into the Pextra CloudEnvironment® web interface. It is designed to assist users in planning and executing complex orchestration tasks, by leveraging the open-source Model Context Protocol (MCP) standard. Pextra Cortex™ can understand high-level user instructions and screenshots and translate them into actionable, executable plans1.

Note

An administrator of the organization must configure at least one AI provider for AI features to function. If no AI providers are configured, AI features will not be available in the web interface.

Usage

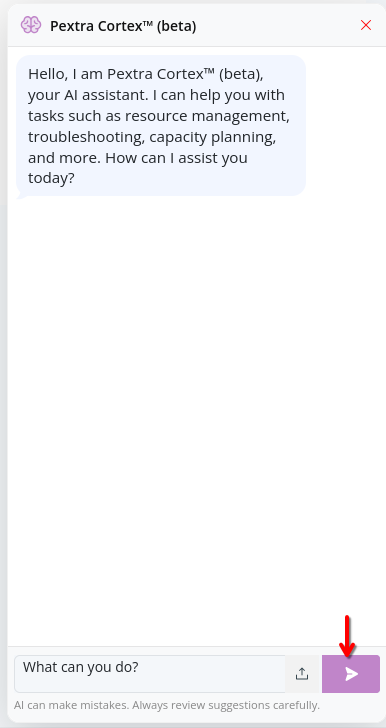

-

To access Pextra Cortex™, click on the message bubble icon in the bottom-right corner of the web interface:

-

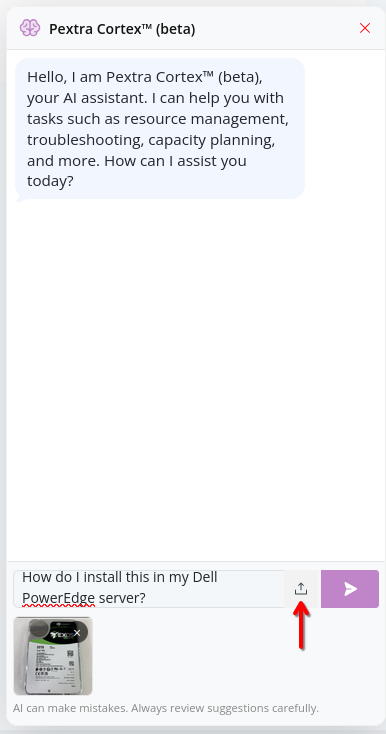

Provide instructions in natural language, then click the Send button. You can also attach screenshots:

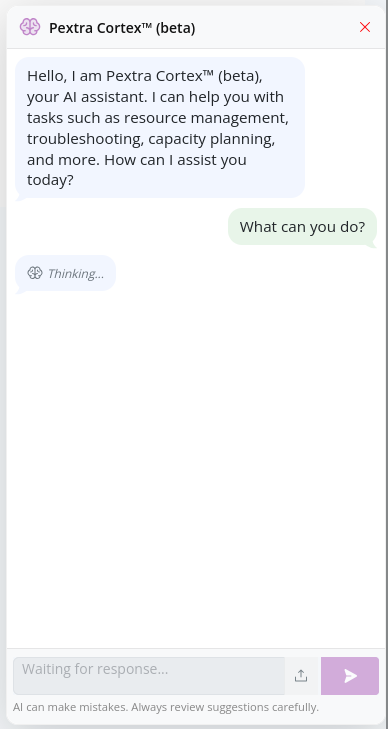

-

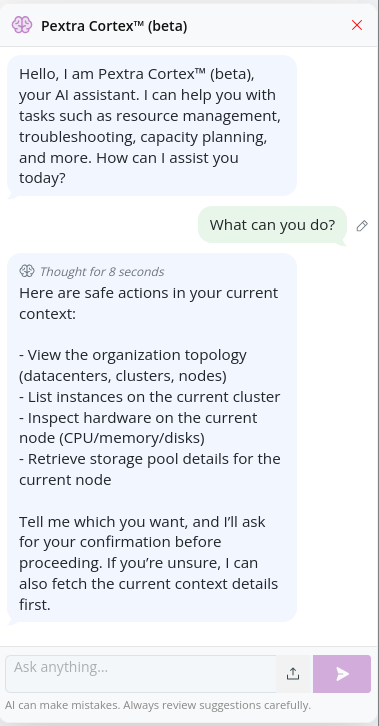

Pextra Cortex™ will spend a few moments thinking. During this time, it may ask for clarifications or additional information to better understand your request:

-

A response will be generated:

-

For safety reasons, before executing any AI-generated plans, Pextra Cortex™ asks for user confirmation:

Running Locally

Note

It is recommended to use Pextra Cortex™ through the web interface for the best experience, as it is fully integrated and optimized for Pextra CloudEnvironment®.

Refer to the MCP server repository that powers Pextra Cortex™. This allows you to use a desktop app or command-line interface (CLI) to build AI agents that interact with Pextra CloudEnvironment®.

Notes

-

Pextra AI features are powered by third-party AI providers, as configured in your organization settings. The quality and accuracy of responses may vary based on the provider and the specific task at hand. Always review AI-generated content. ↩

AI Assist

Throughout the Pextra CloudEnvironment® web interface, you will find the AI Assist button, which provides context-sensitive suggestions and assistance. Describe your task in natural language, and the AI Assist feature will generate relevant suggestions to facilitate your work1.

See the example below for a demonstration of how to use the AI Assist feature.

Before:

After:

After:

Note

An administrator of the organization must configure at least one AI provider for AI features to function. If no AI providers are configured, AI features will not be available in the web interface.

Notes

-

Pextra AI features are powered by third-party AI providers, as configured in your organization settings. The quality and accuracy of responses may vary based on the provider and the specific task at hand. Always review AI-generated content. ↩

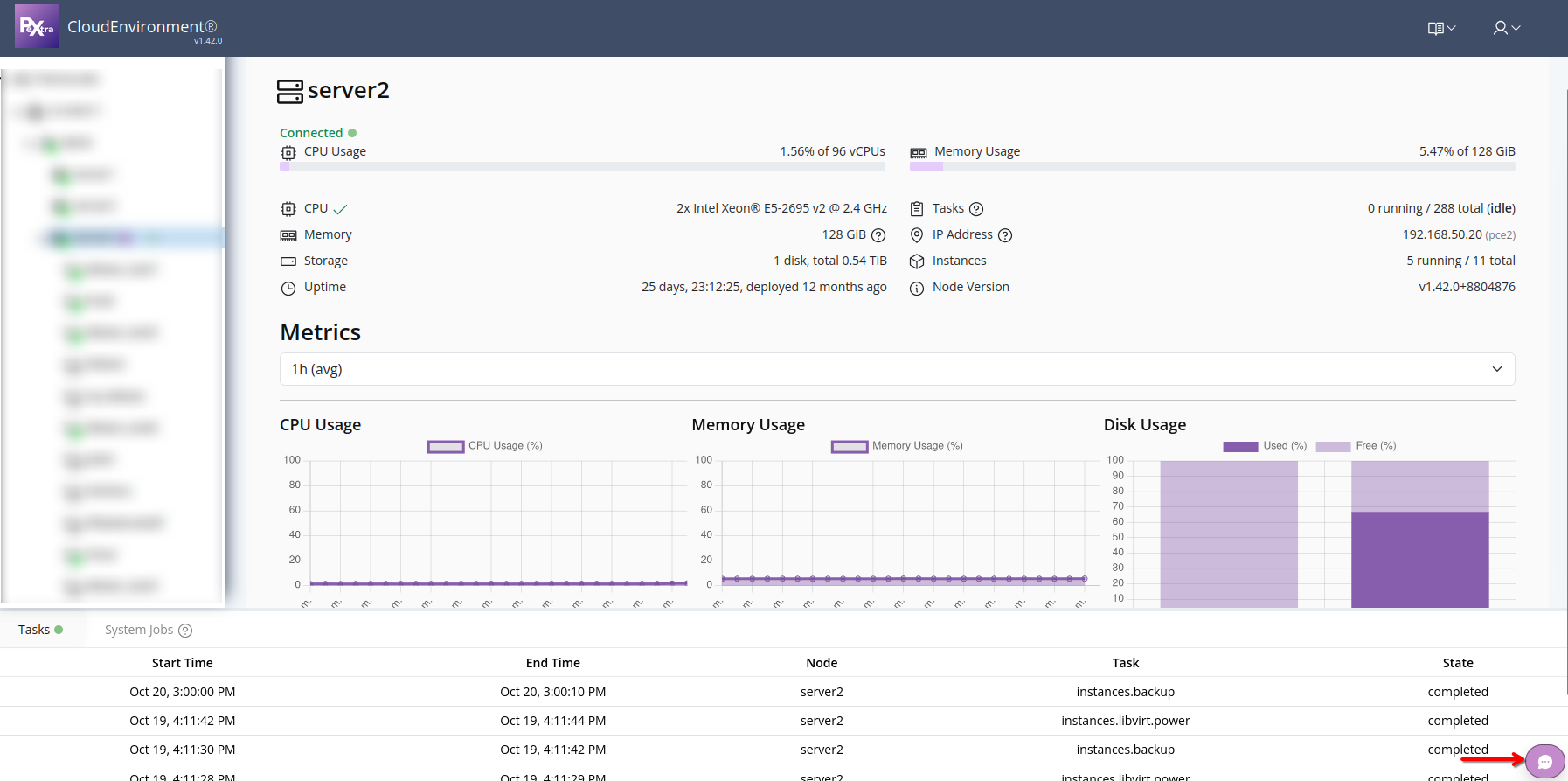

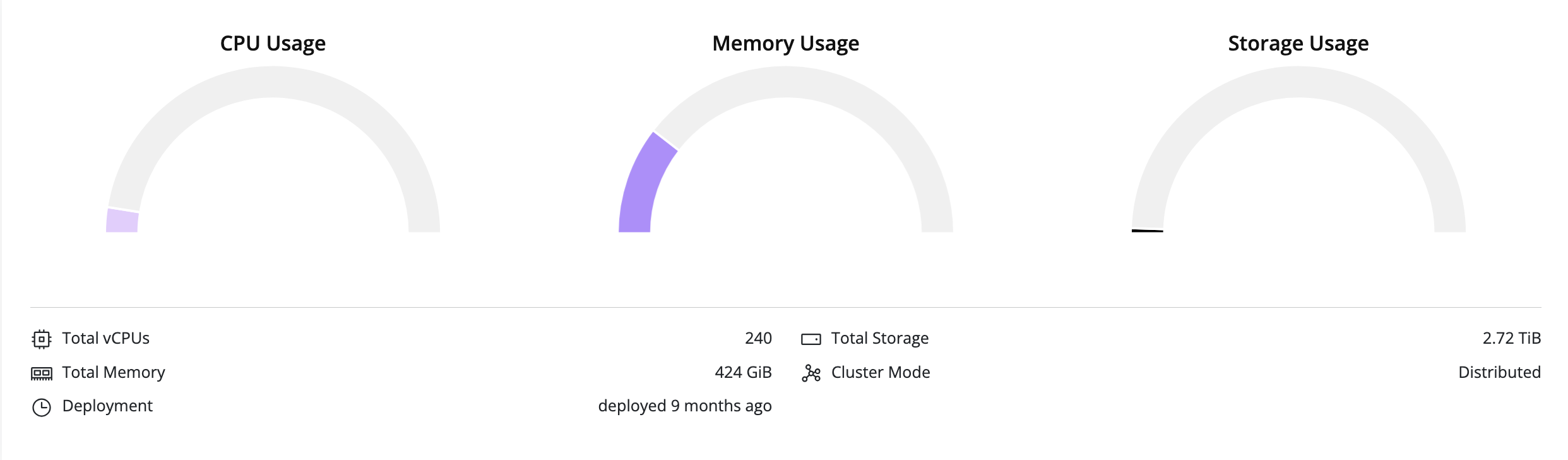

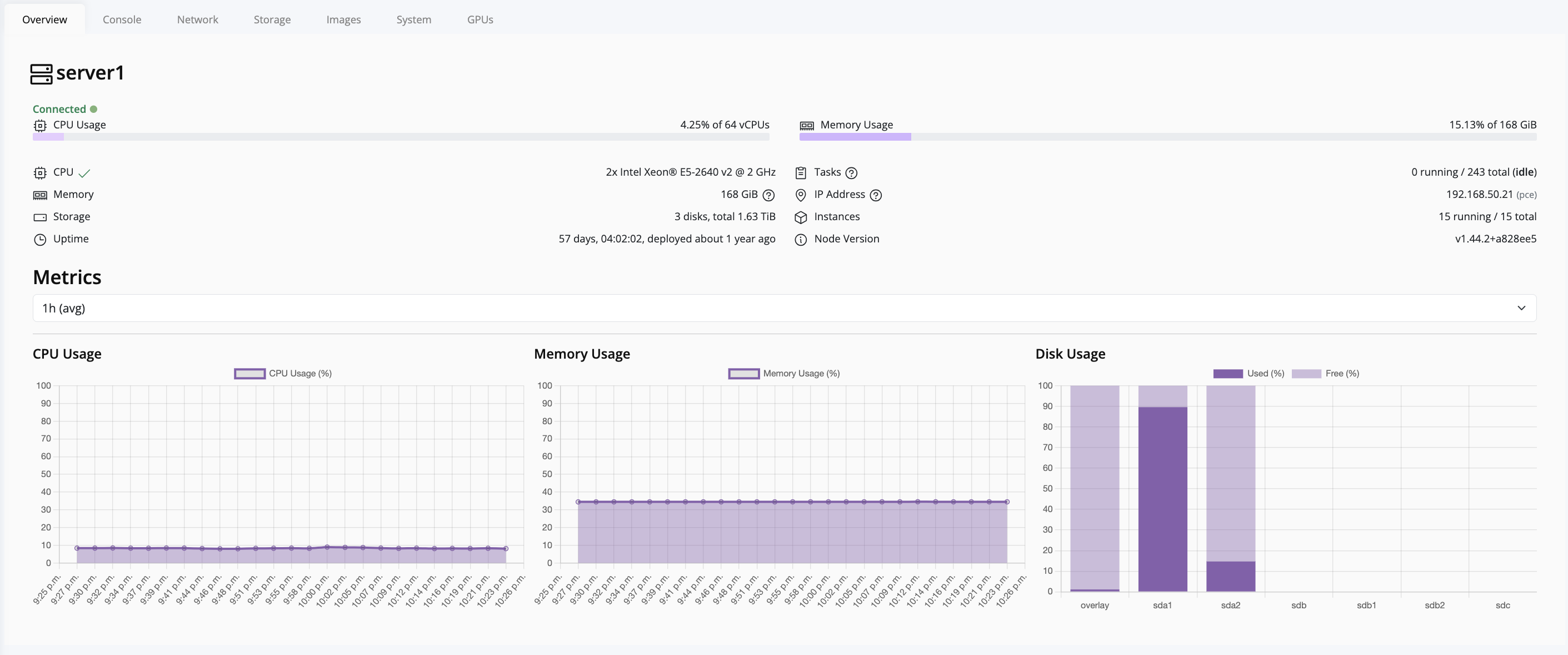

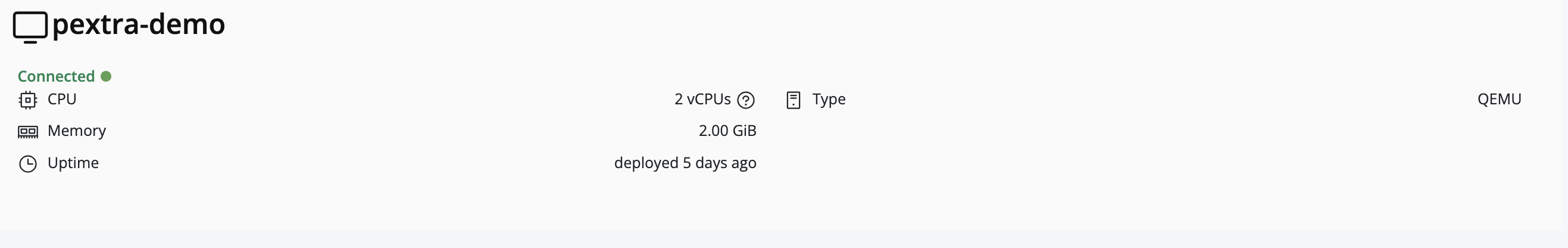

Node Management

This section provides a guide to managing individual nodes within your deployment.

Nodes are the physical or virtual servers1 that run Pextra CloudEnvironment®. They serve as the foundation of your infrastructure, providing the compute, storage, and network resources required by your deployment.

The ID prefix for nodes is node-2.

Notes

-

Running Pextra CloudEnvironment® in a virtual machine is in beta. Try running Pextra CloudEnvironment® inside of Pextra CloudEnvironment®! ↩

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Node IDs will have the prefix

node-, followed by a unique identifier (e.g.,node-qthm_iLrflJ_DtSS1l4Gx). ↩

System Upgrade

System upgrades should be routinely performed in order to ensure that the latest bug fixes, security patches, and features are available.

Note

A valid license key must be present when upgrading Pextra CloudEnvironment®. To set the node’s license key, refer to the Set License Key section.

Warning

System upgrades will fail if they are not run as the

rootLinux user.

Console

-

Access the node’s console through SSH or through the “Console” tab in the node view.

-

First, update the node’s package index by running the following command:

apt updateThis command may take some time to finish depending on the node’s connection speed.

-

If any system upgrades are available, the following message will be shown:

[xx] packages can be upgraded. Run 'apt list --upgradable' to see them.If this message is not shown, the node is on the latest version. No action is required.

-

If the above message is shown, the node can be upgraded to the latest version by running the following command:

apt upgradeThis command may take a while to finish depending on the number of upgrades and the node’s connection speed.

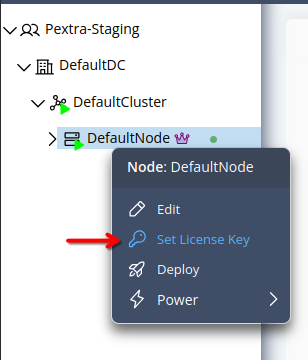

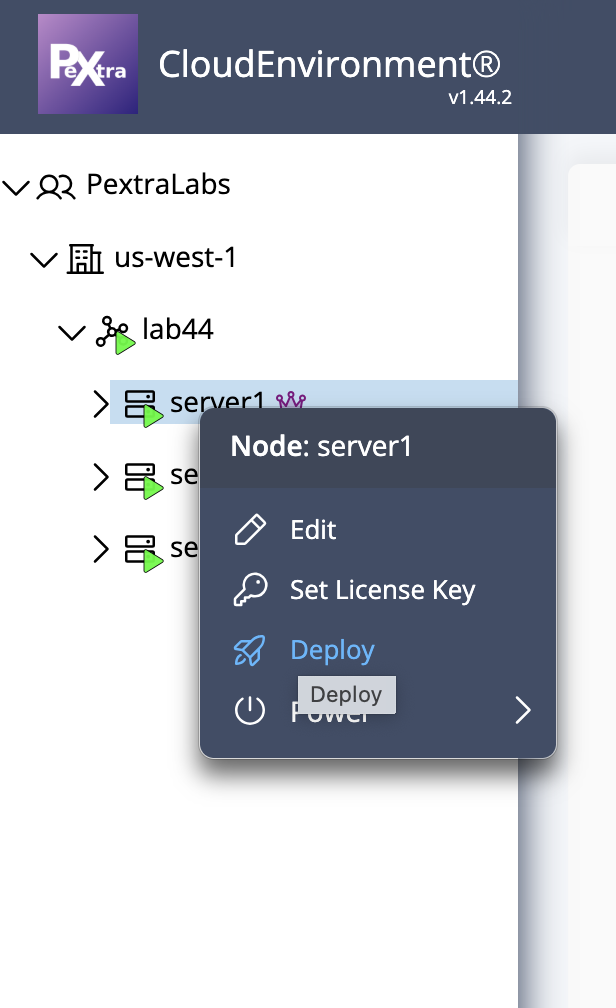

Set License Key

License keys are long-lived and typically do not need to be changed. However, if you need to change the license key, you can do so by following these steps:

Note

The Pextra CloudEnvironment® license key is separate from the CockroachDB license key. Both must be configured. For CockroachDB steps, refer to the Set CockroachDB License Key section.

Tip

License keys can be purchased from the Pextra Customer Portal. Support subscriptions are also available for purchase.

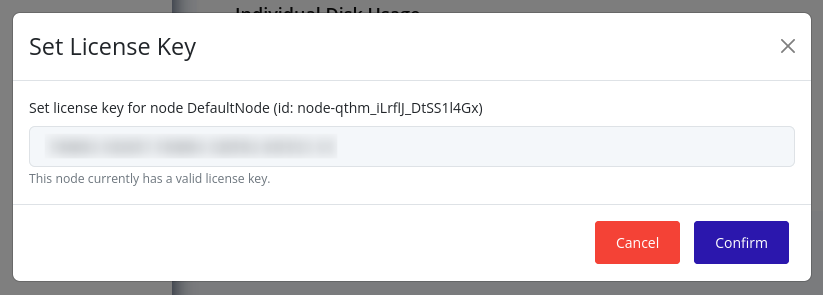

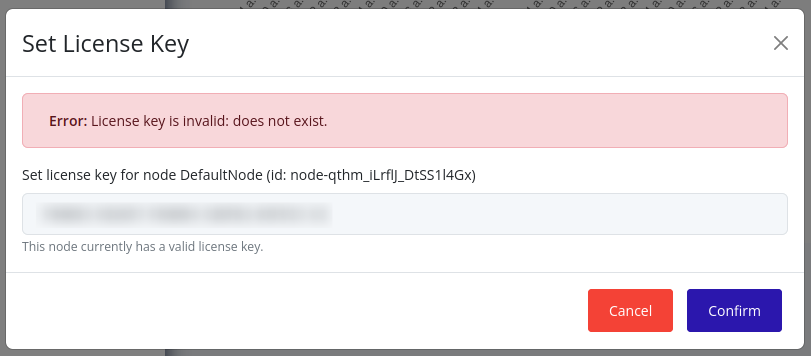

Web Interface

-

Right-click on the node in the resource tree and select Set License Key:

-

A modal will appear. The current license key along with its validity will be displayed. Enter the new license key in the text box and click Confirm:

-

If any errors occur, they will be displayed, otherwise, the modal will close. For example:

To confirm that the license key has been set, you can check the licensing status of the node’s cluster.

Set CockroachDB License Key

Pextra CloudEnvironment®’s highly-scalable private cloud is built on CockroachDB’s distributed architecture. The CockroachDB license key is cluster-wide (a cluster only needs one CockroachDB license key).

Warning

Pextra CloudEnvironment® will not be functional after one week (7 days) without a valid CockroachDB license key.

Warning

When a standalone node joins a cluster, it adopts the cluster’s CockroachDB license setting. Any CockroachDB license key previously set on that node is discarded.

Tip

CockroachDB license keys can be obtained from cockroachlabs.cloud.

Console

-

Access the node’s console through SSH or through the “Console” tab in the node view.

-

First, enter the CockroachDB console by running the following command:

sudo cockroach sql --certs-dir=/usr/local/lib/cockroach/certs -u pextra_ce_pcedaemon -

Set the license key by running the following command in the CockroachDB console:

SET CLUSTER SETTING enterprise.license = '<license key here>'; -

To exit the CockroachDB console, click

CTRL+C.

For more information, visit the CockroachDB licensing FAQs.

Cluster Management

Clusters are logical groupings of nodes that work together to provide compute, storage, and networking resources for running instances and workloads. Pextra CloudEnvironment® supports the creation and management of clusters to facilitate resource allocation, scalability, and high availability.

The ID prefix for clusters is cls-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Cluster IDs have the prefix

cls-, followed by a unique identifier (e.g.,cls-YtR8FqvL29sKjb7WxD3Zn). ↩

Cluster Edit

Editing a Cluster

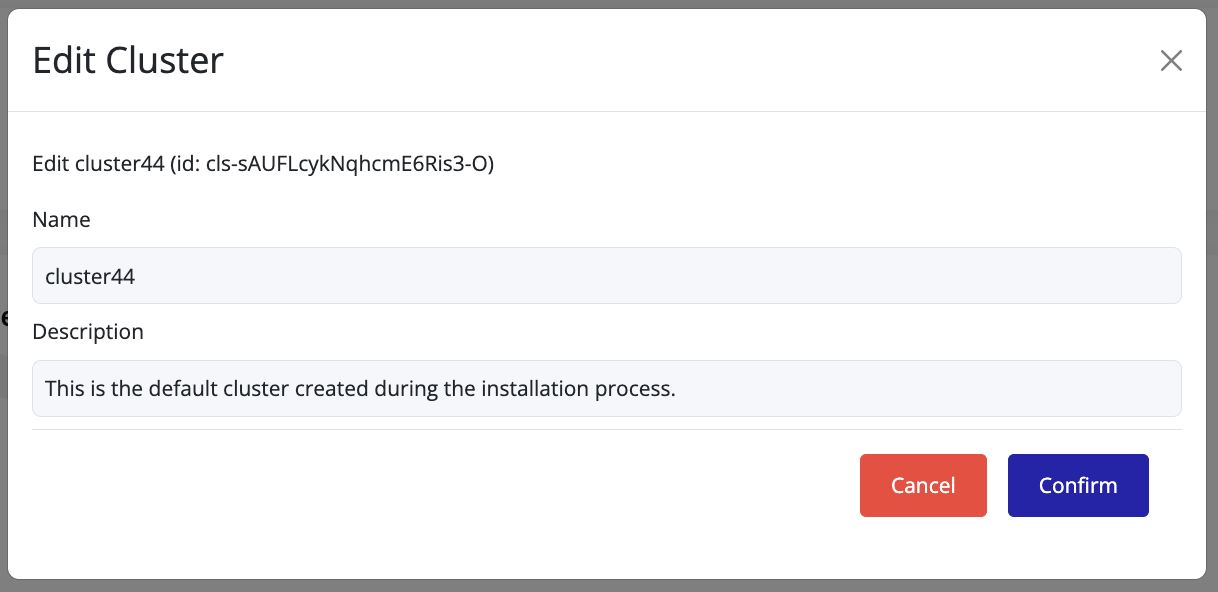

To update a cluster’s name or description:

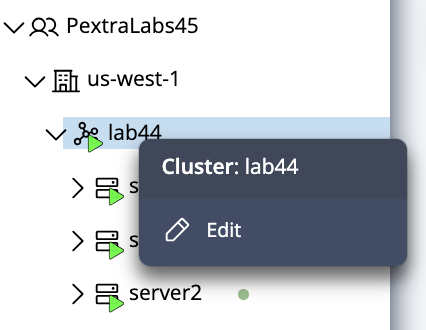

- In the left navigation panel, right-click on the cluster you want to modify.

- Select Edit from the context menu.

- Update the Name and/or Description fields as required.

- Click Confirm to apply the changes.

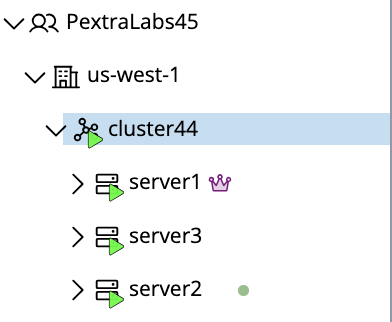

Join Nodes to a Cluster

Clustering allows you to group multiple nodes into a single cluster, enabling resource sharing, redundancy, and centralized management within Pextra CloudEnvironment®.

Tip

After installation, each node initially operates as a standalone cluster with one node. You can join additional nodes to an existing cluster using the join key.

Warning

When joining a cluster, standalone nodes carry over their Pextra CloudEnvironment® license key. However, the CockroachDB license key is discarded, and the node adopts the cluster’s CockroachDB license setting. Refer to the Set CockroachDB License Key for more information.

Creating and Joining a Cluster

Follow these steps to join nodes into an existing cluster:

- Log in directly to the node’s management interface.

- You should see one cluster and one node listed.

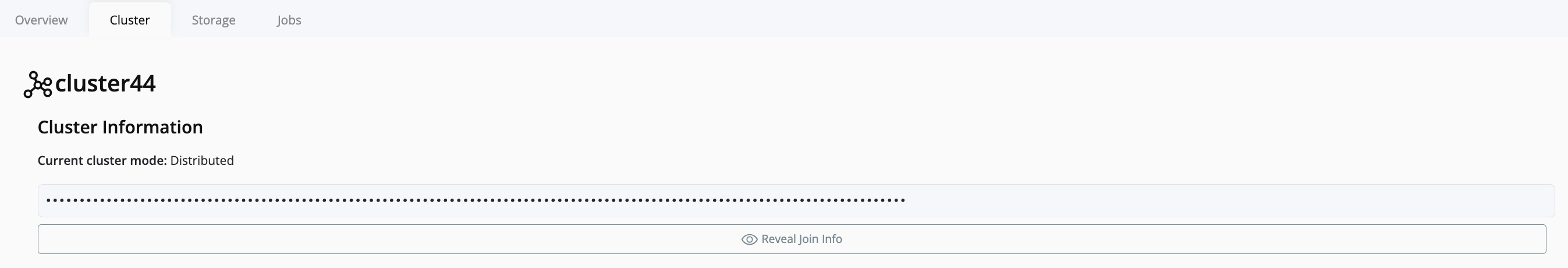

- In the left navigation panel, click on Cluster.

- On the right-hand panel, click on the Cluster you want to extend.

- Obtain the Join Key for that cluster.

- Copy the join key.

- Log in to each new node using its IP address and port

5007(for example,https://<node-ip>:5007). - In the left navigation panel of each new node, click Cluster.

- Click Join Cluster.

- Paste the join key obtained earlier into the provided field and confirm the operation.

- Return to the main cluster view.

- You should now see the newly added node listed as a member of the cluster.

Once the process is complete, all joined nodes operate under a unified cluster, enabling centralized management of workloads, networking, and resources.

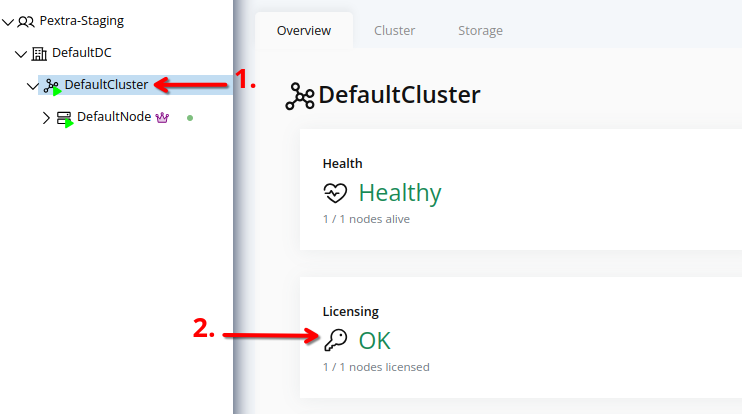

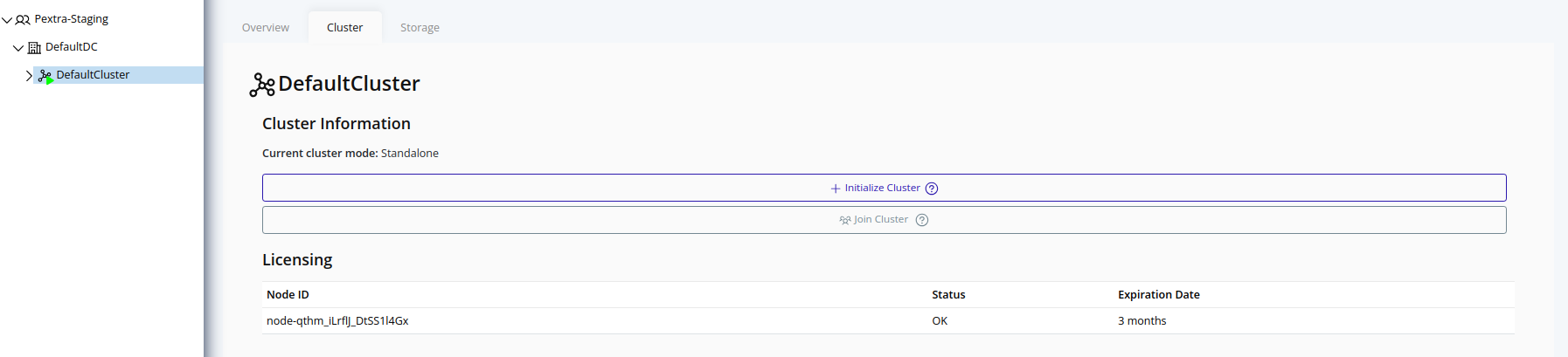

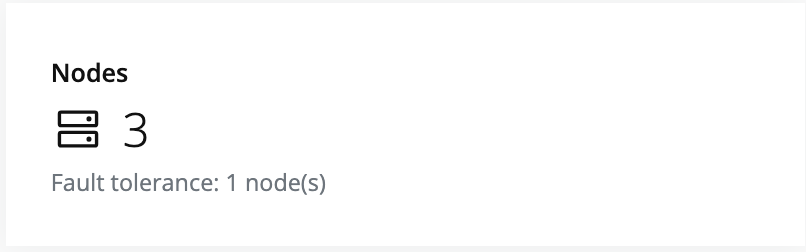

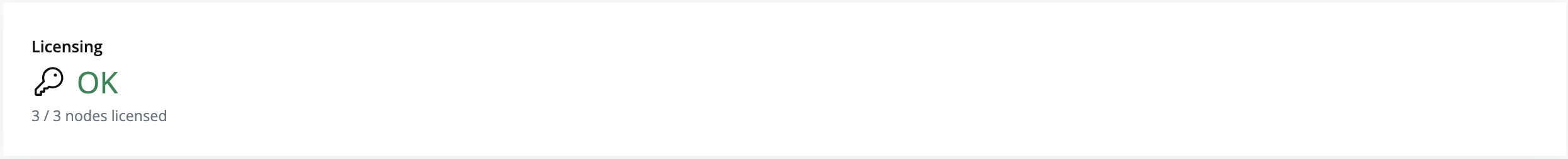

Check Licensing Status

Use the cluster licensing view to make sure every node presents a valid, unexpired license key.

Tip

License keys can be purchased from the Pextra Customer Portal. Support subscriptions are also available for purchase.

Compliance Rules

Refer to the Licensing Compliance Rules section for details on cluster licensing compliance rules.

Web Interface

- Select the cluster in the resource tree and view the page on the right. A card with a quick overview of the licensing status will be displayed:

- For a detailed view, click on the Cluster tab in the right pane. The licensing status of each node in the cluster will be displayed:

For more information on the Additional Features table, refer to the Licensing section.

Datacenter Management

Datacenters represent logical groupings of clusters within an organization. They help structure resources and provide administrative boundaries for managing clusters and nodes.

Note

The

MULTI_DATACENTERfeature flag must be enabled to create more than one datacenter per organization. See the Feature Gating section for details.

Currently, datacenter creation is supported only via the API. This will be addressed in a future release.

The ID prefix for datacenters is dc-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Datacenter IDs have the prefix

dc-, followed by a unique identifier (e.g.,dc-VrP6KxEw19aQb2hTnY4Ld). ↩

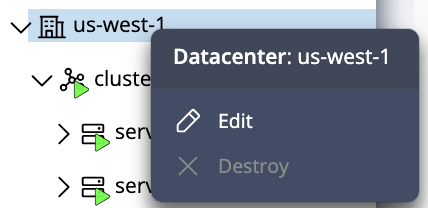

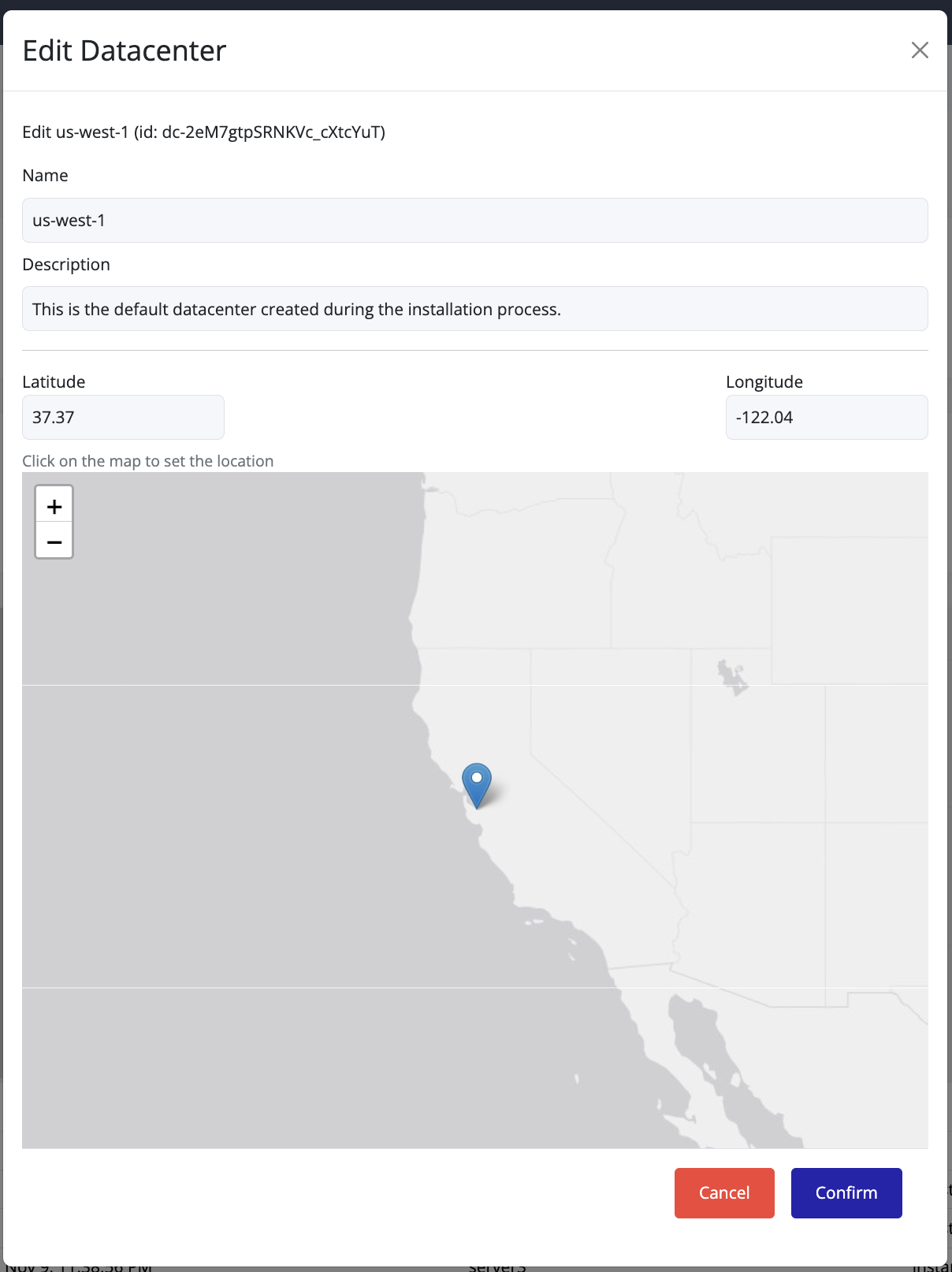

Edit Datacenter

Editing a Datacenter

You can update a datacenter’s name, description, or location directly from the UI:

- In the left navigation panel, right-click on the datacenter you want to edit.

- Select Edit from the context menu.

- Update the Name, Description, or Location fields as needed.

- Click Confirm to save the changes.

Tip

To change the geographical location, click directly on the desired point on the map.

The latitude and longitude values are computed automatically.

Avoid manually entering coordinates—simply select the location on the map and then click Confirm.

Organization Management

Organizations are top-level isolated tenants within Pextra CloudEnvironment®. They encapsulate datacenters, clusters, nodes, and resources, providing administrative boundaries for managing users, permissions, and resource allocation.

Currently, creating a new organization is supported only via the API. This will be addressed in a future release.

Note

Multiple organizations require the

MULTI_TENANCYfeature flag. See the Feature Gating section for details.

The ID prefix for organizations is org-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Organization IDs have the prefix

org-, followed by a unique identifier (e.g.,org-CIgLySkaAVeQ5kSLOodD3). ↩

Licensing

Pextra CloudEnvironment® uses a simple licensing model to manage access and feature availability in a multi-organization environment. Each node requires a valid, unexpired license key to operate; these licenses are aggregated at the cluster and organization levels to determine respective licensing status and edition.

Tip

License keys can be purchased from the Pextra Customer Portal. Support subscriptions are also available for purchase.

Compliance Rules

The following rules determine licensing compliance at both the cluster and organization levels.

Cluster

For a cluster to be compliant:

- All nodes must present a valid, unexpired license.

- All nodes must share the same edition. If multiple editions are detected, the cluster automatically falls back to the lowest detected edition until compliance is restored.

Organization

For an organization to be compliant:

- All clusters must be compliant.

- The highest edition among compliant clusters determines the organization edition. Non-compliant clusters do not contribute to the organization-level edition.

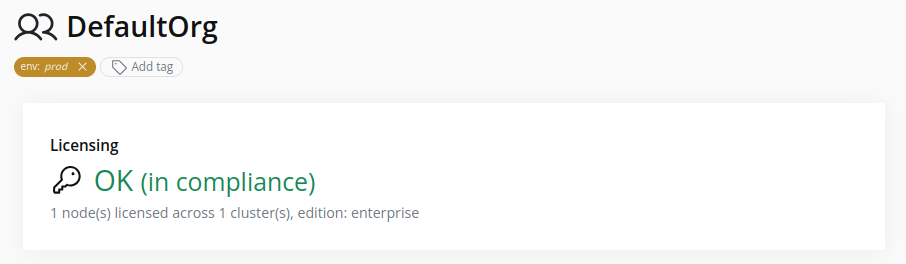

View Licensing Status

- Select the organization in the resource tree.

- Locate the Licensing card in the right pane. It shows the current edition and status:

- The organization edition is also displayed in the top-left corner of the web interface:

To check a cluster’s licensing status within the organization, refer to the Check Licensing Status section.

Feature Gating

The following feature flags currently require the Enterprise edition (or higher):

| Feature Flag | Description |

|---|---|

IAM_ABAC_RULES | Enables dynamic ABAC expressions inside IAM policy statements. |

MULTI_TENANCY | Allows creating multiple organizations (tenants) inside a single deployment. |

MULTI_DATACENTER | Enables multiple datacenters per organization. |

MULTI_CLUSTER | Enables multiple clusters inside a single datacenter. |

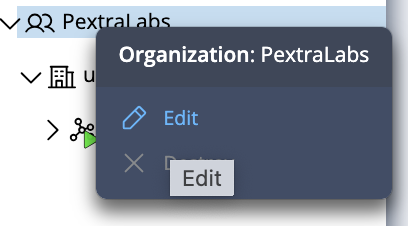

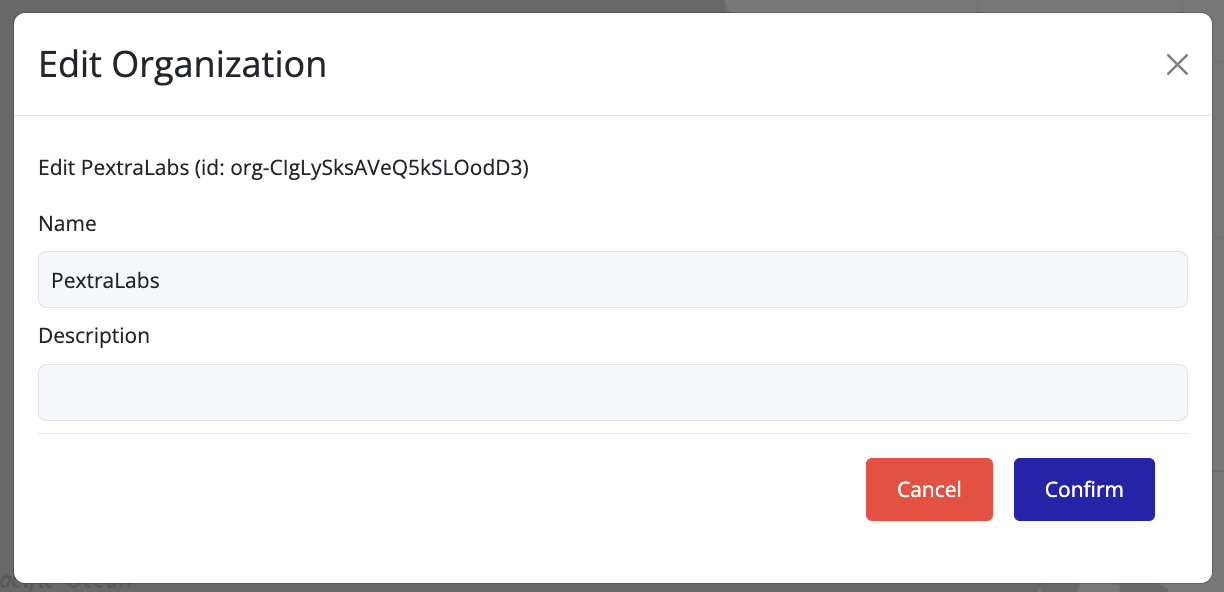

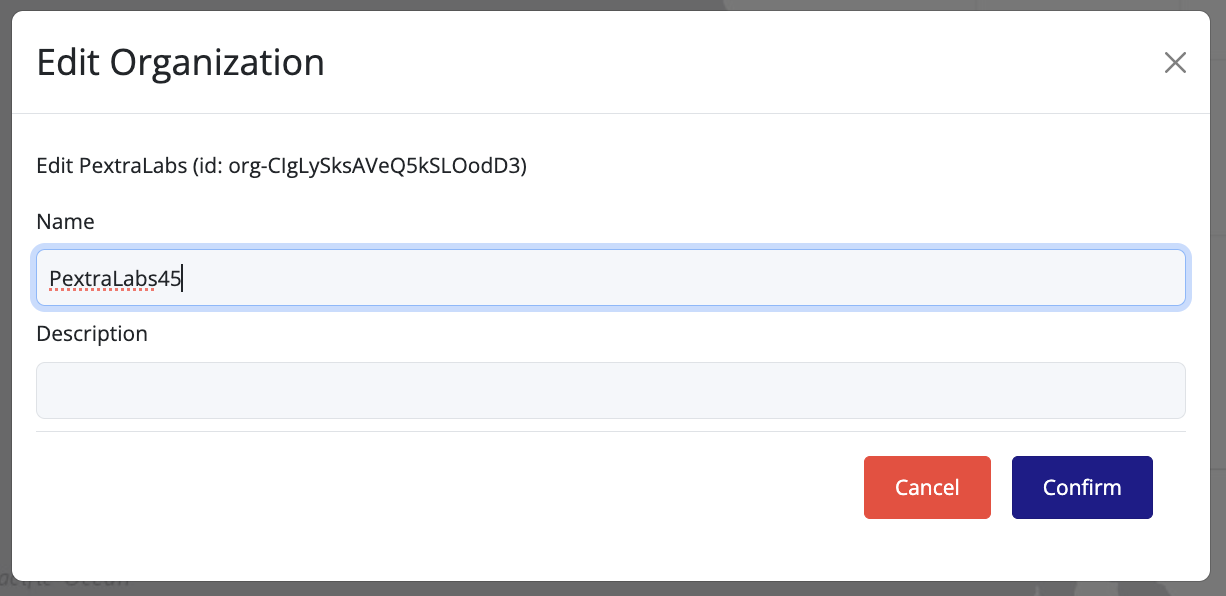

Edit Organization

Editing an Organization

You can update an organization’s name or description from the UI:

- In the left navigation panel, right-click on the organization you want to edit.

- Select Edit from the context menu.

- Update the Name and/or Description fields as needed.

- Click Confirm to save the changes.

Identity and Access Management (IAM)

Identity and Access Management (IAM) controls who can interact with resources inside an organization. IAM is organization-scoped: every action you perform through this view is limited to the organization currently selected in the resource tree.

Pextra CloudEnvironment® uses a dynamic, attribute-based access control (ABAC) model. Permissions are granted to users via roles, which are collections of policies. Policies define fine-grained permissions using statements that allow or deny specific actions on resources and environmental attributes.

Note

The

IAM_ABAC_RULESfeature flag must be enabled to create policies with ABAC rules. See the Feature Gating section for details.

Key Concepts

- Users are created per organization. Refer to the Users section for user management steps.

- Policies define the actual permissions. Refer to the Policies section for statement structure and limits.

- Roles are attached policies, and are assigned to users. Refer to the Roles section for creation, assignment, and deletion steps.

Tip

Keep the root user as a break-glass account. Assign it a strong password and store it securely. Create separate admin users with roles that provide the necessary permissions for daily operations.

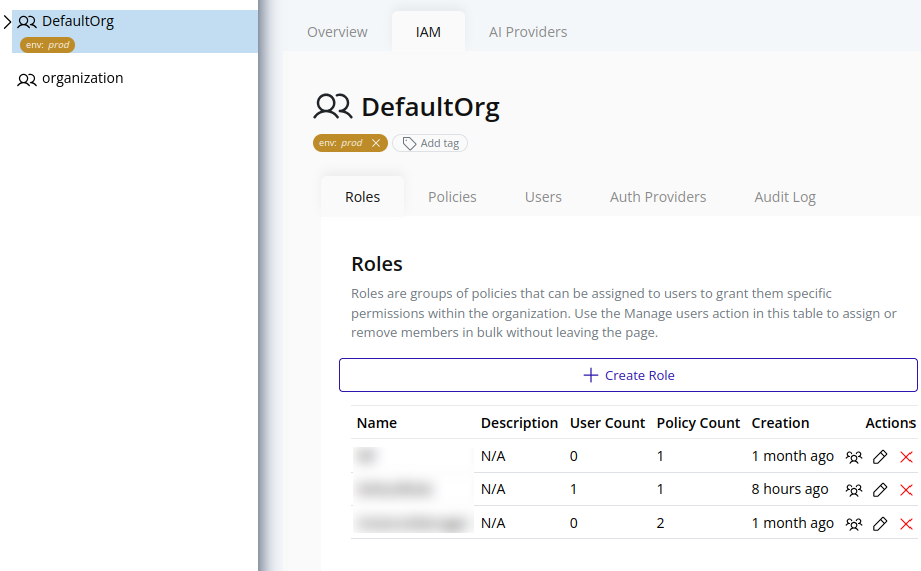

Roles

Roles are collections of policies that bundle permissions for assignment to users. Assigning a role to a user grants them all the permissions defined in the role’s attached policies. Roles simplify permission management by allowing administrators to group related policies and assign them as a single unit.

The ID prefix for roles is iamrole-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Role IDs have the prefix

iamrole-, followed by a unique identifier (e.g.,iamrole-YtR8FqvL29sKjb7WxD3Zn). ↩

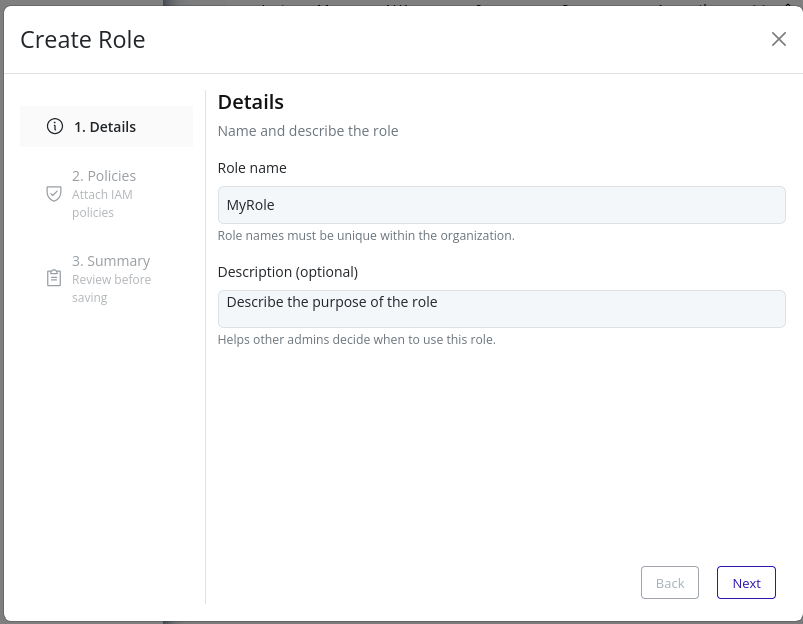

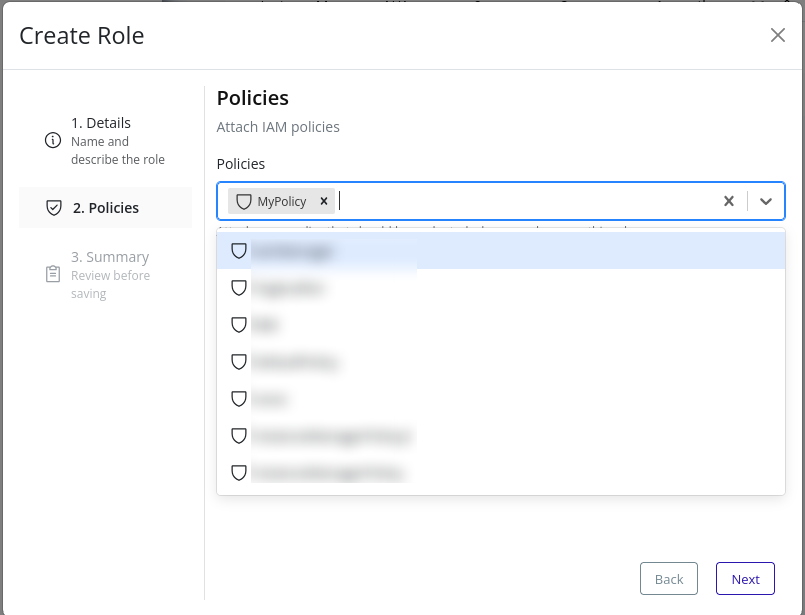

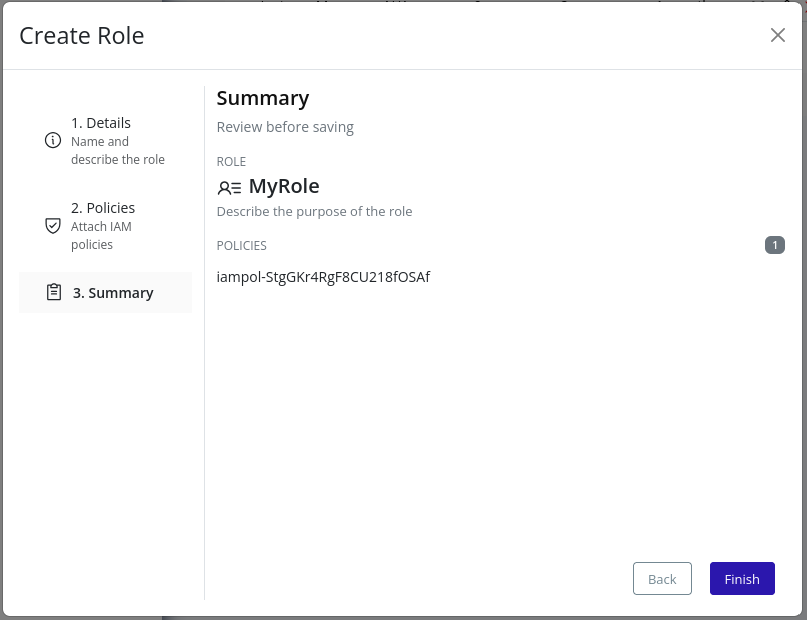

Create Role

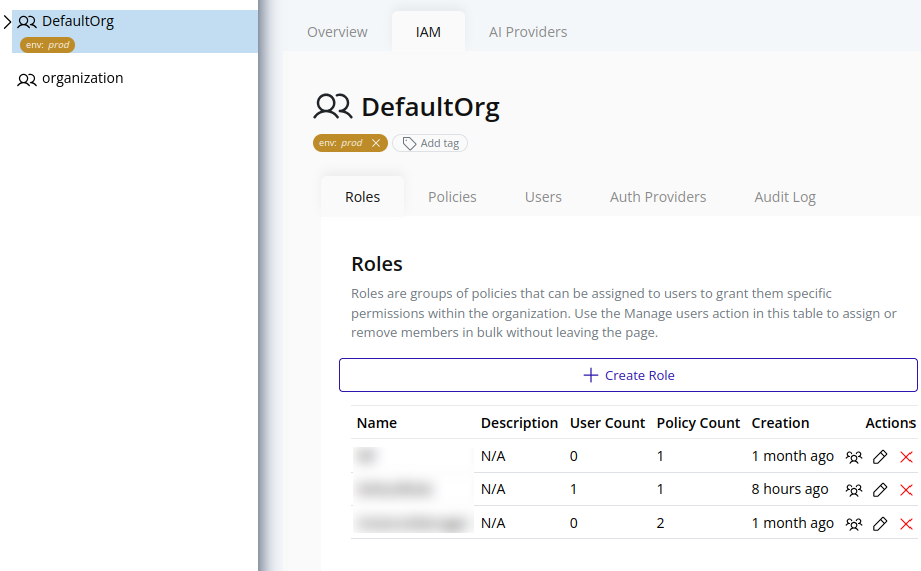

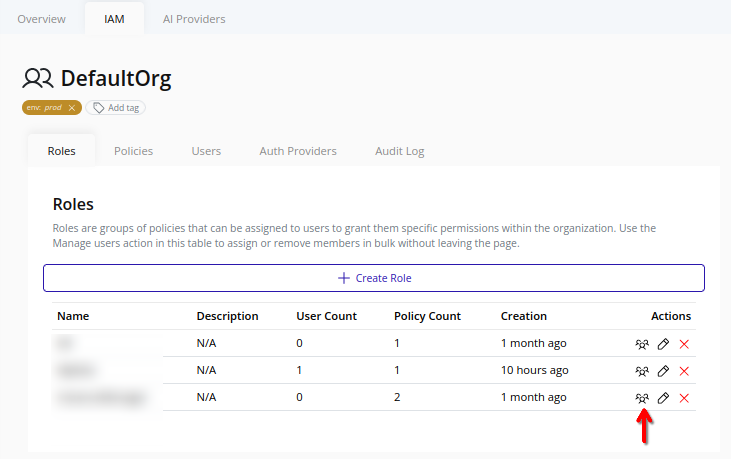

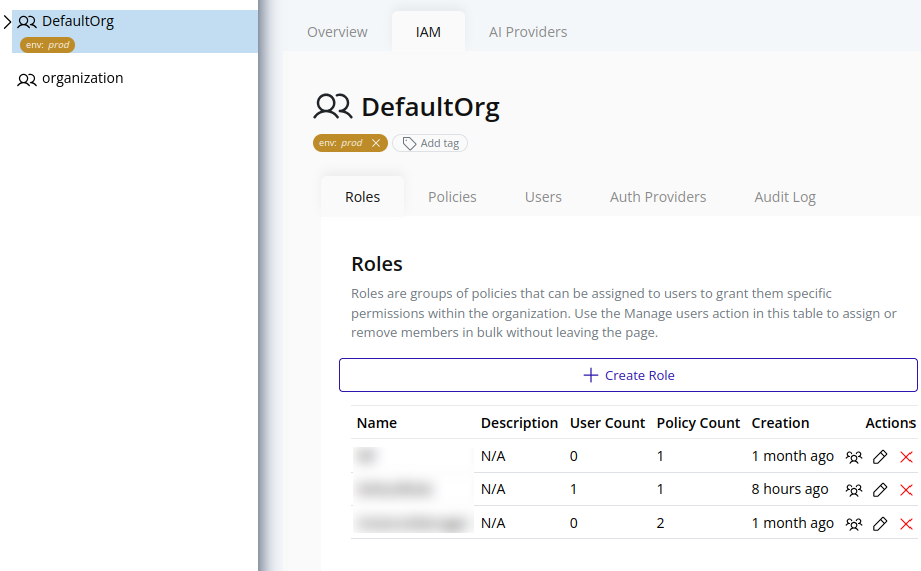

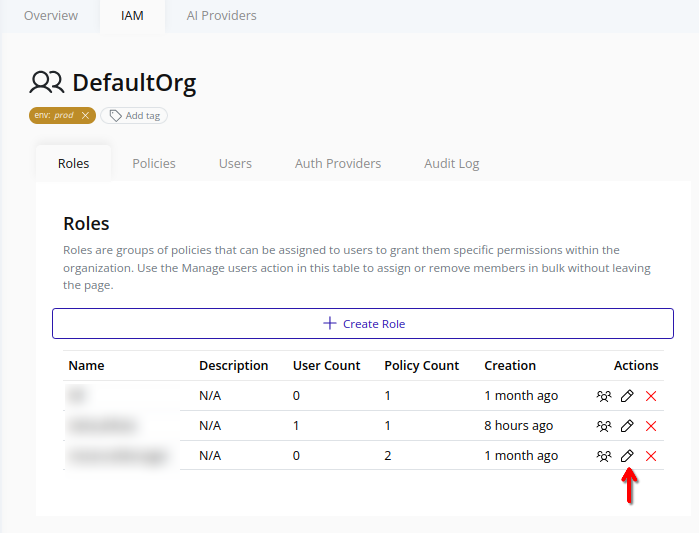

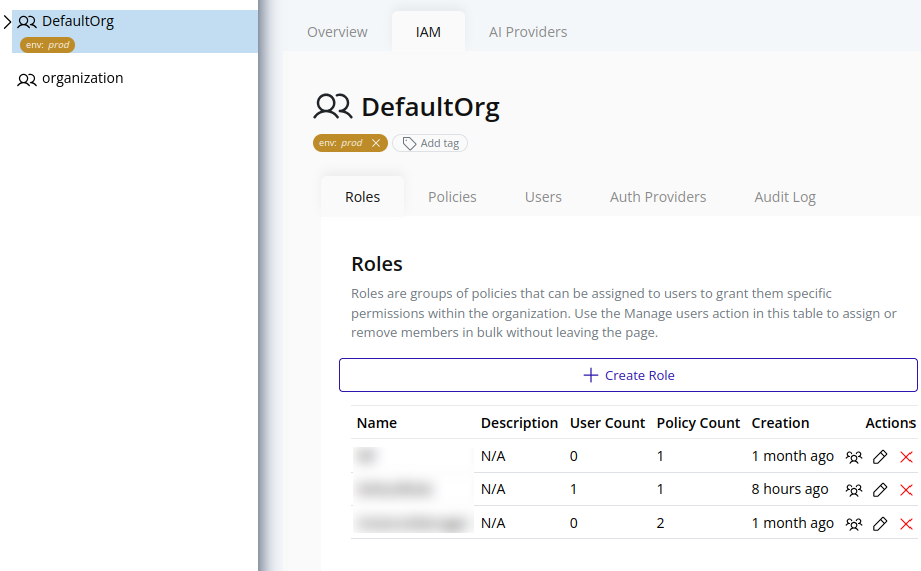

- Select the organization in the resource tree and view the page on the right. Click on the IAM tab in the right pane. Then, select the Roles sub-tab:

- Click the Create Role button at the top:

- In the Details step, provide basic information:

- In the Policies step, select one or more existing policies to attach to the role:

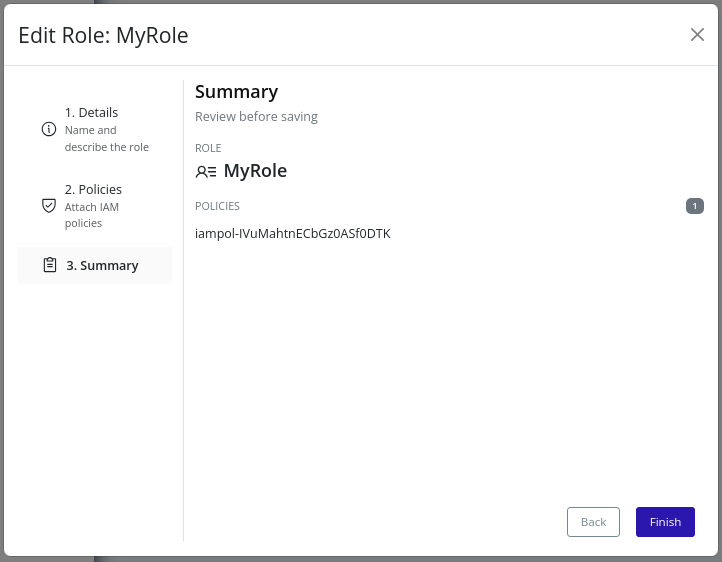

- Review the summary and click Finish to finalize:

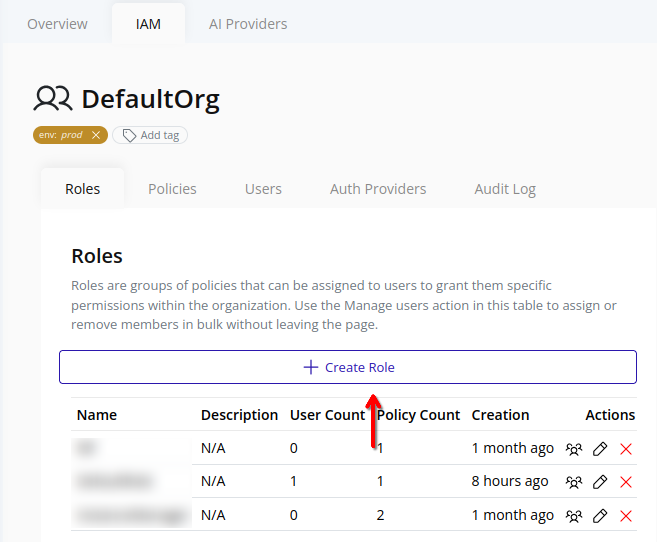

Assign Users to a Role

Important

Role assignment triggers an organization policy update. Changes take effect immediately and may impact user access.

- Select the organization in the resource tree and view the page on the right. Click on the IAM tab in the right pane. Then, select the Roles sub-tab:

- Click the Assign Users button beside the target role:

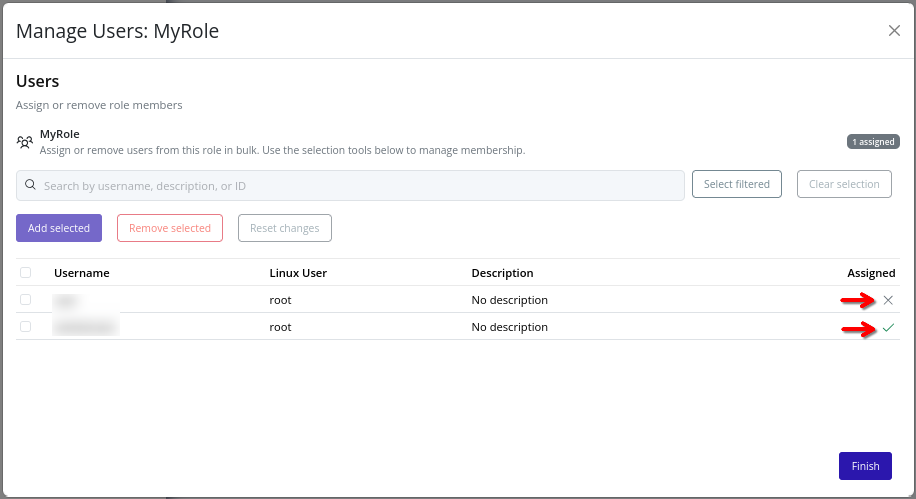

- In the dialog, all users in the organization are listed.

- Select or deselect users, then click Add selected or Remove selected to modify role assignments. The user’s role membership is reflected in the Assigned column. To revert to the original state, click Reset changes:

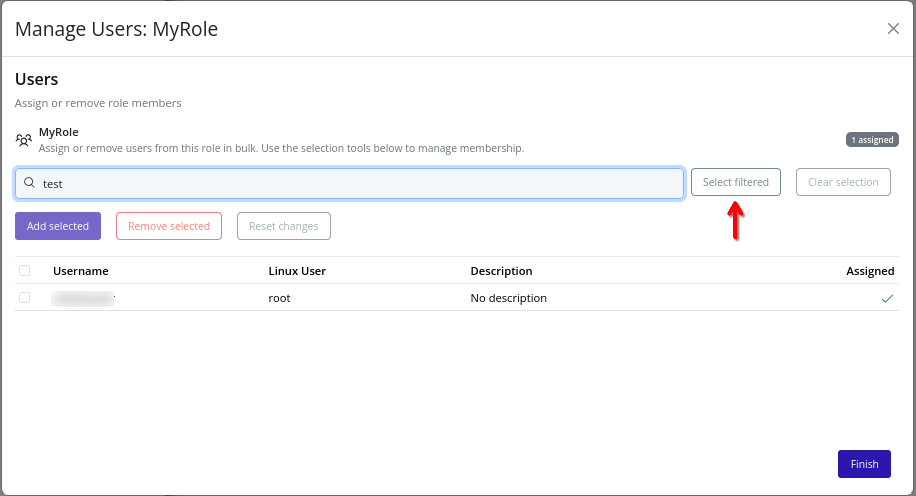

- Search for users (by username, description, or ID) using the search bar at the top of the dialog. Filtered users can be selected by clicking Select filtered:

- To save changes, click Finish. The role’s user assignments are updated accordingly.

Note

The root user is excluded from role assignments, as it has unrestricted access.

Edit Role

Important

Role editing triggers an organization policy update. Changes take effect immediately and may impact user access.

- Select the organization in the resource tree and view the page on the right. Click on the IAM tab in the right pane. Then, select the Roles sub-tab:

- Click the Edit button beside the target role:

- Modify the fields as described in the Create Role section.

- Review the summary and click Finish to save changes:

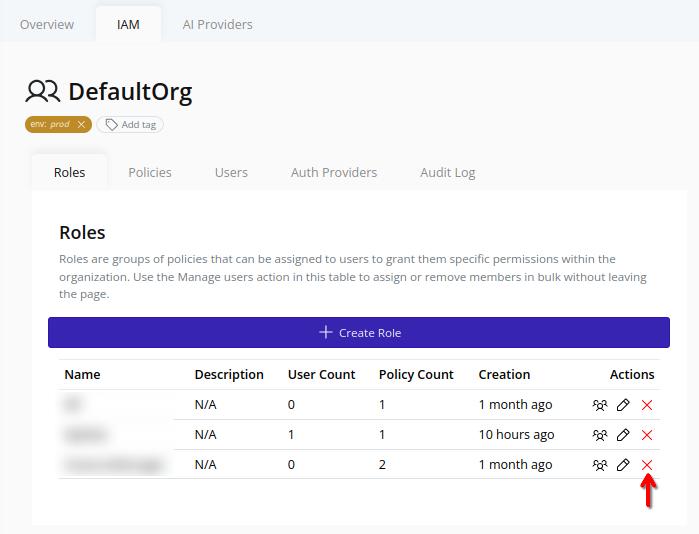

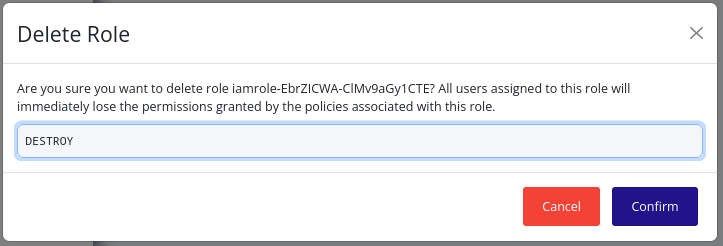

Delete Role

Important

Role deletion triggers an organization policy update. Changes take effect immediately and may impact user access.

- Select the organization in the resource tree and view the page on the right. Click on the IAM tab in the right pane. Then, select the Roles sub-tab:

- Click the Delete button beside the target role:

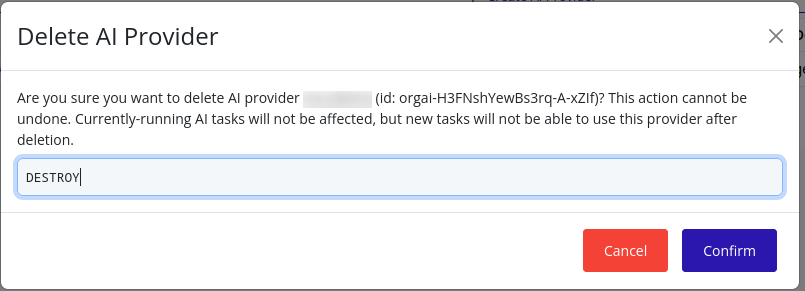

- In the confirmation dialog, type “DESTROY” and click Confirm to confirm the deletion of the role:

Policies

Policies define what actions can be performed within an organization. Each policy contains an ordered list of statements that the IAM engine evaluates during authorization.

The ID prefix for policies is iampol-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Policy IDs have the prefix

iampol-, followed by a unique identifier (e.g.,iampol-YtR8FqvL29sKjb7WxD3Zn). ↩

Model

Pextra CloudEnvironment® uses an attribute-based access control (ABAC) model for defining and enforcing permissions. Policies consist of one or more statements that specify which actions are allowed or denied on which resources, optionally refined by rules based on user or resource attributes.

Each statement contains the following fields:

| Field | Description |

|---|---|

| Action | A specific API or UI verb (for example, organization.list) or * for all actions. |

| Effect | Choose allow to grant access or deny to block it. Explicit denies always take precedence over allows. |

| Resource | Resource identifiers (such as org-*, cls-*) or * for all resources. |

| Rules | A string expression that refines the scope of the statement using ABAC conditions. Requires the IAM_ABAC_RULES feature flag. |

Important

The root user bypasses all policy evaluations and is not subject to these rules. Use the root account only for emergency access and critical operations.

Evaluation Order

- Statements are evaluated from top to bottom.

- If any statement with

denymatches, the request is denied immediately—even if an earlierallowmatched. - If no denies match, the first matching

allowgrants access. Requests with no matches are denied by default. - Root users skip evaluation entirely but policy changes are still logged for auditing.

Notes

- 1024 statements maximum per policy, though best practices recommend fewer than 25 for maintainability.

- Policies can be attached to multiple roles to streamline permission management.

- Policy changes apply instantly to all roles and users that reference them.

ABAC Rule Conditions

Note

The

IAM_ABAC_RULESfeature flag must be enabled to create policies with ABAC rules. See the Feature Gating section for details.

When creating policy statements, you can define Attribute-Based Access Control (ABAC) rules in order to further refine when the statement applies. Define conditions using simple expressions with comparison and logical operators. Check the endpoint API documentation to see which resource attributes are available.

Base Schema

The following attributes are always available for use in ABAC rules:

id: stringenv:day_of_week: number (0 = Sunday, 6 = Saturday)time_of_day: string (hh:mm:ss, UTC, 24-hour format — lexicographic comparisons are valid)

Operators And Constructs

- Comparison:

==,!=,===,!==,<,>,<=,>= - Logical:

&&,||,! - String methods:

startsWith(...),endsWith(...),includes(...) - Regular expressions: use

/pattern/.test(value) - Use parentheses and nested expressions to combine conditions

Examples

- Allow only on weekdays:

env.day_of_week >= 1 && env.day_of_week <= 5

- Allow during business hours (09:00:00–17:00:00), using lexicographic time comparison:

env.time_of_day >= "09:00:00" && env.time_of_day <= "17:00:00"

- Combine checks (during business hours on weekdays):

env.day_of_week >= 1 && env.day_of_week <= 5 && env.time_of_day >= "09:00:00" && env.time_of_day <= "17:00:00"

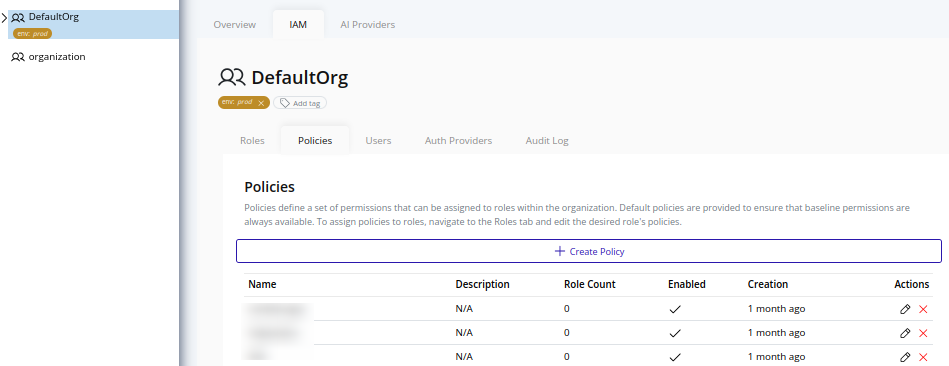

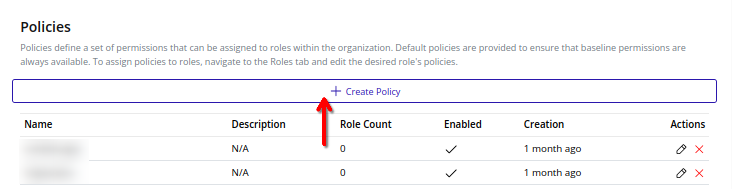

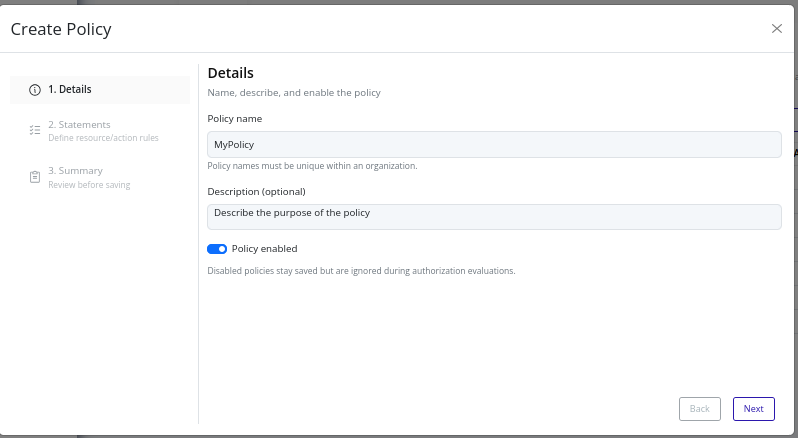

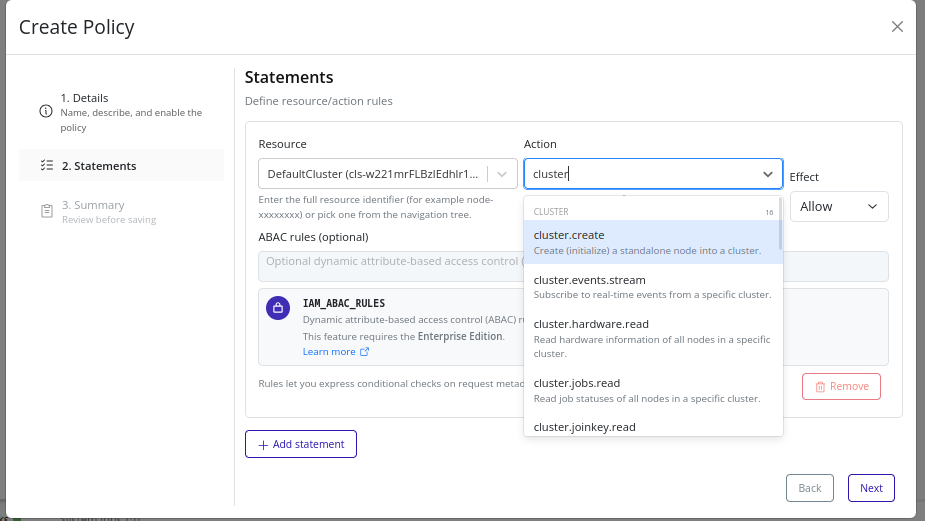

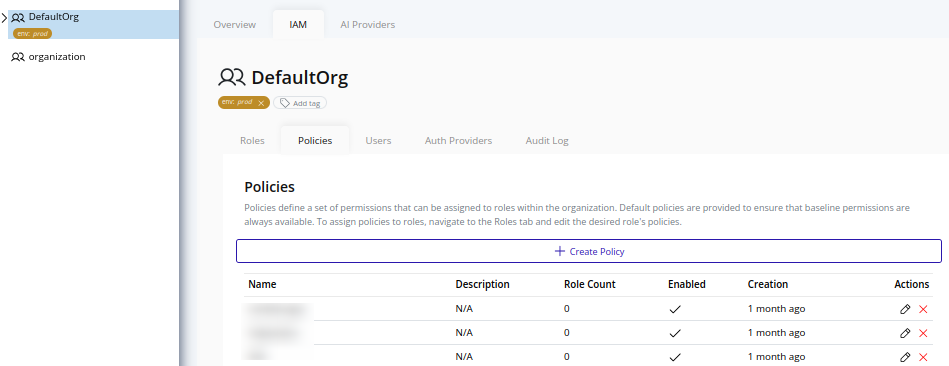

Create Policy

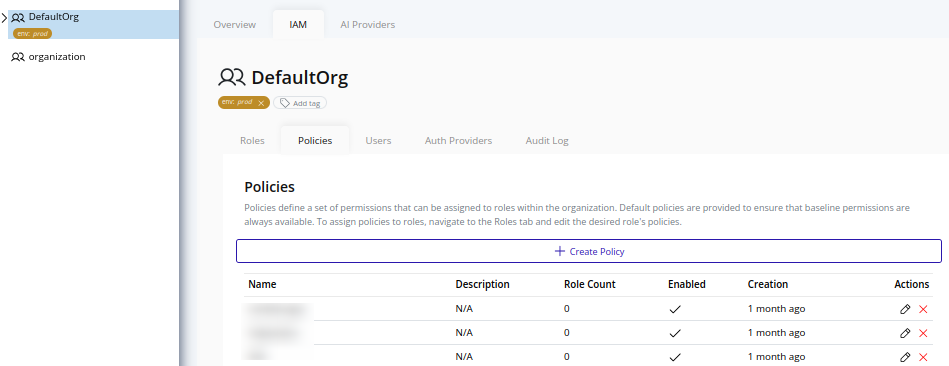

- Select the organization in the resource tree and view the page on the right. Click on the IAM tab in the right pane. Then, select the Policies sub-tab:

- Click the Create Policy button at the top:

- In the Details step, provide basic information:

- In the Statements step, define the policy statements. See the Statement Builder section below for details.

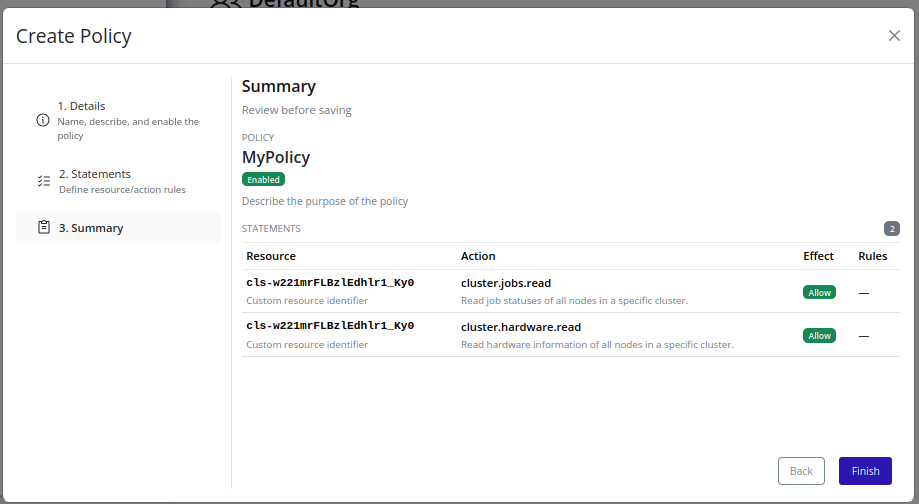

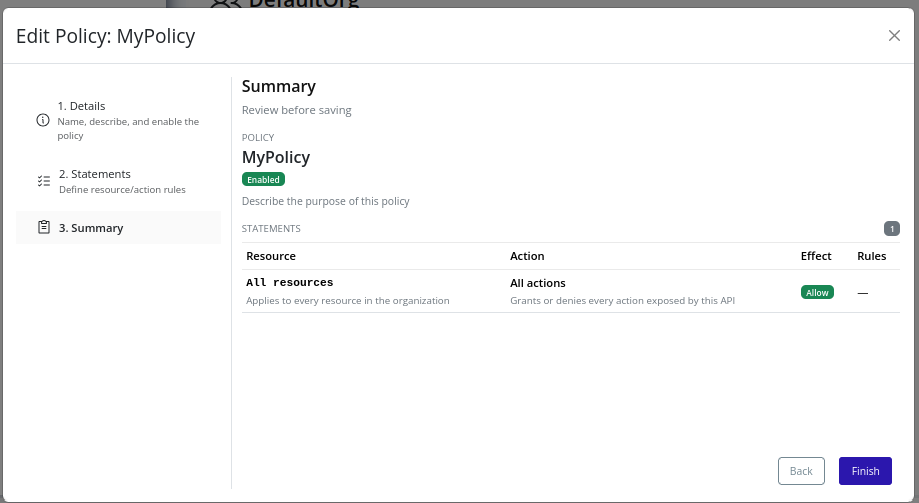

- Review the summary and click Finish to finalize:

Statement Builder

Use the statement builder to define individual policy statements. Each statement includes the following fields:

- Effect: Choose either

allowordenyto specify whether the statement grants or restricts access.denystatements take precedence overallowstatements. - Actions: Select one or more actions that the statement applies to. Use the dropdown to search and select actions.

- Resources: Specify the resources the statement applies to. You can use wildcards (

*) for broader scopes. - Conditions: (Optional) Add ABAC rules to further refine when the statement applies. See the ABAC Rules section for details.

Edit Policy

Important

Policy editing triggers an organization policy update. Changes take effect immediately and may impact user access.

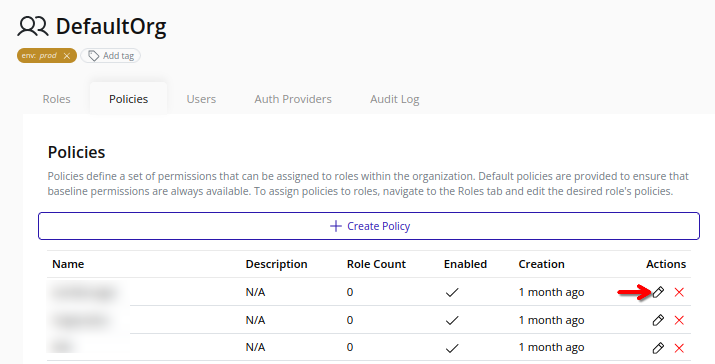

- Select the organization in the resource tree and view the page on the right. Click on the IAM tab in the right pane. Then, select the Policies sub-tab:

- Click the Edit button beside the target policy:

- Modify the fields as described in the Create Policy section.

- Review the summary and click Finish to save changes:

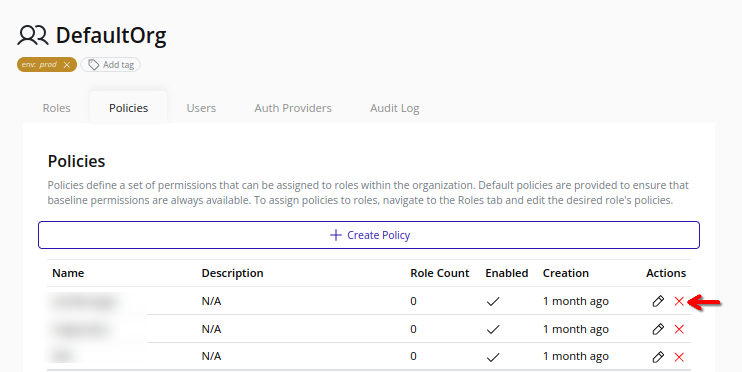

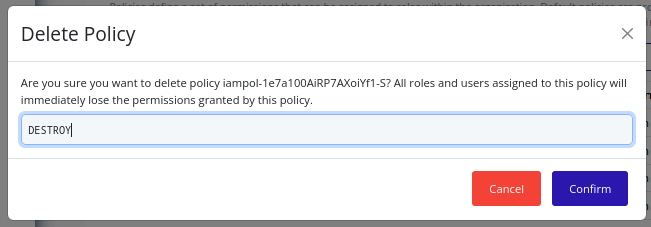

Delete Policy

Important

Policy deletion triggers an organization policy update. Changes take effect immediately and may impact user access.

-

Select the organization in the resource tree and view the page on the right. Click on the IAM tab in the right pane. Then, select the Policies sub-tab:

-

Click the Delete button beside the target policy:

-

In the confirmation dialog, type “DESTROY” and click Confirm to confirm the deletion of the policy:

Users

Users are individual accounts created within an organization to allow access to resources based on assigned roles and policies. Each user has a unique username and can be assigned one or more roles that define their permissions.

In order for the user to access the web interface, they must have at least the minimum permissions outlined in Web Interface Permissions.

The ID prefix for users is user-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. User IDs have the prefix

user-, followed by a unique identifier (e.g.,user-YtR8FqvL29sKjb7WxD3Zn). ↩

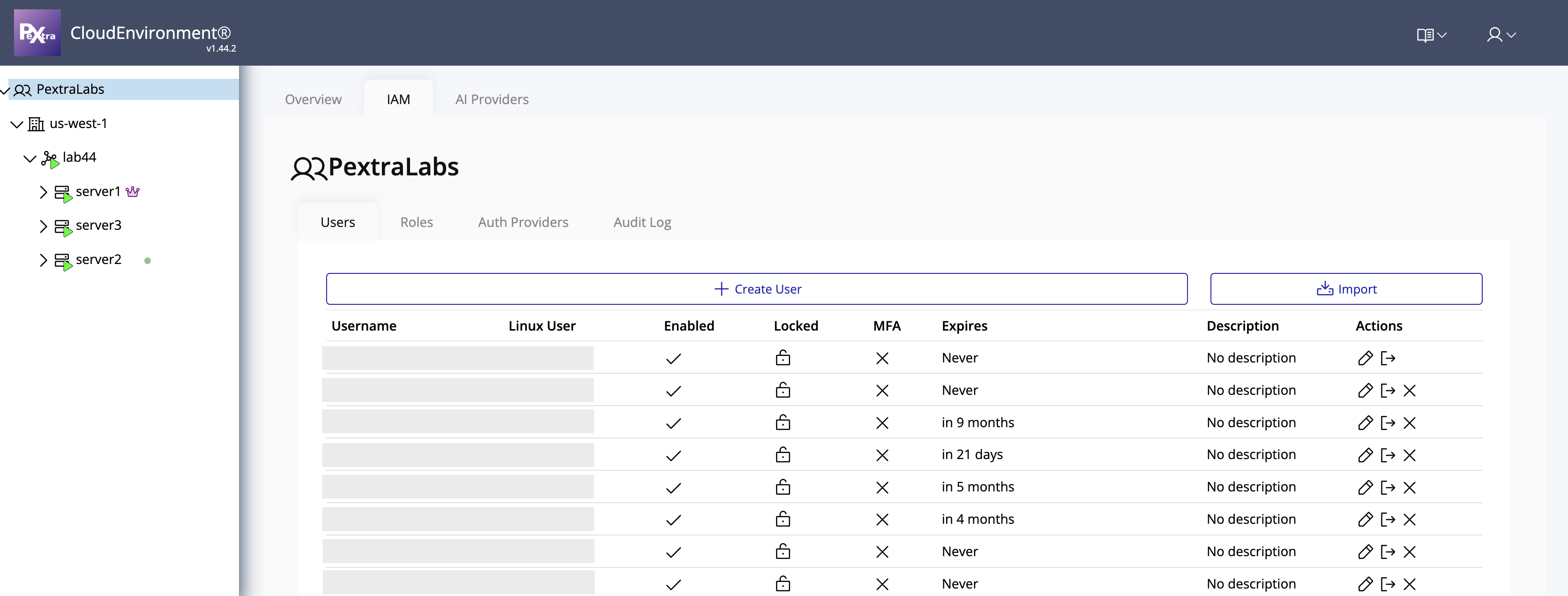

View Users

To view users within your organization:

- In the left navigation panel, click your organization name.

- Select IAM from the options.

The Users page displays a table listing all users in the organization.

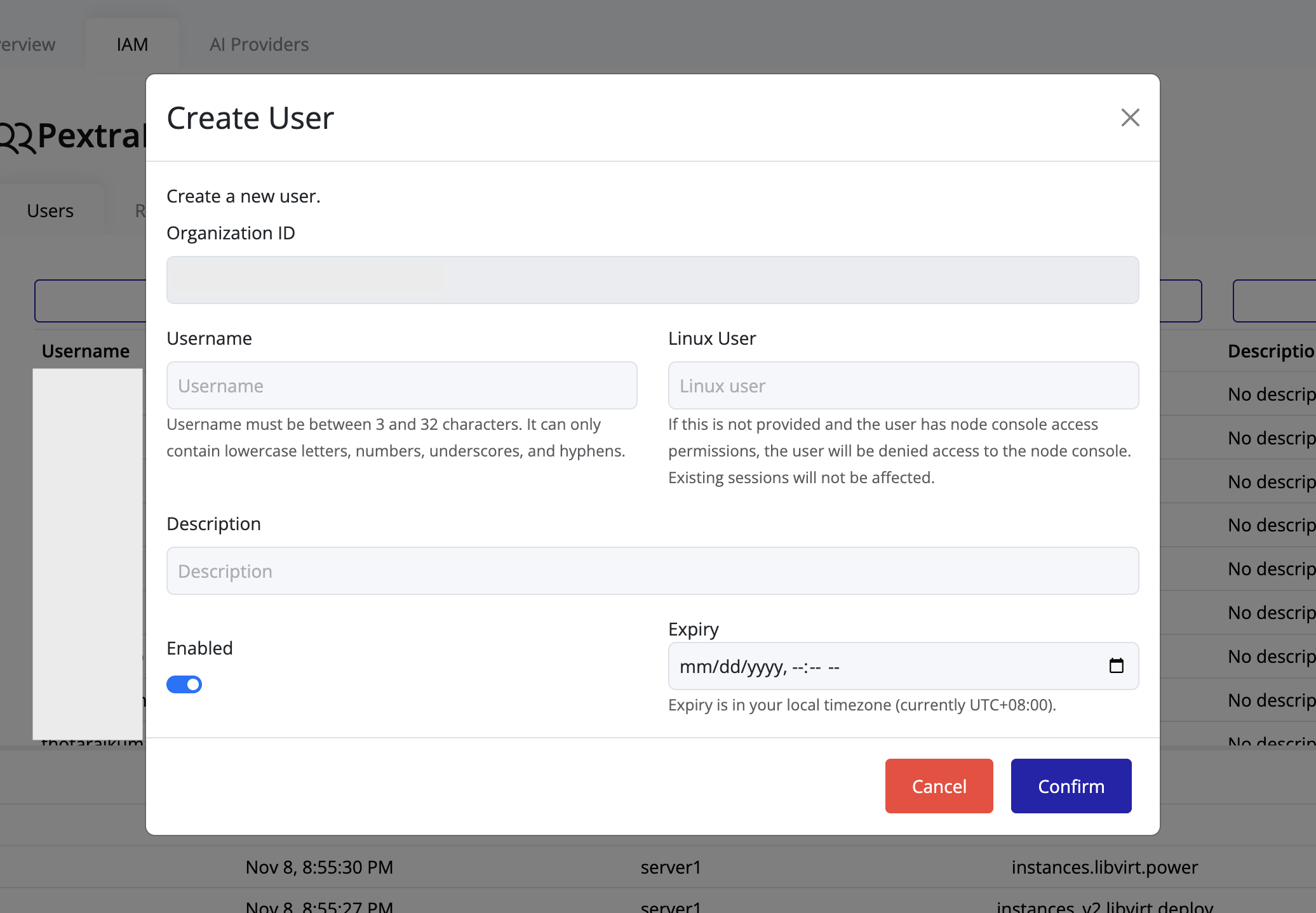

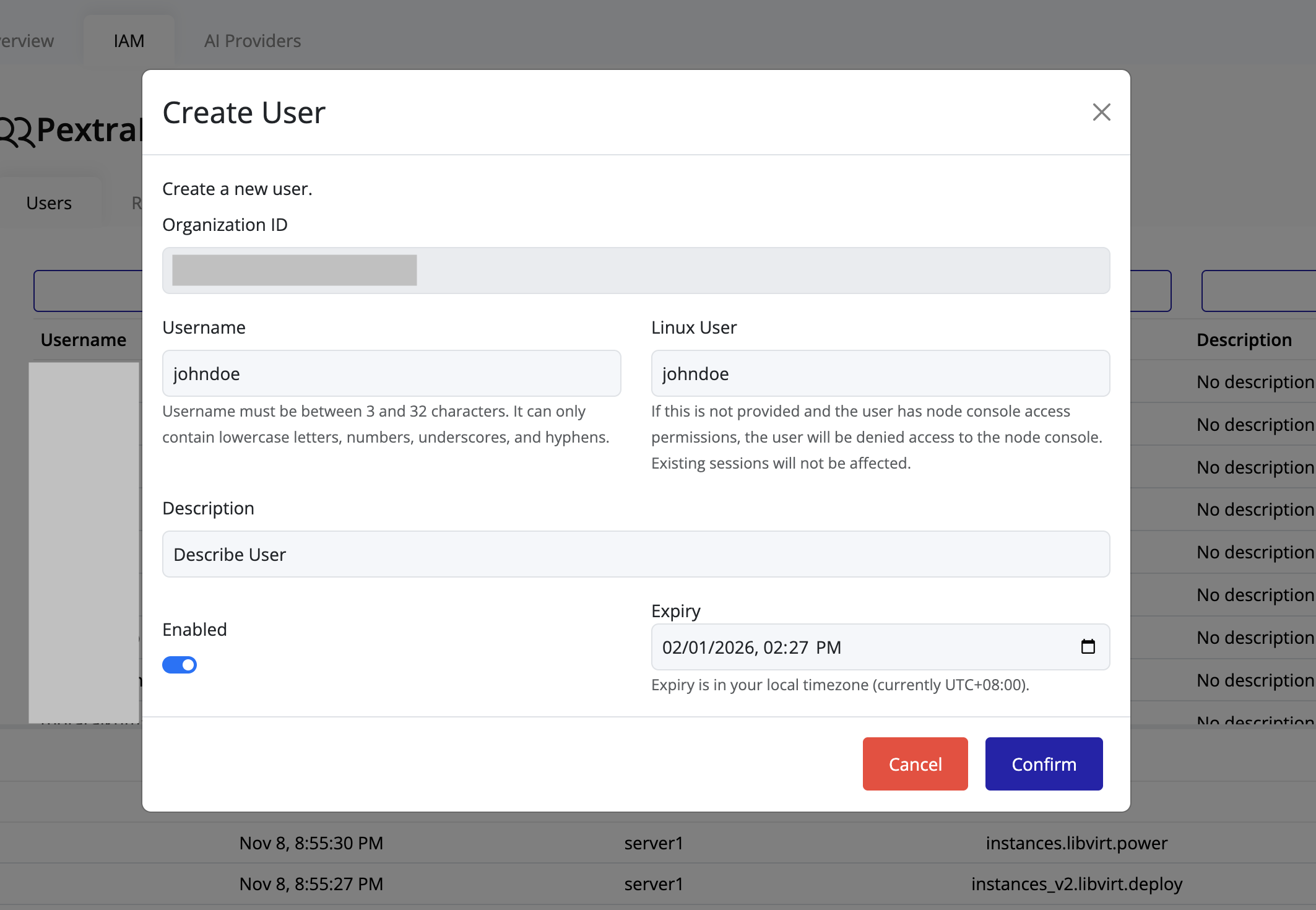

Create a User

Administrators can create new users directly from the IAM interface.

-

Navigate to IAM under your organization view.

-

Click Create User at the top of the Users page.

-

The Create User dialog will appear:

-

Fill in user details:

- Username is mandatory and must be unique within the organization.

- Linux user is optional; if provided, it determines Linux user mapping when accessing node consoles.

- Description is optional; use it to document the user’s purpose or role.

- Enable is on by default; disable it to create a user in a disabled state.

- Expiry is optional; assign an expiration date for the user if needed.

-

Click Confirm to create the user.

-

The new user will appear in the users list:

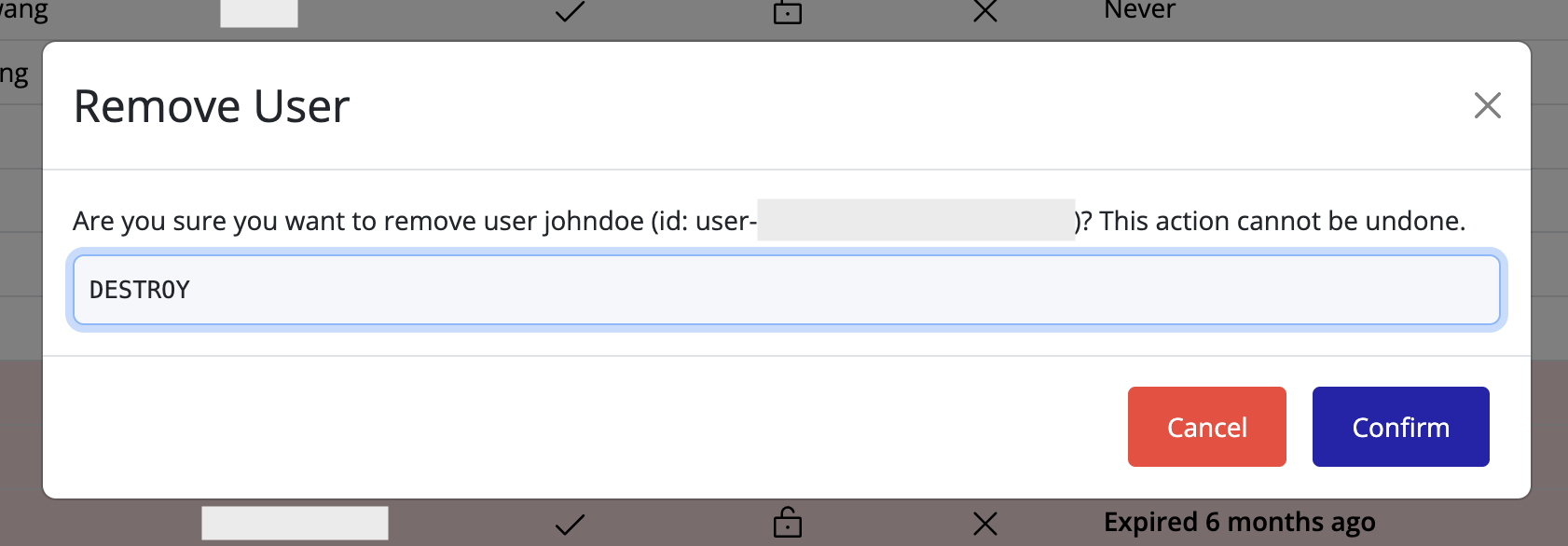

Delete a User

To remove a user from the IAM interface:

- Locate the user in the Users list.

- Click the delete icon on the user’s row.

- A confirmation dialog will appear. Type in “DESTROY” and click Confirm to confirm the deletion of the user.

Auditing and Compliance

To monitor user activities and ensure compliance, Pextra CloudEnvironment® provides an audit trail feature that logs all actions taken by users within an organization. User lockout tracking is also available to help maintain security and compliance standards.

More advanced auditing features are planned for future releases.

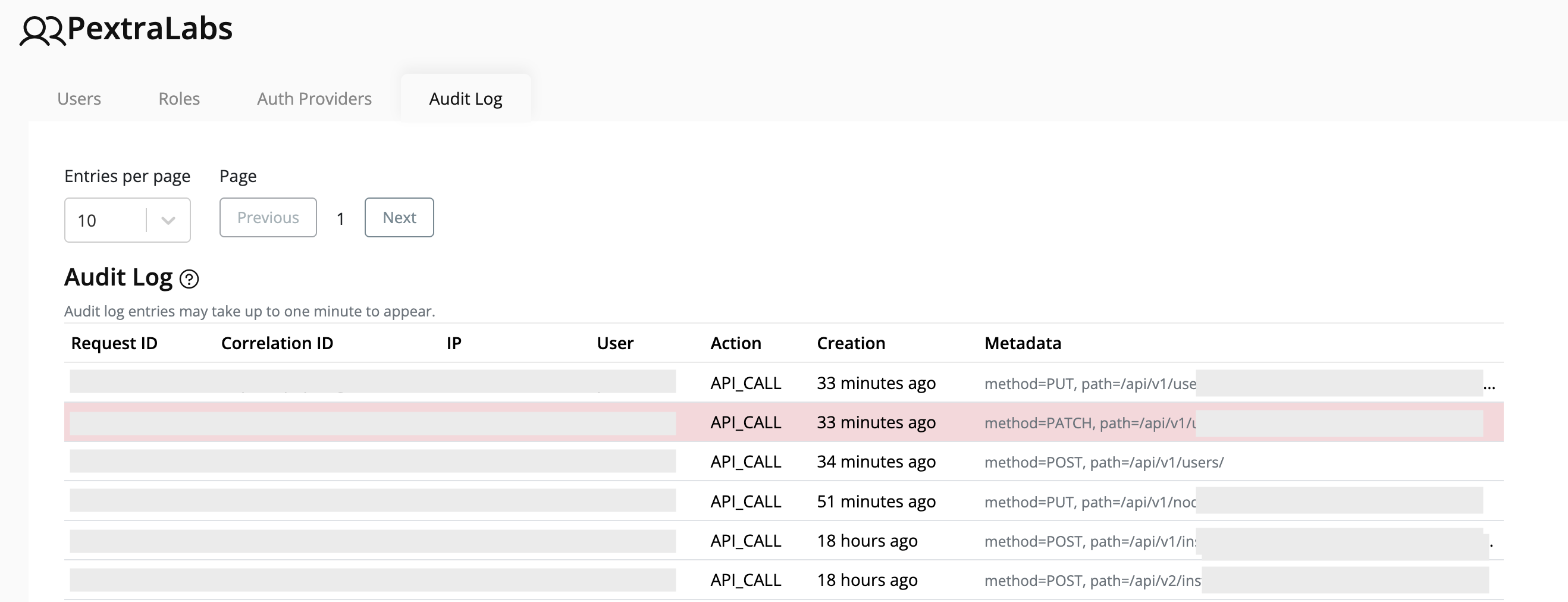

Audit Trail

To view audit logs:

- From the left navigation panel, click your Organization Name.

- On the right, click Audit Log.

- The Audit Log section displays actions performed by users, providing a full audit trail.

Note

Audit log entries may take up to one minute to appear after an action is performed.

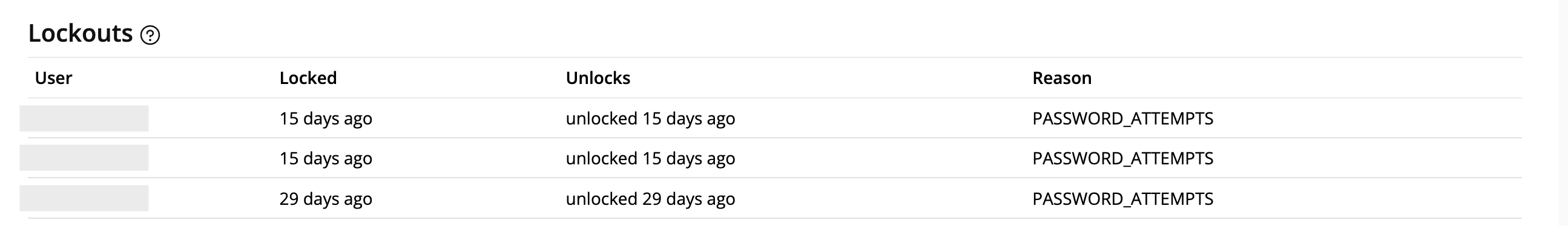

User Lockouts

After multiple failed login attempts, user accounts may be locked out to enhance security. You can monitor and review these lockouts through the user lockout log.

To view user lockouts:

- From the left navigation panel, click your Organization Name.

- On the right, click Audit.

- Scroll down to the Users Lockouts section to see details of any locked-out users.

Best Practices

Follow these guidelines to keep Identity and Access Management (IAM) secure, auditable, and maintainable within each organization.

Organization IAM Lifecycle

View sourceCore Principles

- Least Privilege: Start with view-only policies (

organization.list,organization.read) and add permissions gradually. Avoid assigning wildcard actions unless you have a dedicated break-glass role. - Separation of Duties: Keep roles focused. Create discrete roles for cluster operations, billing, and compliance tasks instead of bundling them together.

- Consistent Naming: Adopt a naming scheme such as

Team-Function(e.g.,SRE-ClusterOps). Use the role description to document escalation paths or ticket references.

Policy Hygiene

- Limit Statement Count: Keep policies under 25 statements when possible. Split large policies into topical units.

- Prefer Explicit Actions: Avoid

*unless the action set is genuinely broad. Explicit lists make audits faster. - Test Before Production: Validate new policies before attaching them to roles. Use a staging organization if available.

Role Management

- Rotation: Review role memberships quarterly. Remove users who changed teams or no longer need access.

- Temporary Access: For short-term tasks, create time-bound roles or apply expiration dates to the assigned users.

User Lifecycle

- Strong Authentication: Use strong passwords and consider MFA.

- Monitor Activity: Feed Audit Trail logs into your SIEM for anomaly detection.

- Offboarding: Immediately disable or delete users who leave the organization. Follow your internal data retention policies.

- Disable vs. Delete: Disable users when access is temporarily paused; delete only when you are sure data retention requirements are met.

Incident Response

- Use the root account only to unblock incidents.

- Document every root login in your incident ticket.

- After remediation, rotate any affected credentials and export updated policies for review.

Change Management Checklist

- Create or update a policy in staging first.

- Capture an export or screenshot prior to editing roles/policies.

- Communicate the change to impacted teams (email, chat, ticket comment).

- Apply the change in production during a maintenance window if it affects many users.

- Monitor audit logs for at least one hour after the change.

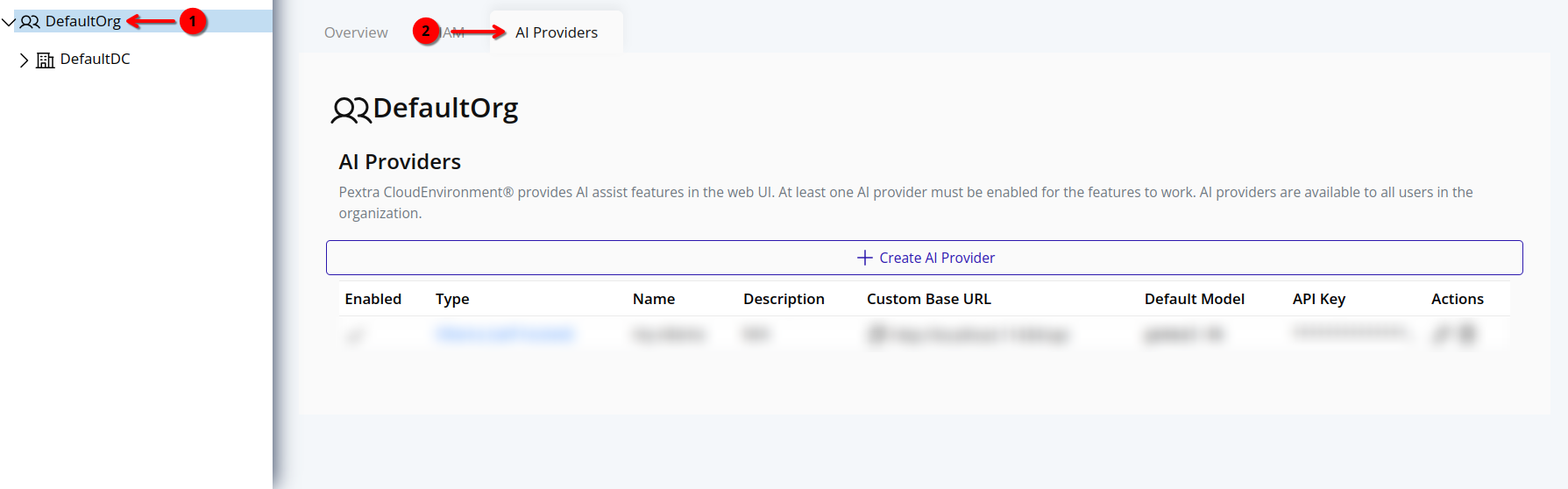

AI Providers

AI providers are organization-wide connections to cloud or self-hosted AI services. These providers power various AI features, enabling users to use natural language to interact with the Pextra CloudEnvironment® web interface.

At least one AI provider must be configured and enabled for AI features to function. If no AI providers are configured, AI features will not be available in the web interface. For a list of supported AI providers, see the Supported AI Providers section.

The ID prefix for AI providers is orgai-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. AI providers will have the prefix

orgai-, followed by a unique identifier (e.g.,orgai-qthm_iLrflJ_DtSS1l4Gx). ↩

Supported AI Providers

The following AI providers are supported in Pextra CloudEnvironment®:

| Name | ID | Cloud-Hosted |

|---|---|---|

| OpenAI | openai | ✅ |

| Anthropic | anthropic | ✅ |

google | ✅ | |

| xAI | xai | ✅ |

| Mistral | mistral | ✅ |

| DeepInfra | deepinfra | ✅ |

| DeepSeek | deepseek | ✅ |

| Cerebras | cerebras | ✅ |

| Groq | groq | ✅ |

| Perplexity | perplexity | ✅ |

| Cohere | cohere | ✅ |

| Ollama | ollama | ❌ (self-hosted) |

| LM Studio | lmstudio | ❌ (self-hosted) |

OpenAI-Compatible Providers

For AI providers that are OpenAI-compatible but are not explicitly listed above, use the openai provider type and configure a custom base URL. For more information, refer to the Add AI Provider section.

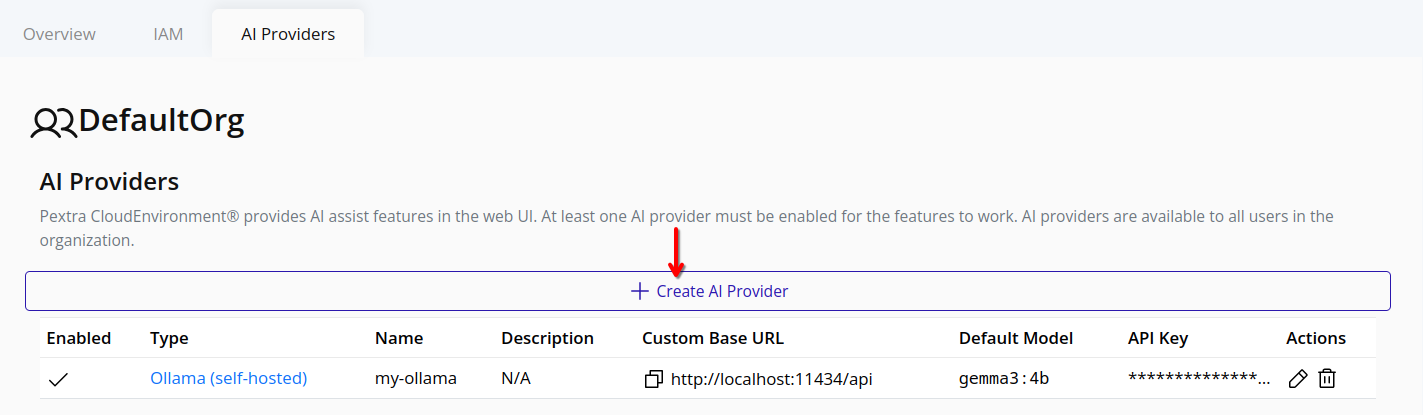

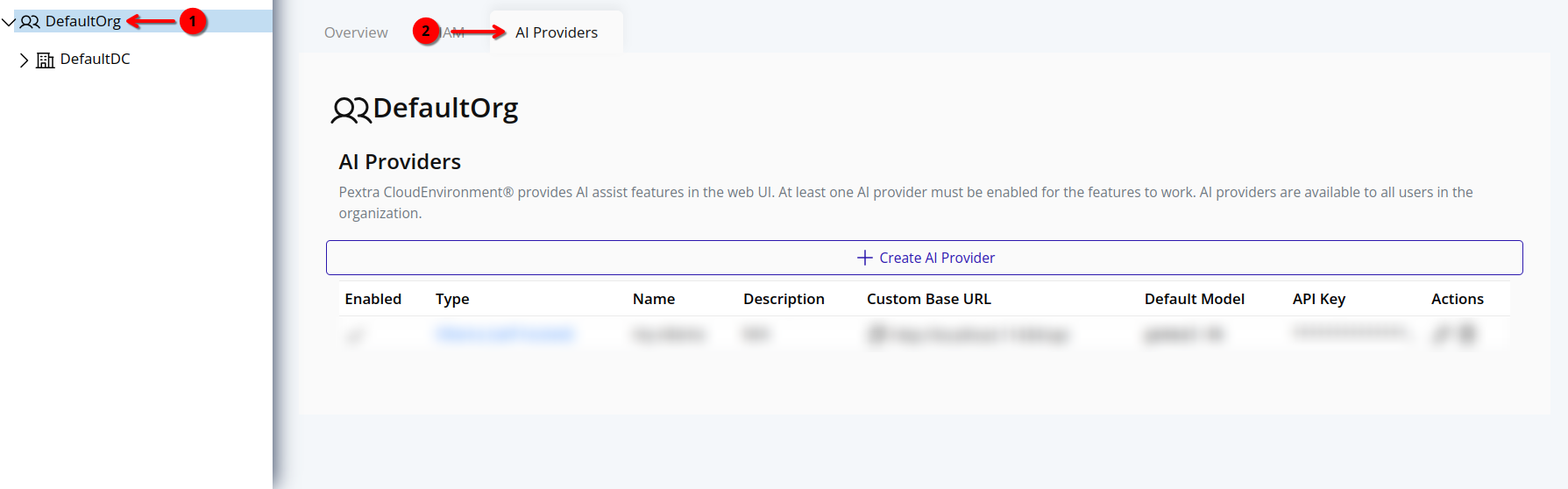

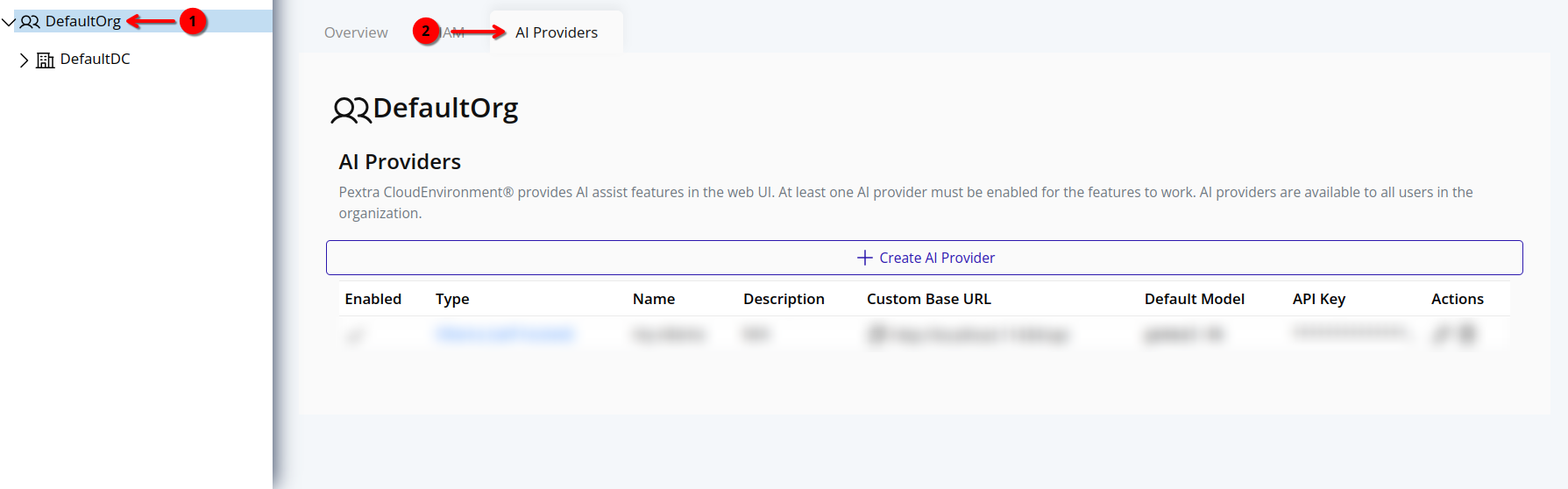

List AI Providers

List AI providers to ensure that Pextra CloudEnvironment® AI features are properly configured and available for use.

Web Interface

- Select the organization in the resource tree and view the page on the right. Click on the AI Providers tab in the right pane. The AI providers will be listed:

Note

For security reasons, the API keys for AI providers are not displayed in the web interface. API keys cannot be retrieved once set. Store your API keys securely.

To edit properties of an AI provider, refer to the Edit AI Provider section.

Add AI Provider

Add an AI provider to your organization to enable AI features in the Pextra CloudEnvironment® web interface. At least one AI provider must be configured and enabled for AI features to function. If no AI providers are configured, the AI features will not be available in the web interface.

Note

For security reasons, the API keys for AI providers are not displayed in the web interface. API keys cannot be retrieved once set. Store your API keys securely.

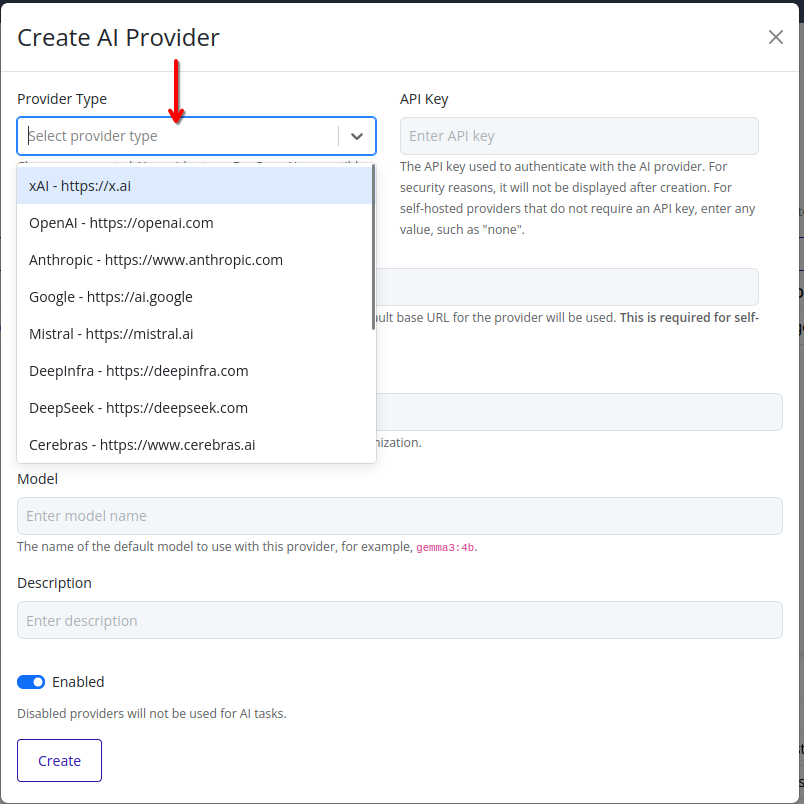

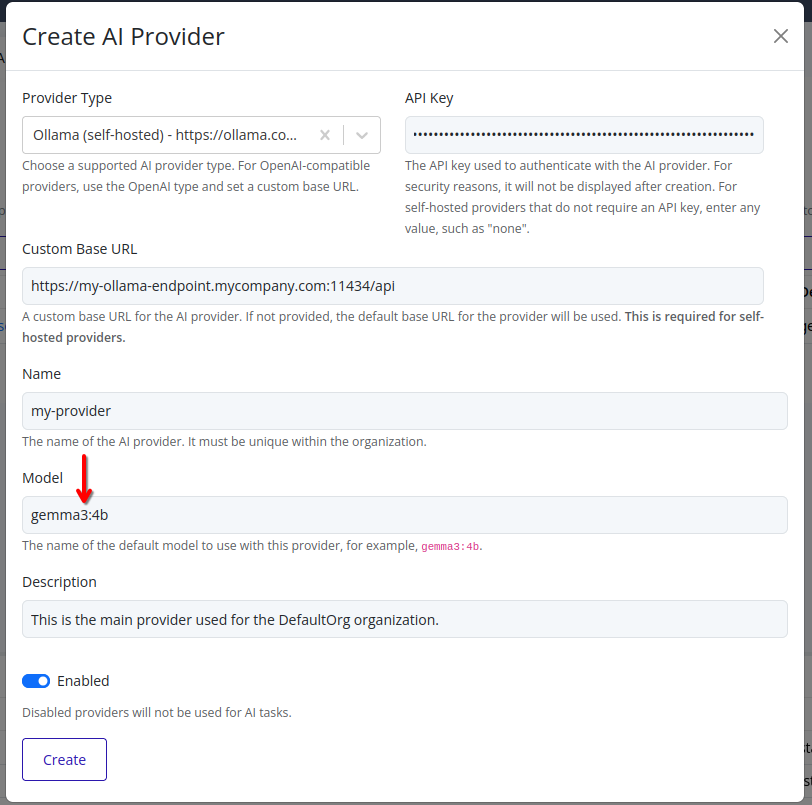

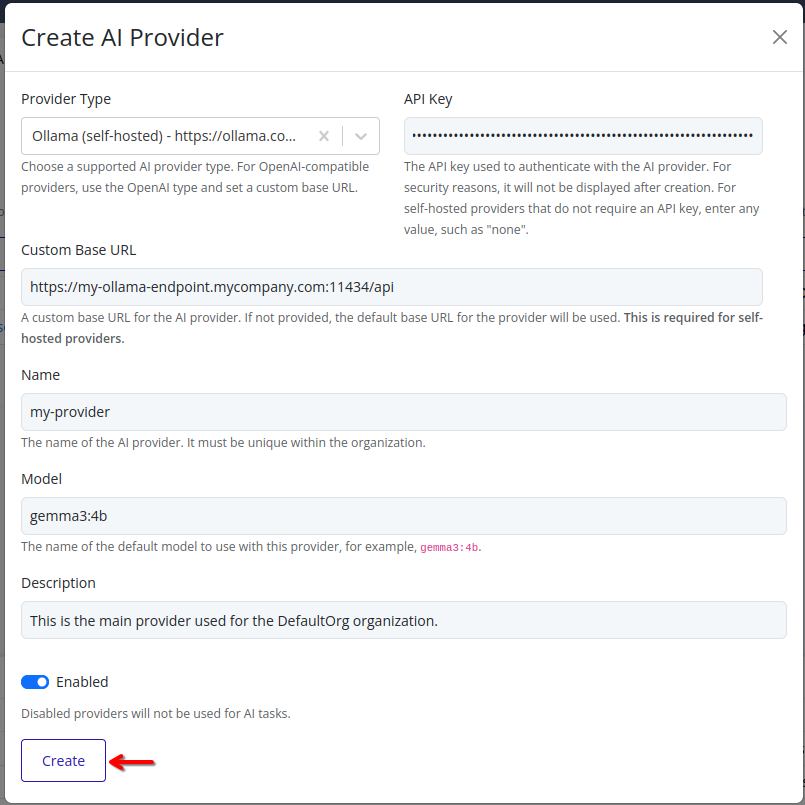

Web Interface

-

Select the organization in the resource tree and view the page on the right. Click on the AI Providers tab in the right pane.

-

Click the Add AI Provider button.

-

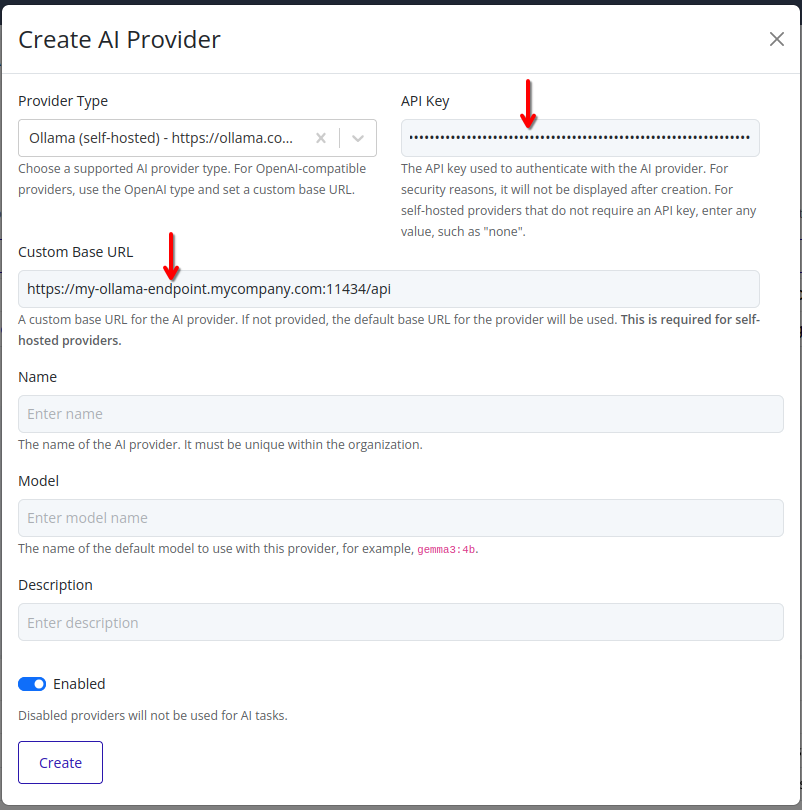

Choose the AI provider type from the dropdown list. A list of supported AI providers is available in the Supported AI Providers section.

-

Enter the API key and custom base URL (if applicable) for the selected AI provider.

Important

When using a self-hosted AI provider (such as

ollamaorlmstudio), a custom base URL must be specified. For cloud-hosted providers, the base URL is pre-configured and does not need to be changed.

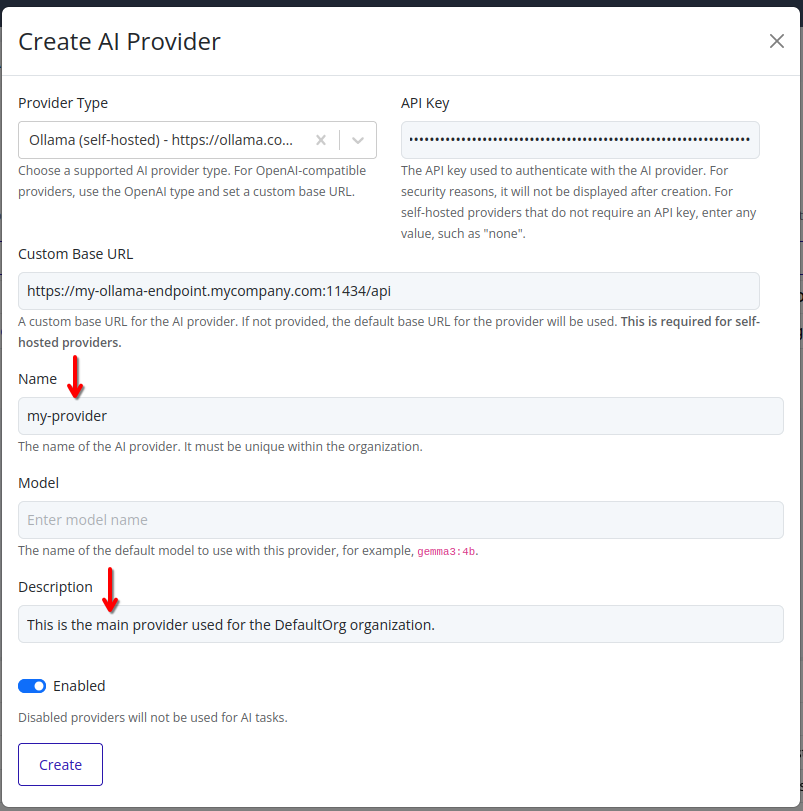

-

Enter a name for the AI provider, and an optional description. Disable the provider if you do not want it to be available for use immediately.

-

Enter the name of the model to use with this provider. This model will be used for all AI features unless overridden in specific configurations.

-

Click Create to add the AI provider to your organization. The new AI provider will be listed on the AI Providers page.

Adding OpenAI-Compatible Providers

For AI providers that are OpenAI-compatible but are not explicitly listed above, use the openai provider type and configure a custom base URL. This allows you to connect to any service that implements the OpenAI API specification.

When configuring an OpenAI-compatible provider:

- Select

openaias the provider type - Set the custom base URL to point to your provider’s API endpoint

- Use the appropriate API key for your chosen provider

This approach works with many third-party AI services and self-hosted solutions that implement OpenAI-compatible APIs.

Edit AI Provider

Note

For security reasons, the API keys for AI providers are not displayed in the web interface. API keys cannot be retrieved once set. Store your API keys securely.

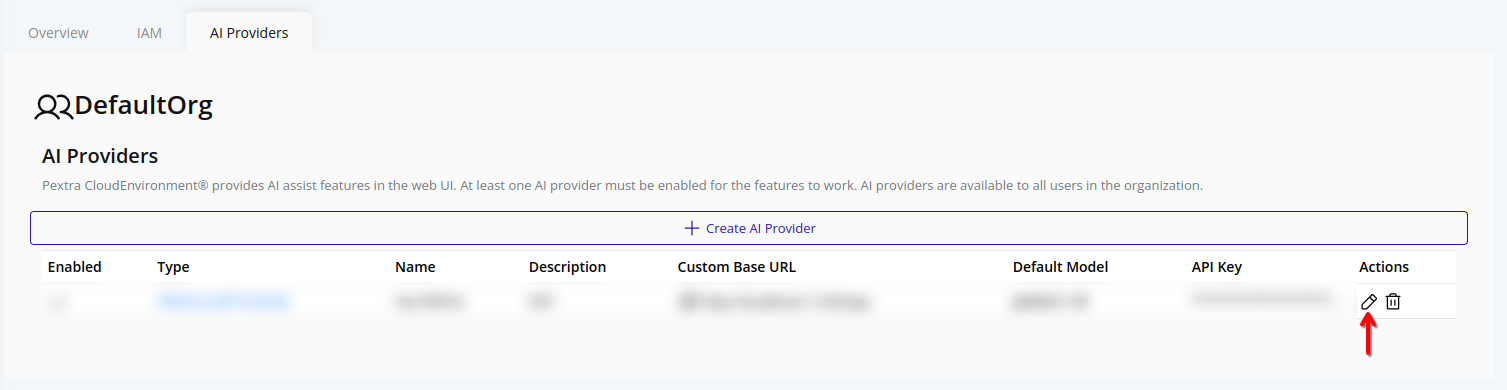

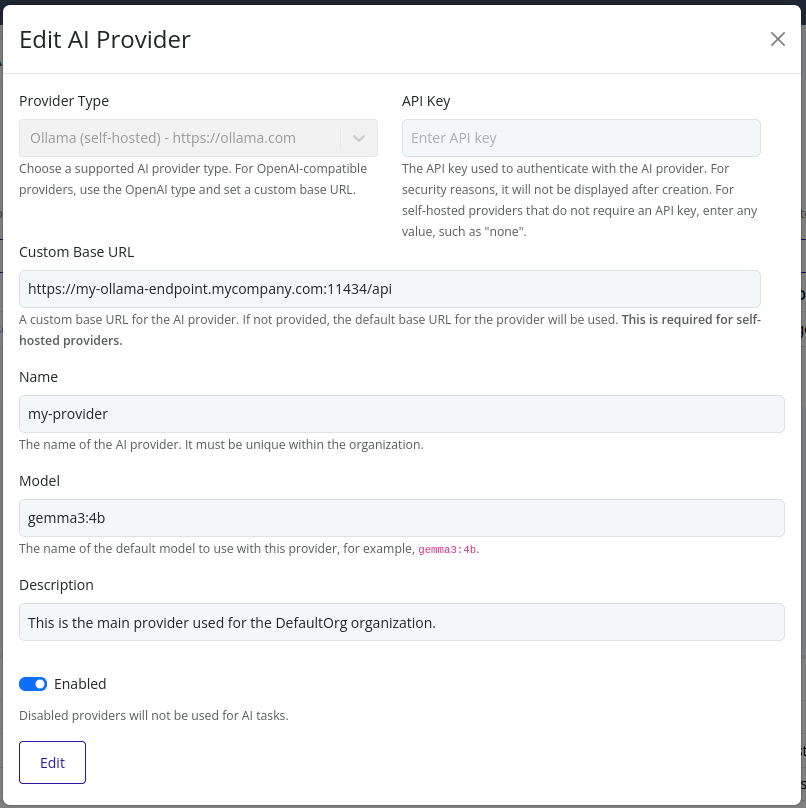

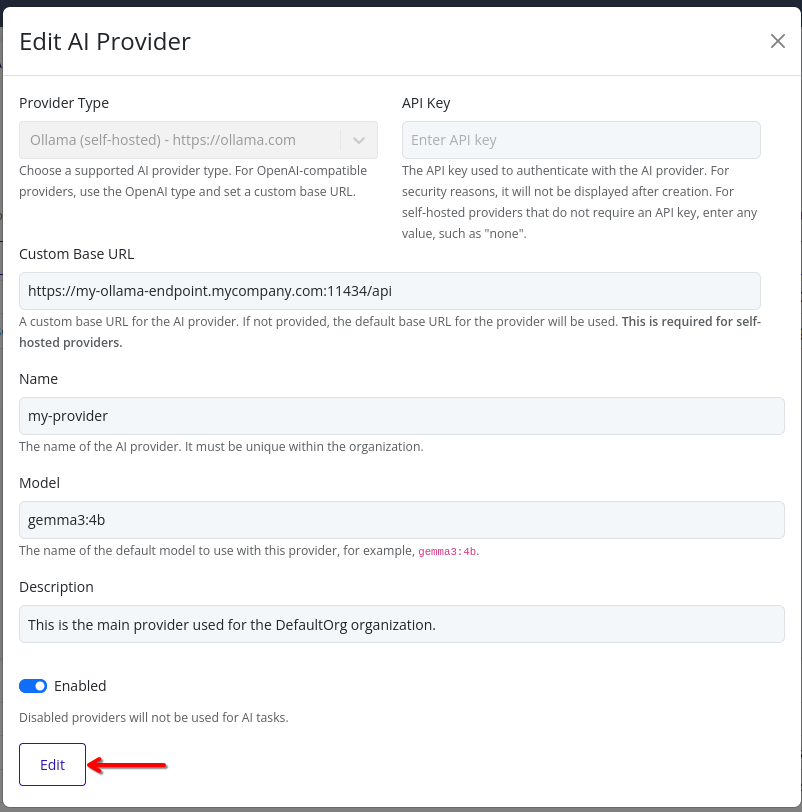

Web Interface

-

Select the organization in the resource tree and view the page on the right. Click on the AI Providers tab in the right pane. The AI providers will be listed.

-

Click the pencil icon next to the AI provider you want to edit.

-

Update any fields as needed.

Note

The API key field will be empty for security reasons. If you need to change the API key, you must enter the new key in this field. The previous key will not be displayed.

- Click Edit to save your changes. The AI provider will be updated with the new configuration.

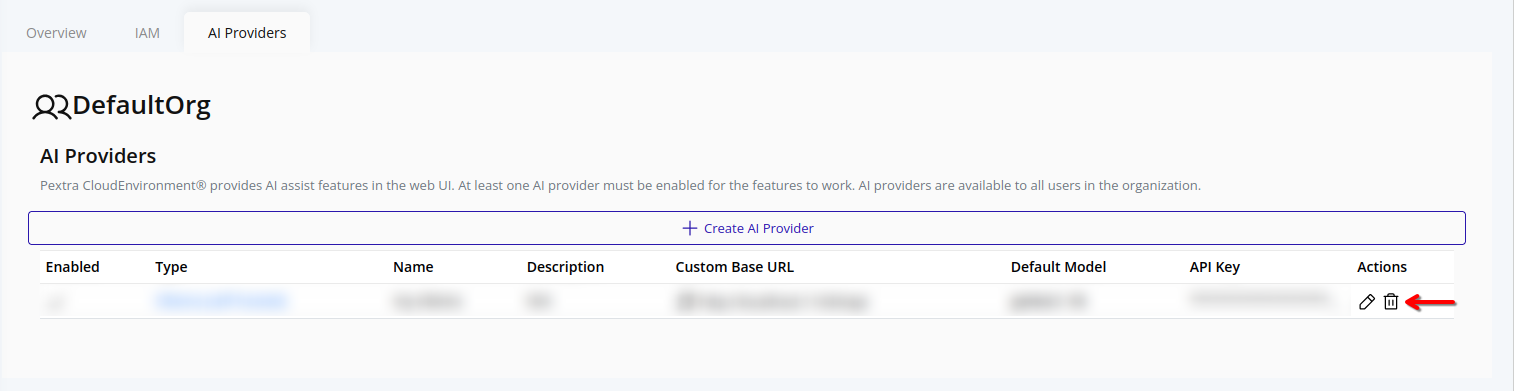

Delete AI Provider

Warning

If you delete the last AI provider in your organization, AI features will no longer be available in the web interface. At least one AI provider must be configured and enabled for AI features to function.

Web Interface

-

Select the organization in the resource tree and view the page on the right. Click on the AI Providers tab in the right pane. The AI providers will be listed:

-

Click the trash can icon next to the AI provider you want to delete:

-

A confirmation dialog will appear. Type in “DESTROY” and click Confirm to confirm the deletion of the AI provider:

Network Management

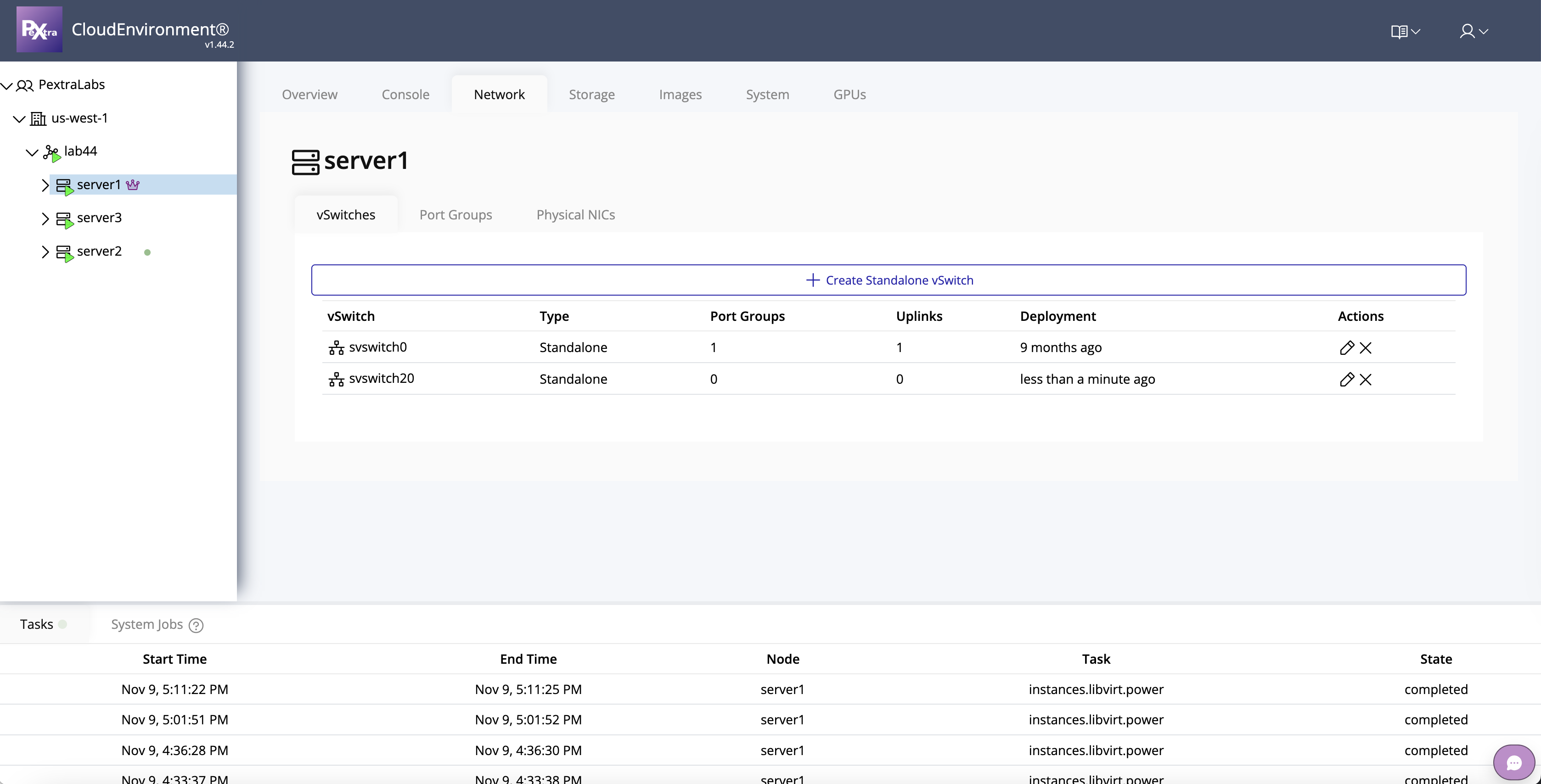

Networking in Pextra CloudEnvironment® is configured at the node (server) level. Each node has physical NICs that can be virtualized to create virtual networks for your instances.

To set up networking:

-

Click on the node in the left navigation panel.

-

On the right, select the Network tab.

From this tab, you can manage:

- vSwitches – Create and configure virtual switches to connect virtual machines.

- Port Groups – Define network segments and VLANs for instance traffic.

- NICs – Assign physical NICs to virtual switches or manage virtual NICs.

Tip

Plan your network layout carefully, including VLANs and port groups, before assigning NICs to virtual switches. This ensures proper isolation and connectivity between instances.

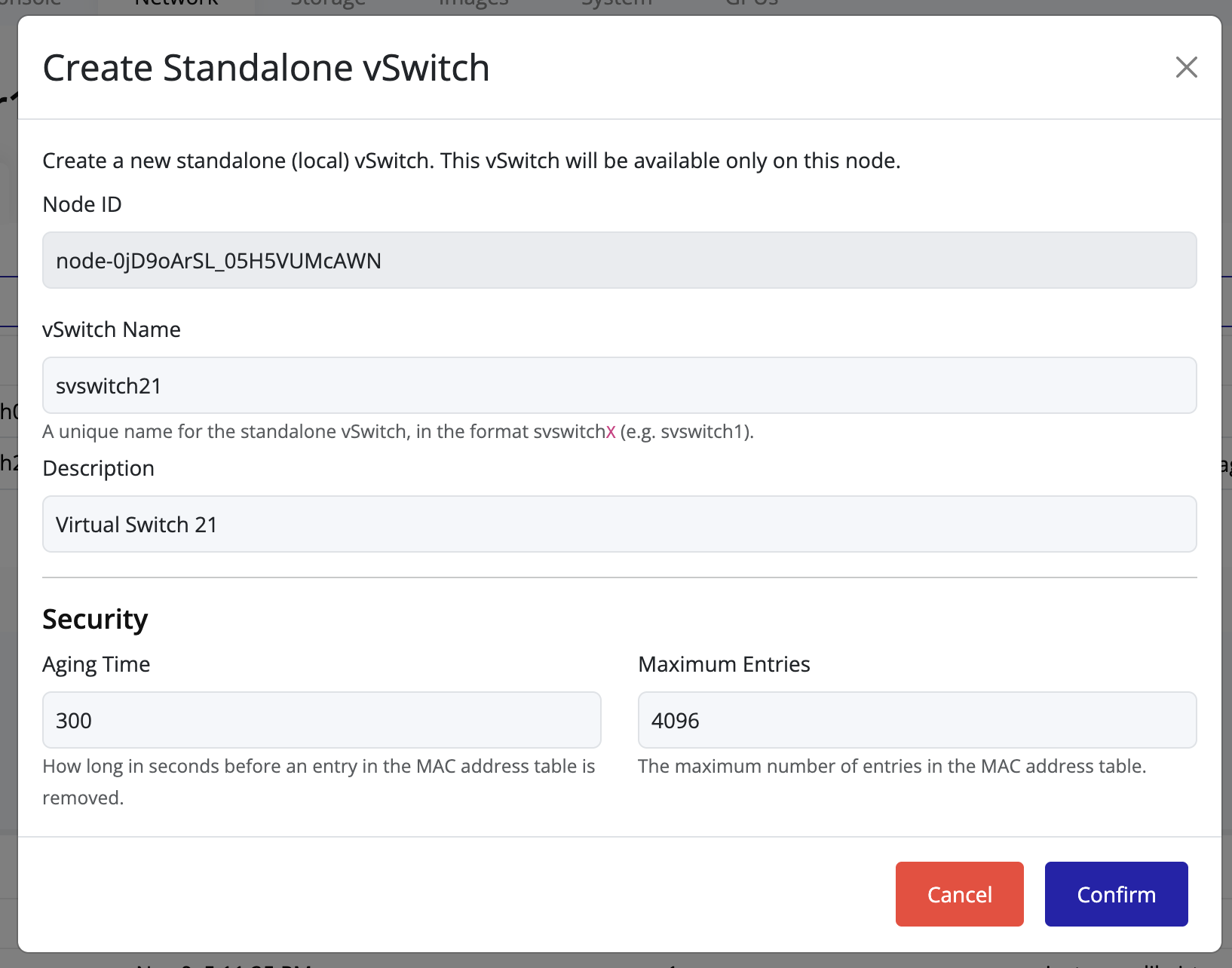

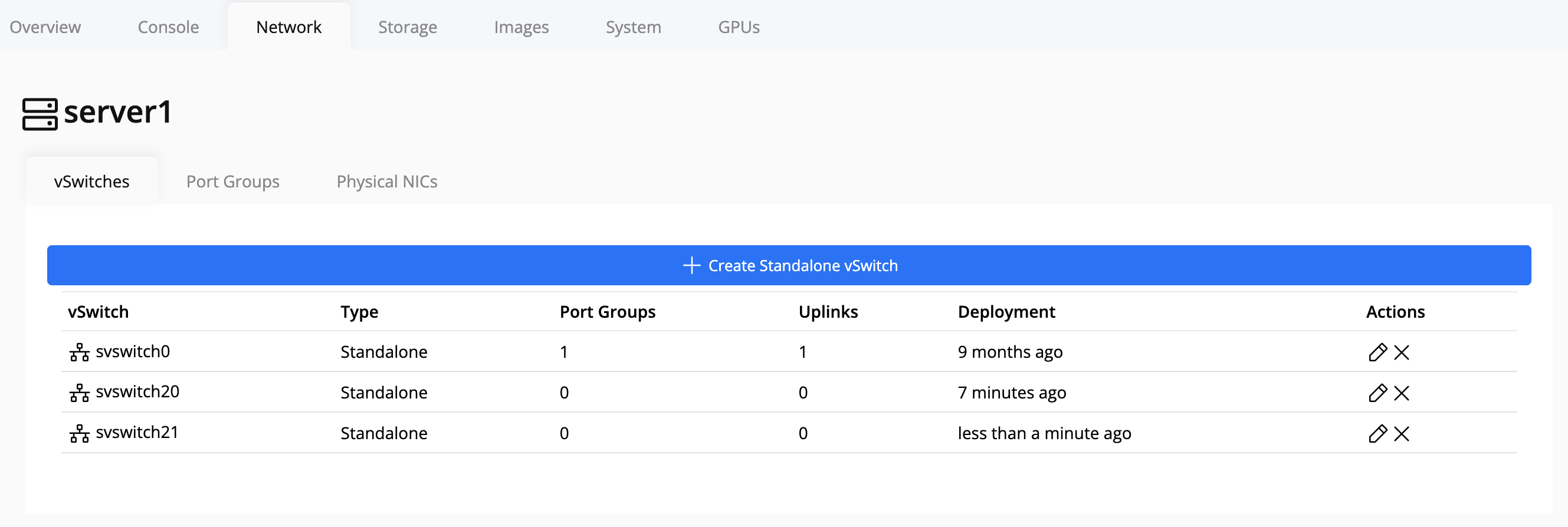

vSwitch Create

Creating a vSwitch

A vSwitch (virtual switch) connects virtual machines and network interfaces within a node. Each vSwitch acts as a bridge between virtual and physical networks.

To create a new vSwitch:

-

Click on the node in the left navigation panel.

-

Select the Network tab on the right.

-

Click on vSwitches.

-

Click Create vSwitch.

-

Enter a name for the vSwitch. The name must follow the required format:

Here, svswitch is the prefix and X is a positive integer.

Example: svswitch10, svswitch21.

-

Complete the configuration and click Create.

-

After creation, the new vSwitch will appear in the vSwitch list.

Tip

Use a consistent naming convention (e.g.,

svswitch10,svswitch20, etc.) to easily identify network mappings across multiple nodes.

Warning

Ensure the vSwitch name is unique cluster-wide to prevent conflicts.

The vSwitch will not be created if a name conflict is detected within the cluster.

vSwitch Management

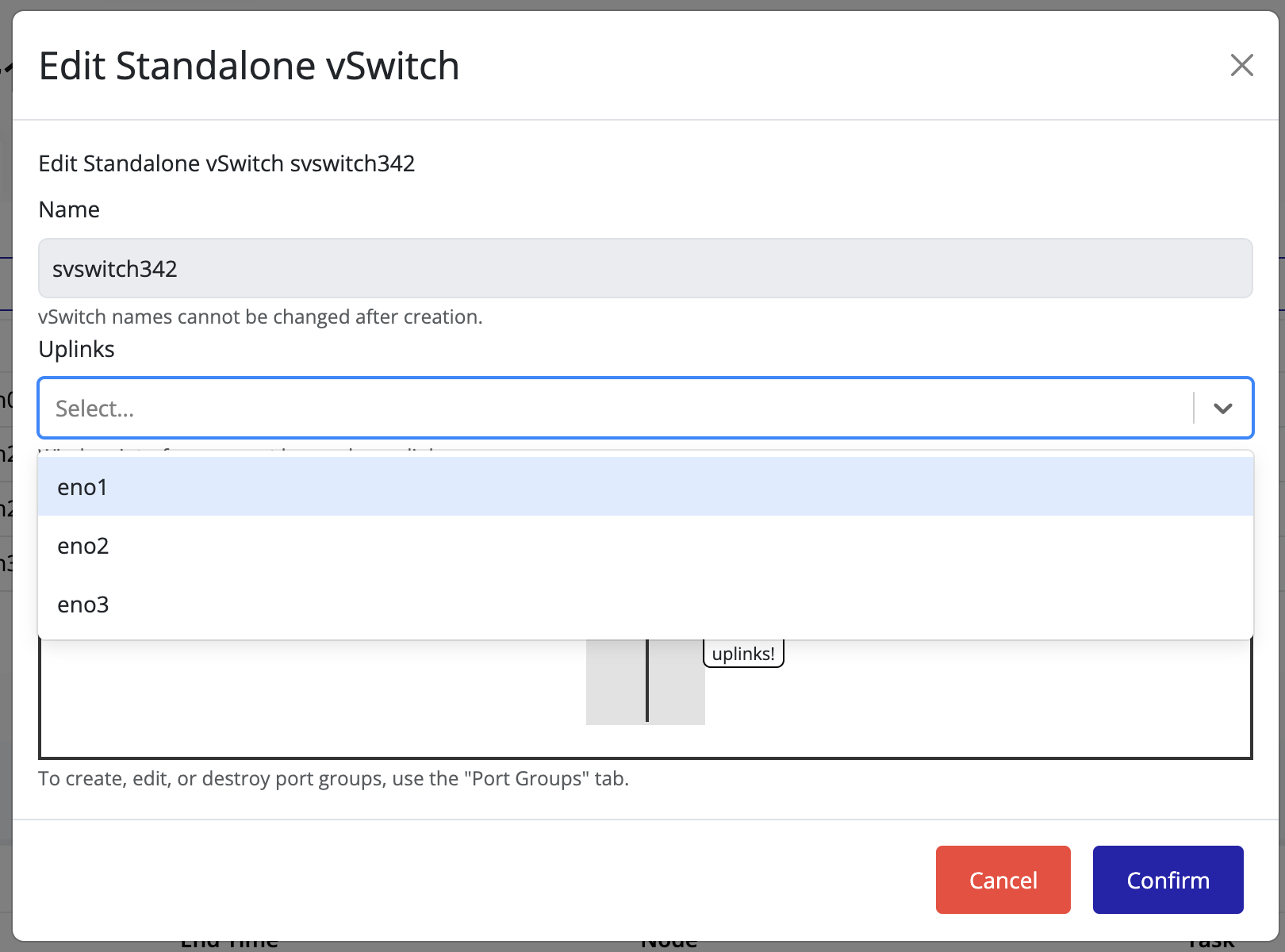

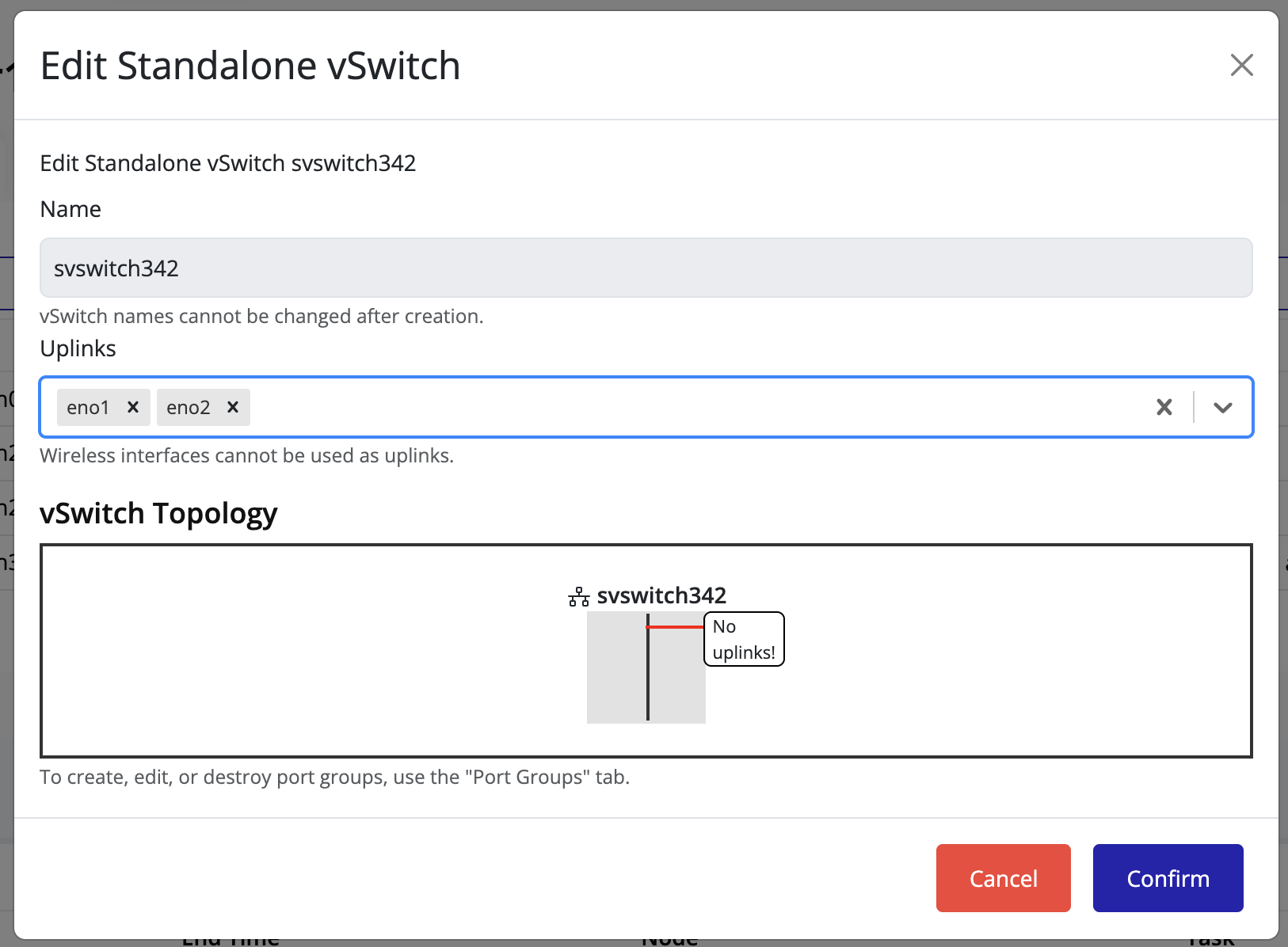

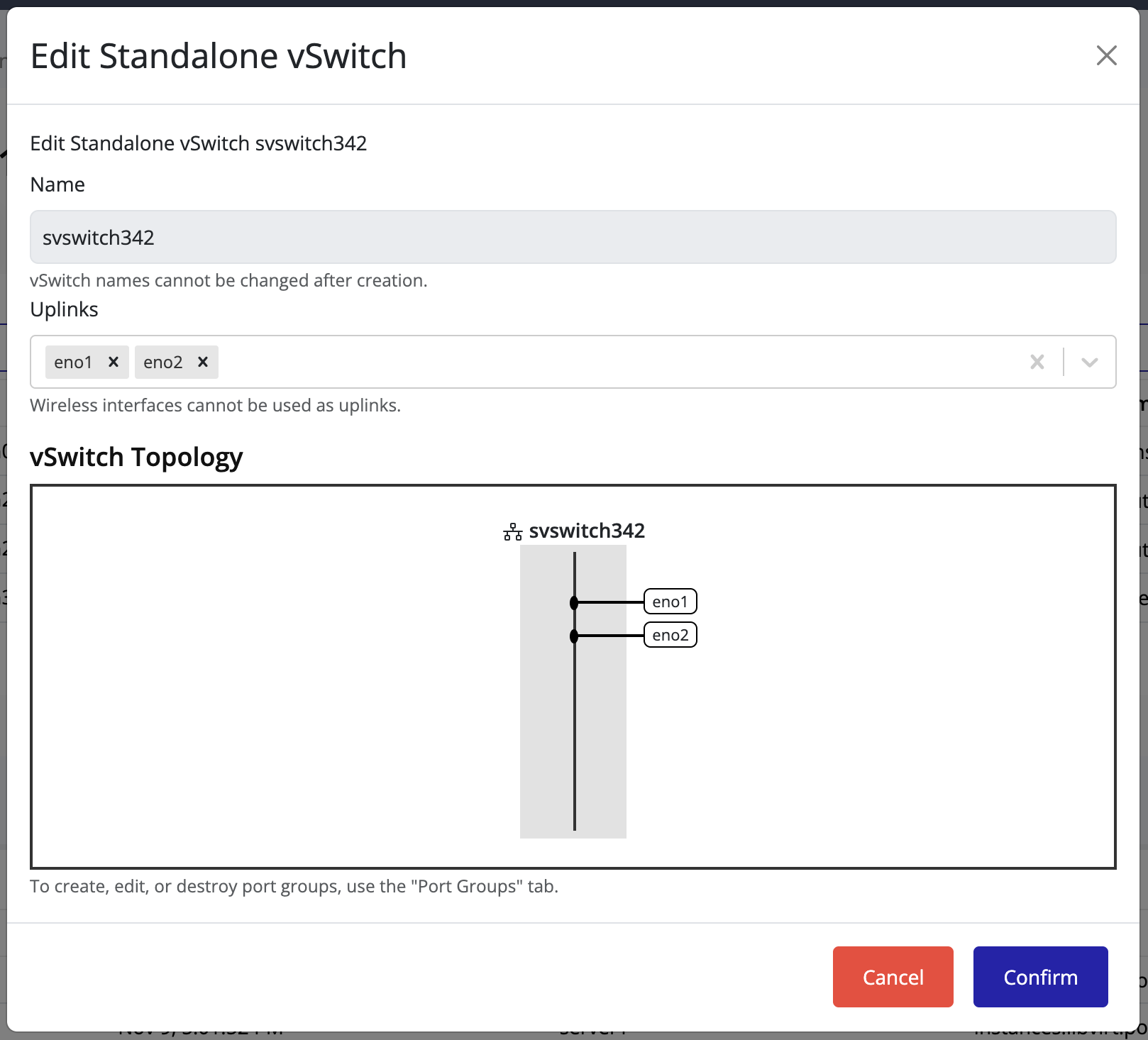

Editing a vSwitch to Add Uplinks

You can add one or more uplinks to a vSwitch to connect it to physical network interfaces on a node. Adding multiple uplinks improves redundancy and fault tolerance, ensuring higher network availability.

Steps to Add Uplinks

-

Select the node in the left navigation panel.

-

Click the Network tab on the right.

-

Click on vSwitches.

-

Locate the vSwitch you want to modify and click the Edit icon.

-

From the Uplinks drop-down menu, select one or more network interfaces to attach as uplinks.

You can select multiple interfaces to enable redundancy.

Tip

The image preview may not immediately reflect uplink changes.

-

Click Confirm to apply the changes.

-

The Uplink Count column for the vSwitch updates to reflect the new configuration.

-

To verify or visualize the connected uplinks, click the Edit icon again.

The connected uplinks are listed in the vSwitch details view.

Tip

Adding multiple uplinks provides network redundancy.

If one uplink fails, the remaining uplinks continue handling network traffic seamlessly.

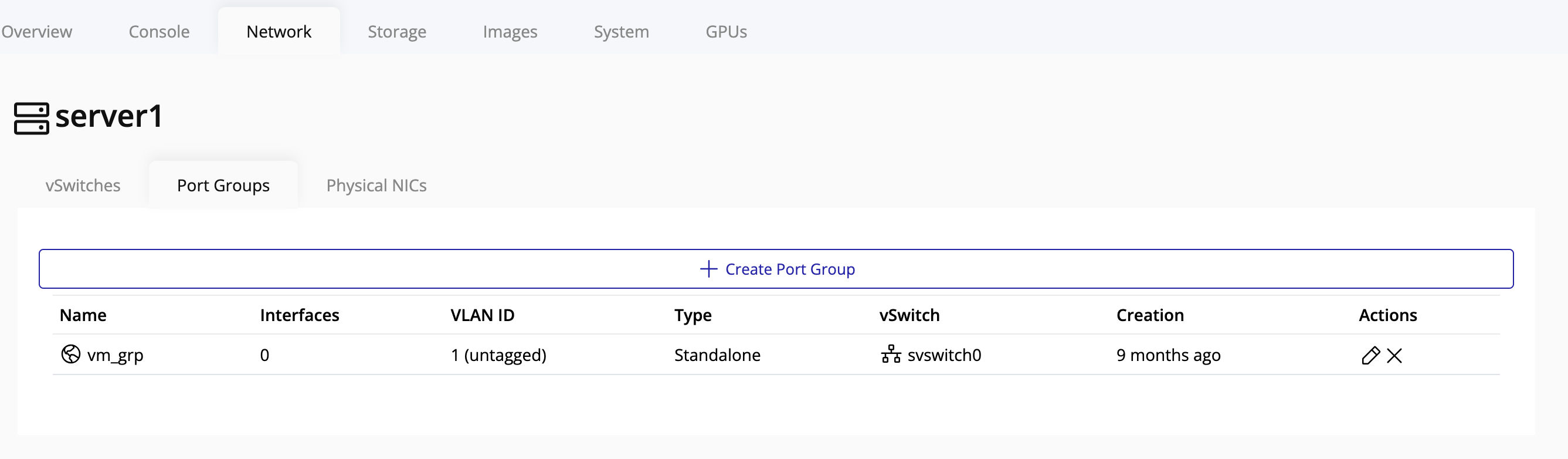

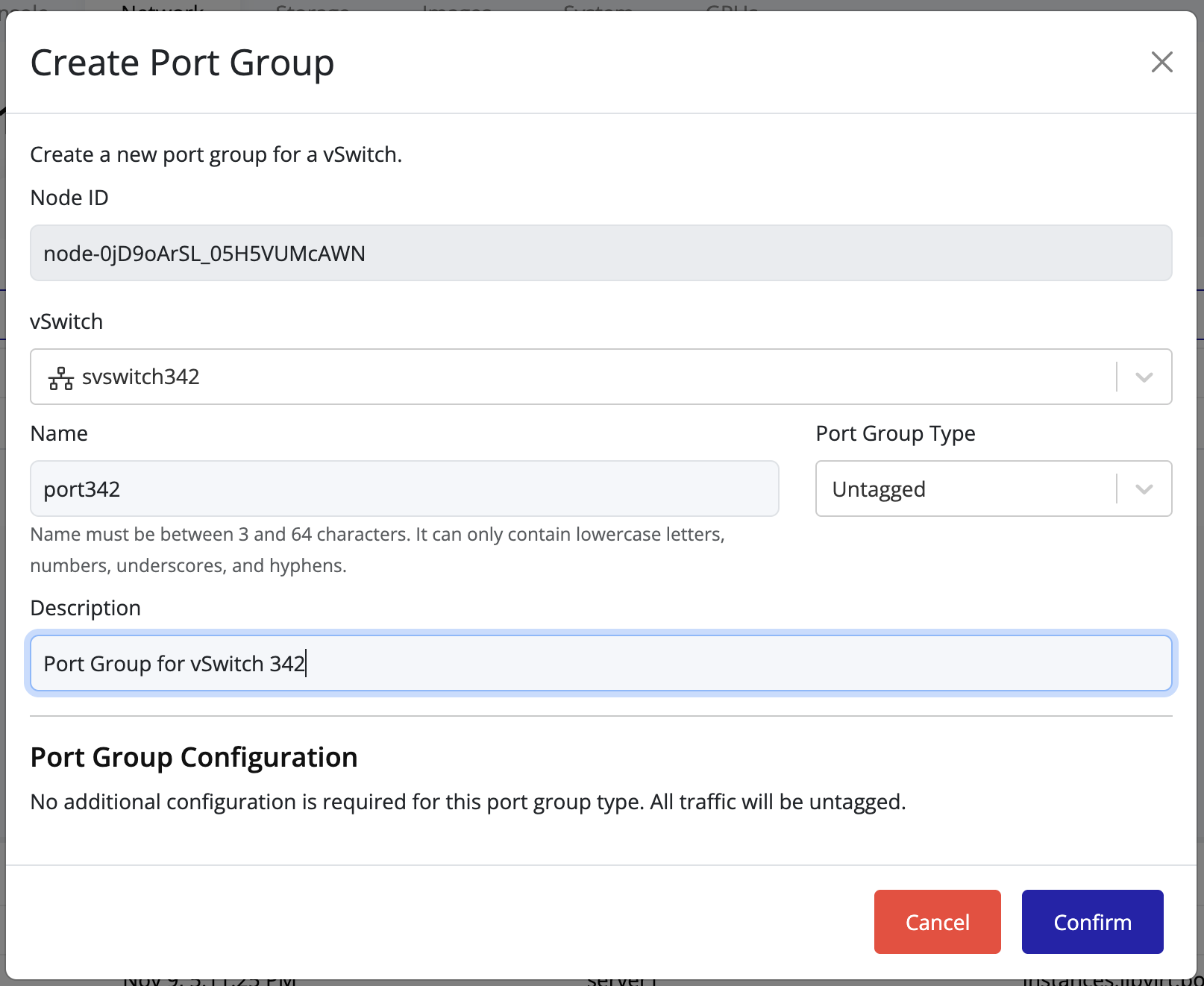

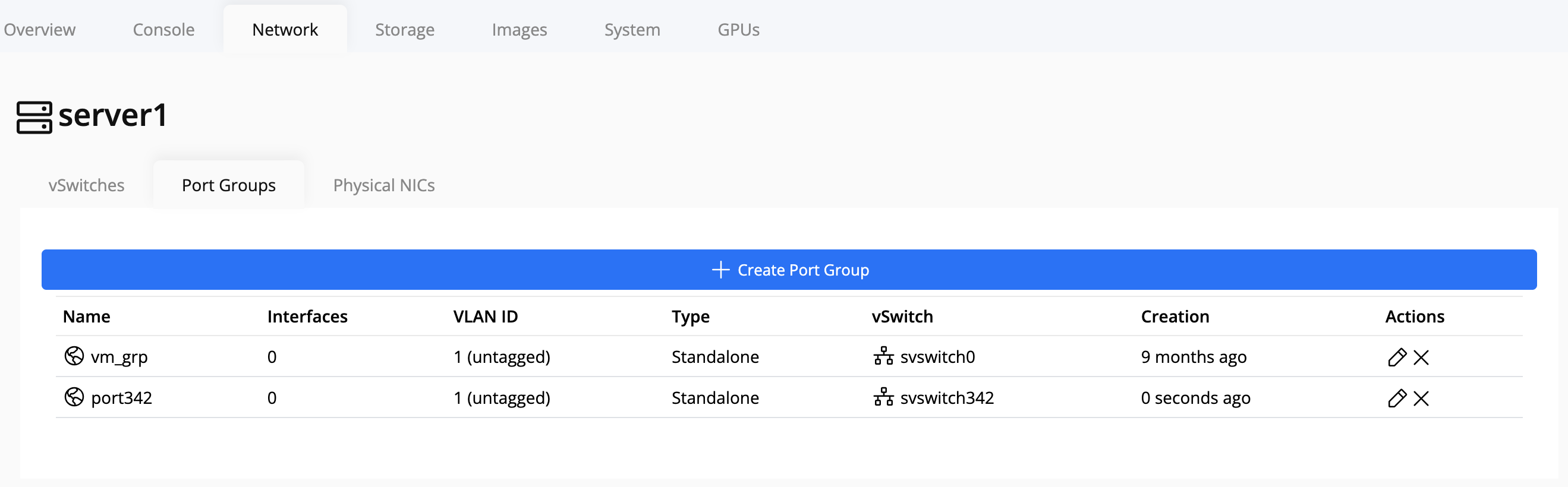

Create Port Group

Creating and Managing Port Groups

Port groups define how virtual machines connect to a vSwitch and access the physical network.

Each port group is associated with a specific vSwitch and can include custom network configurations.

Creating a Port Group

-

Select the node in the left navigation panel.

-

Click the Network tab on the right.

-

Open the Ports tab to view existing port groups.

-

Click the Create Port Group button at the top of the page.

-

In the creation form:

- Select the vSwitch to associate the new port group with.

- Fill in the remaining configuration fields as needed (for example: VLAN ID, description, or security settings).

- Click Confirm to save.

Tip

When managing multiple networks, naming port groups clearly (e.g., Production, Backup, DMZ) makes maintenance and troubleshooting much easier.

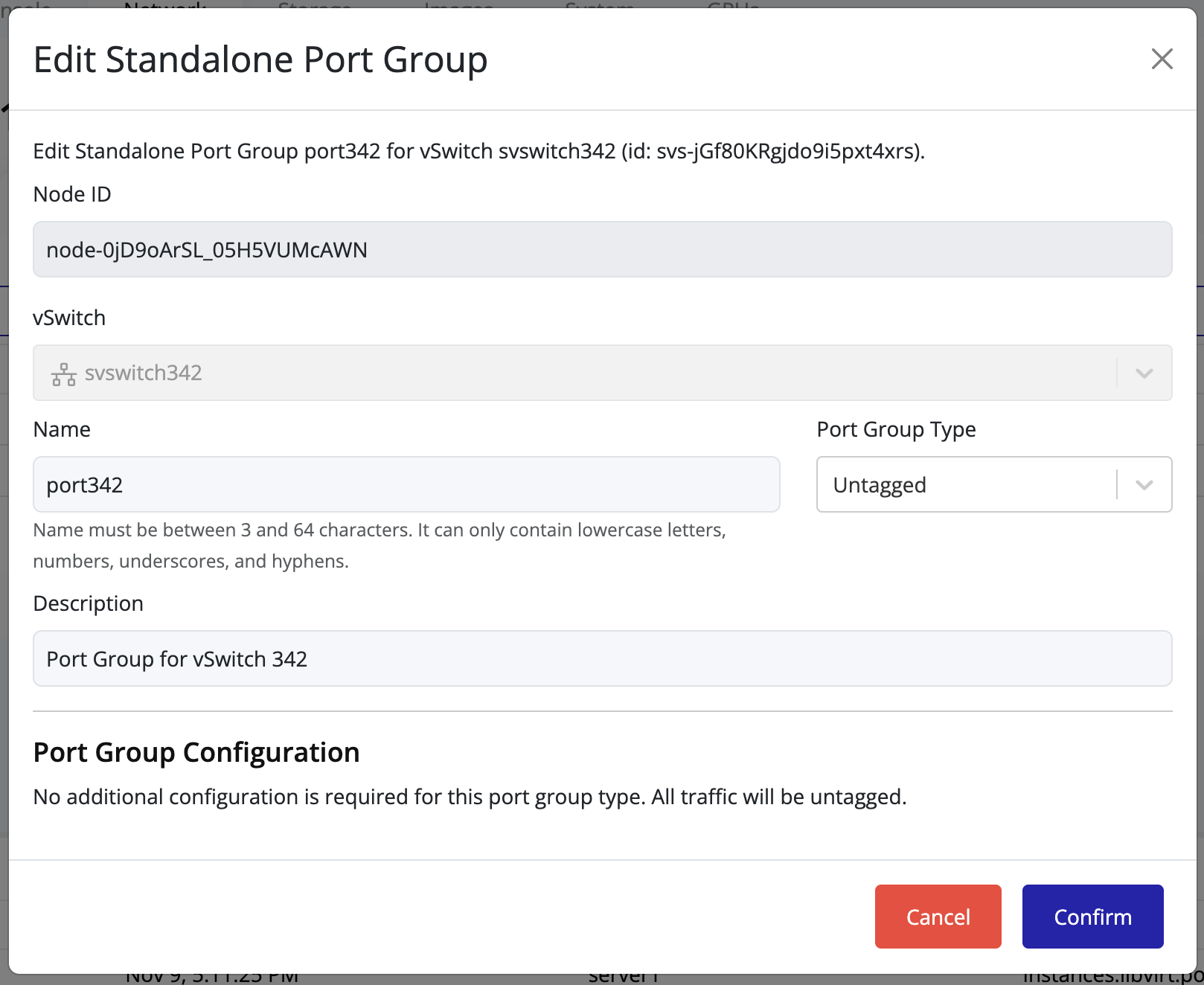

Edit Port Group

Editing a Port Group

To modify an existing port group:

- Locate the desired port group in the list.

- Click the Edit icon in the same row.

- Adjust the configuration fields as needed.

- Click Confirm to apply the changes.

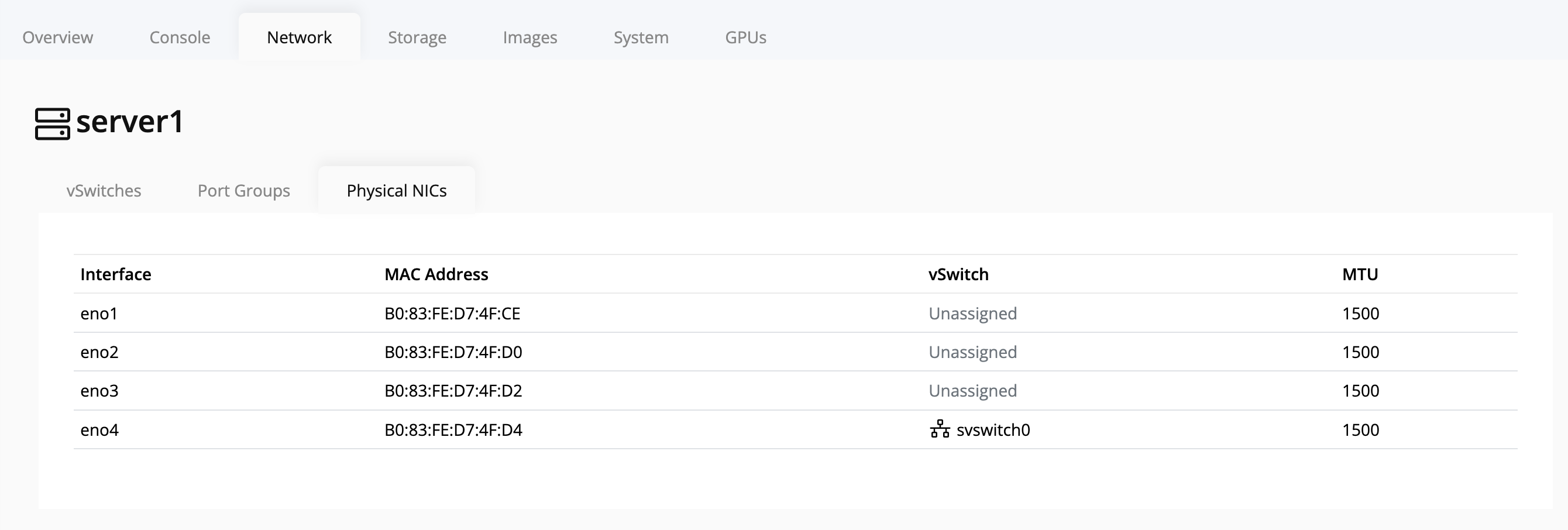

Viewing Available NICs on a Node

To view the network interface cards (NICs) available on a node:

-

In the left navigation panel, click on the node you want to inspect.

-

Select the Network tab on the node’s details page.

-

Click on NICs.

-

The page will display all available NICs for the selected node.

Storage Management

This section provides a guide to managing storage within your deployment. The Pextra CloudEnvironment® storage engine supports a variety of storage technologies, from local disks to distributed storage systems.

Storage Pools

Storage pools are software-defined storage resources. Storage pools are configured on clusters and propagated to nodes. One configuration can be used across multiple nodes, allowing for flexible storage management.

The ID prefix for storage pools is pool-1.

Storage Pool Types

The following storage pool configurations are supported in Pextra CloudEnvironment®:

| Pool Type | Volume Backing2 | Networked | Description | Notes |

|---|---|---|---|---|

Directory (directory) | File | ❌ | Uses a directory on the node. | The default local storage pool is a directory pool. |

NetFS (netfs) | File | ✅ | Mounts a network filesystem (NFS or CIFS/SMB) on the node as a storage pool. Similar to a directory pool, but allows for networked storage. | Target path must not conflict with other netfs or directory pools. |

iSCSI (iscsi) | Block | ✅ | Uses an iSCSI target. | N/A |

Ceph RBD (rbd) | Block | ✅ | Uses a Ceph RBD pool. | N/A |

ZFS (zfs) | Block | ❌ | Uses a ZFS pool. | A ZFS pool with the same name as the storage pool must exist on each enabled node. |

LVM (lvm) | Block | ❌ | Uses an LVM volume group. | An LVM volume group with the same name as the storage pool must exist on each enabled node. |

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Storage pool IDs will have the prefix

pool-, followed by a unique identifier (e.g.,pool-qthm_iLrflJ_DtSS1l4Gx). ↩ -

A storage pool can back volumes using either file-based or block-based storage. File-based storage pools use files to store data, while block-based storage pools use raw data blocks. Block-based storage pools typically provide better performance, while file-based storage pools are easier to manage. ↩

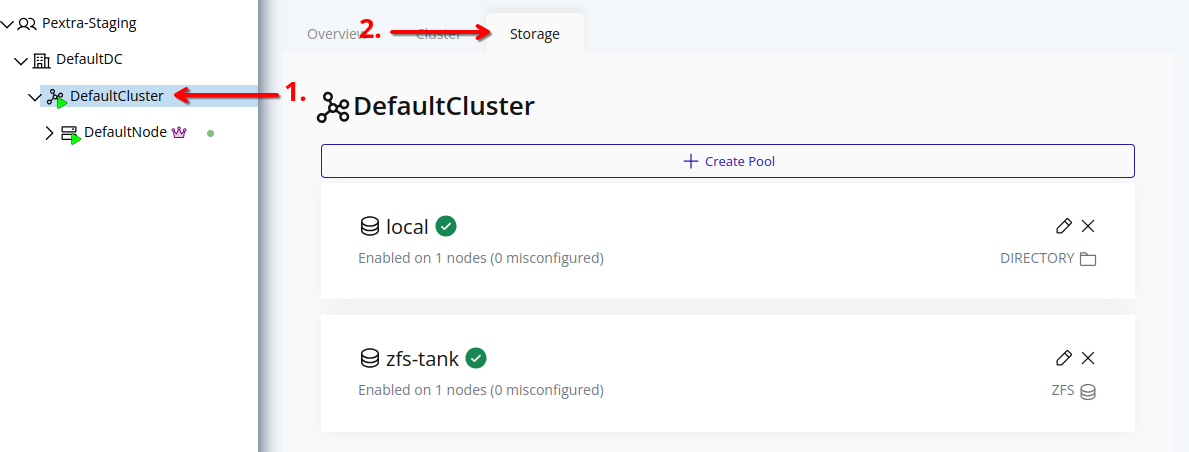

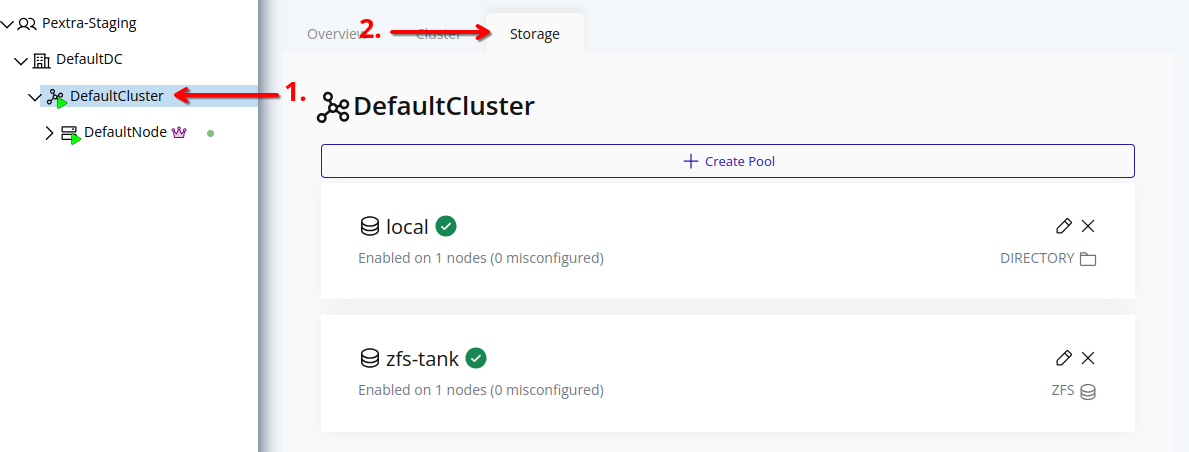

List Storage Pools

Storage pools can be listed to view the current storage configuration in the cluster. This includes details about the storage pools, their status, and the nodes they are associated with.

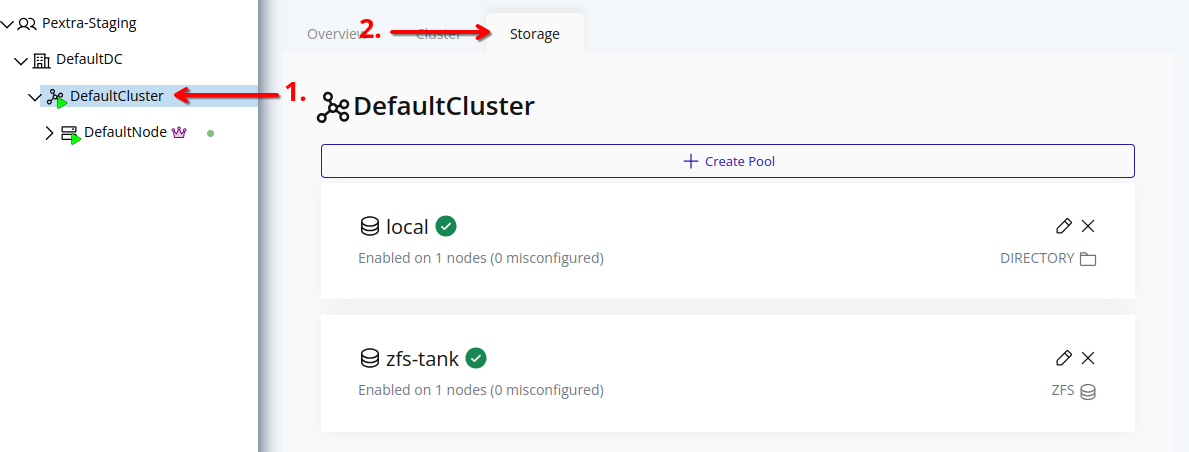

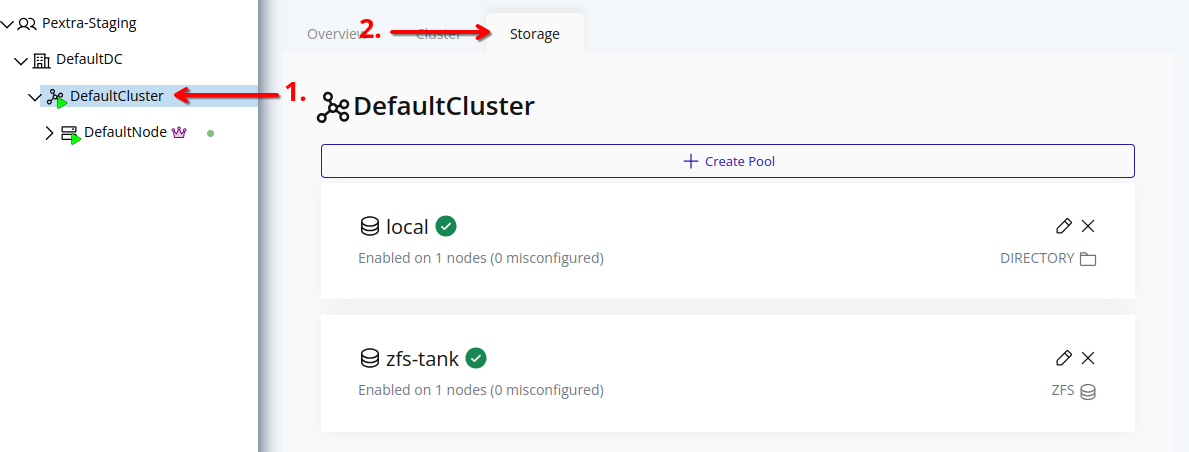

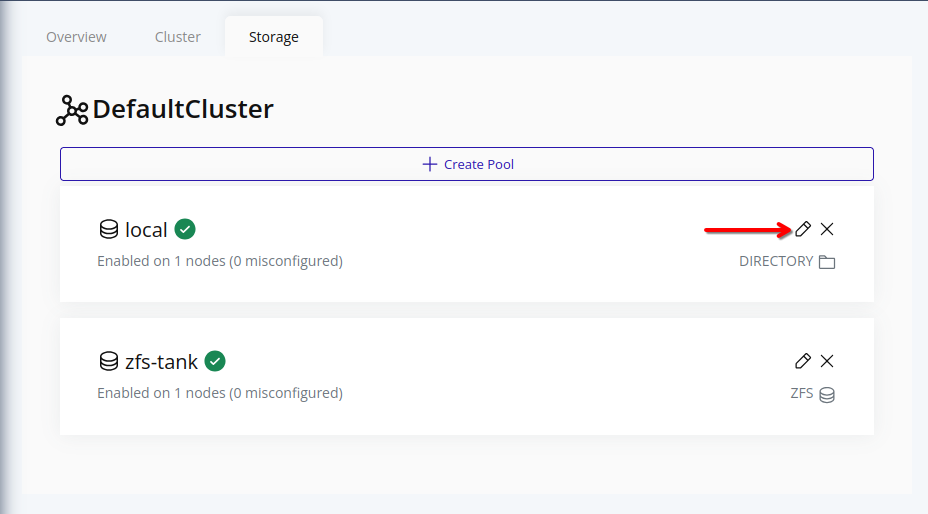

Web Interface

- Select the cluster in the resource tree and view the page on the right. Click on the Storage tab in the right pane. The storage pools will be listed:

To edit associated nodes of a storage pool, refer to the Edit Storage Pool section.

Storage Pool Status

Each storage pool has a status indicator that provides information about its availability and configuration across the nodes in the cluster. The status can be one of the following:

The storage pool has not been enabled on any nodes.

The storage pool is available on all enabled nodes.

An error has occurred while propagating the storage pool configuration to enabled nodes. Manual intervention may be required to resolve the issue.

Create Storage Pool

Web Interface

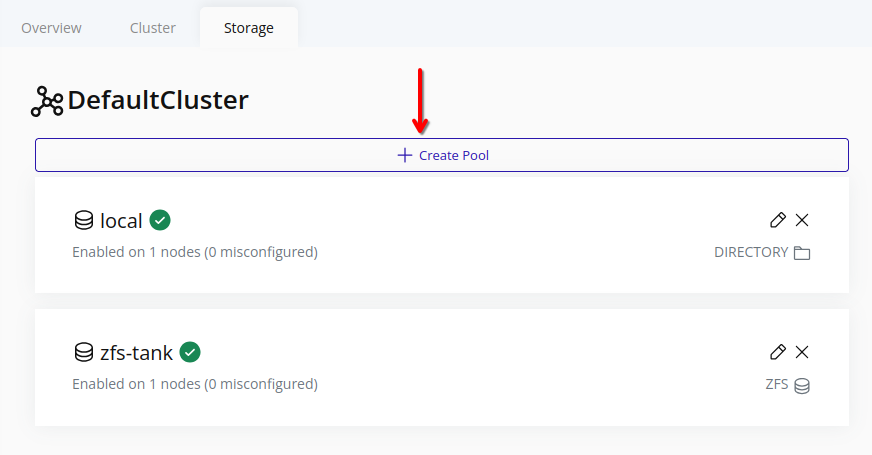

-

Select the cluster in the resource tree and view the page on the right. Click on the Storage tab in the right pane.

-

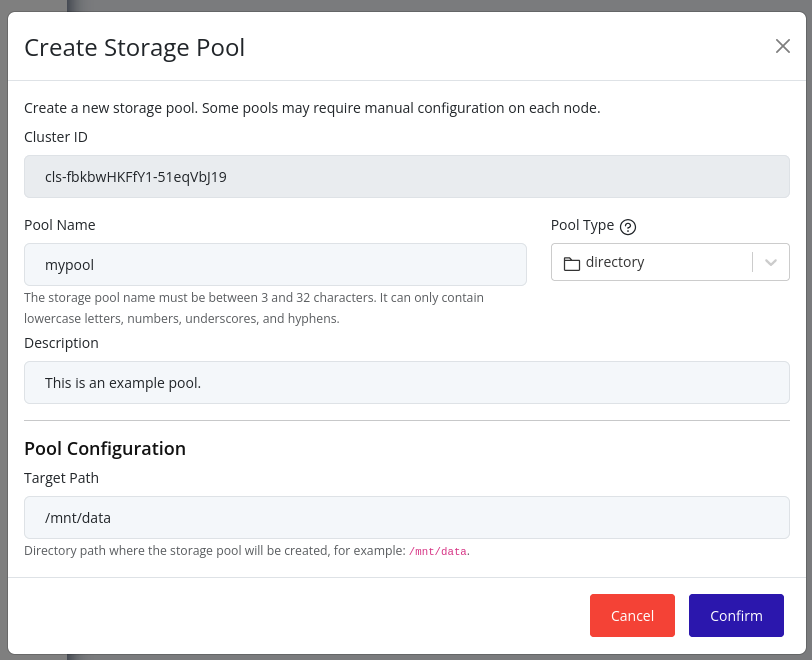

Click the Create Pool button.

-

Choose the storage pool type, enter a name, and enter the required configuration metadata.

-

Click Create to create the storage pool. Initially, the new storage pool will not be enabled on any nodes. To enable the new storage pool on nodes, refer to the Edit Storage Pool section.

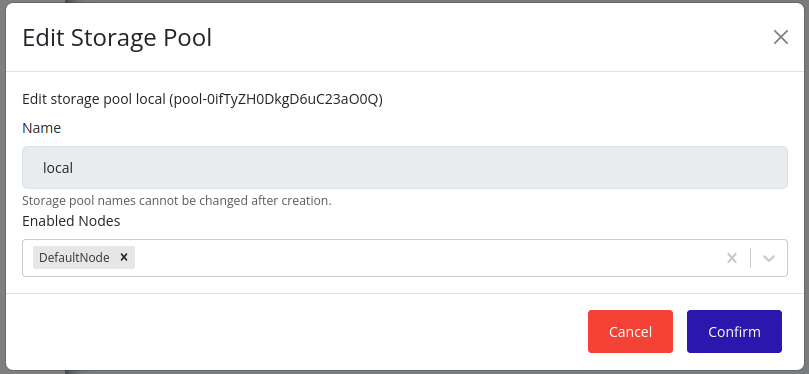

Edit Storage Pool

Currently, only node associations with storage pools can be modified. The name of a storage pool cannot be changed after creation.

Web Interface

-

Select the cluster in the resource tree and view the page on the right. Click on the Storage tab in the right pane.

-

Click on the pencil icon in the card of the storage pool you want to edit.

-

In the edit form, you can select the nodes on which this storage pool should be enabled. The nodes that are already associated with this storage pool will be selected by default.

-

Click Save to apply the changes. The storage pool will be enabled on the selected nodes, and the changes will be propagated according to the storage pool propagation algorithm. This may take some time.

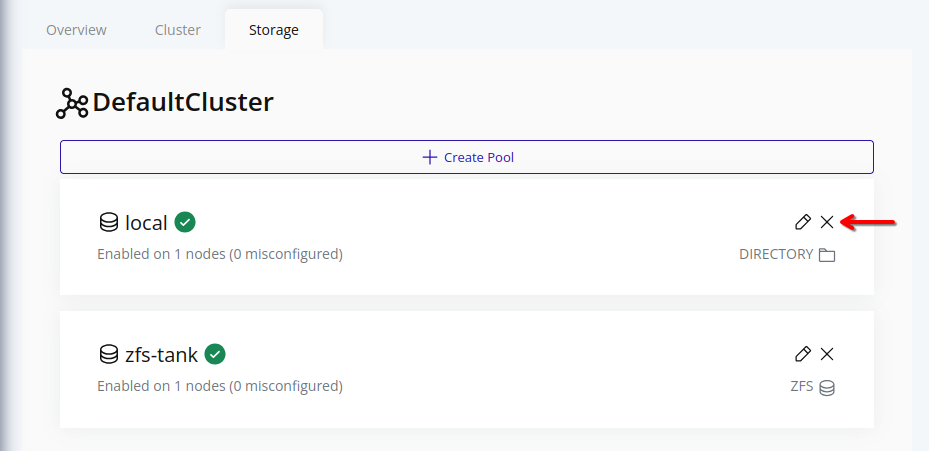

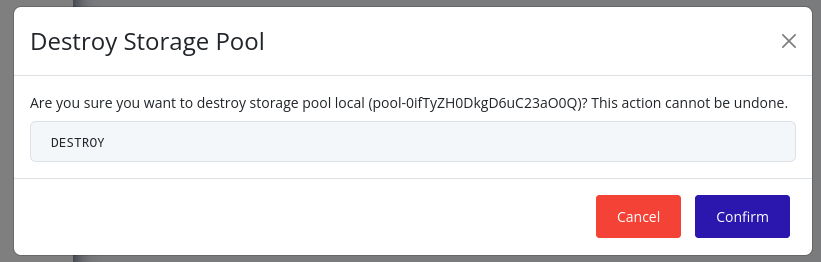

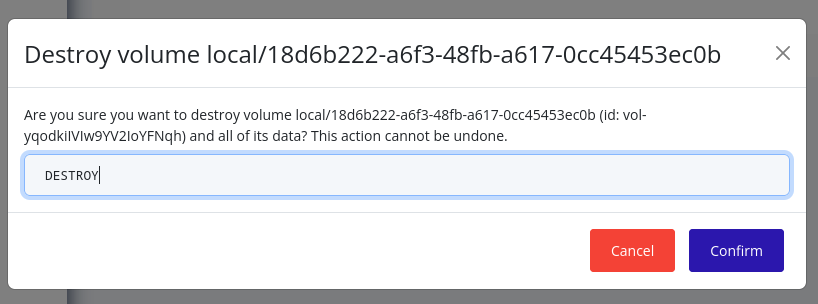

Destroy Storage Pool

Note

Storage pools cannot be destroyed if there are volumes on enabled nodes. All volumes must be destroyed, or all enabled nodes with volumes must have their associations removed. This limitation will be addressed in the future.

Web Interface

-

Select the cluster in the resource tree and view the page on the right. Click on the Storage tab in the right pane.

-

Click on the X icon in the card of the storage pool you want to destroy.

-

A confirmation dialog will appear. Type in “DESTROY” and click Confirm to confirm the destruction of the storage pool.

-

The storage pool will be marked for destruction, and will be cleaned up according to the storage pool propagation algorithm. This may take some time. During this time, the storage pool’s name will be unavailable for reuse.

Storage Pool Propagation

Storage pools are propagated across the cluster at regular intervals by a system job, ensuring that all nodes have the latest configuration and state. This propagation process is crucial for maintaining consistency and availability of storage resources.

Note

Creating a storage pool with the same name as a storage pool that is marked for deletion is not allowed. If you need to reuse the name, you must wait for the storage pool to be fully cleaned up.

Propagation Algorithm

The propagation algorithm is illustrated in the following diagram:

View sourceStorage Volumes

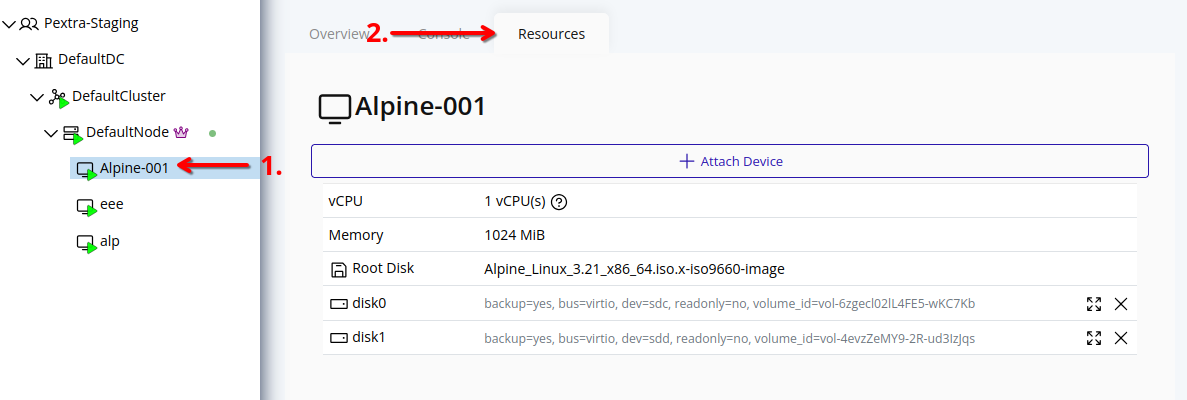

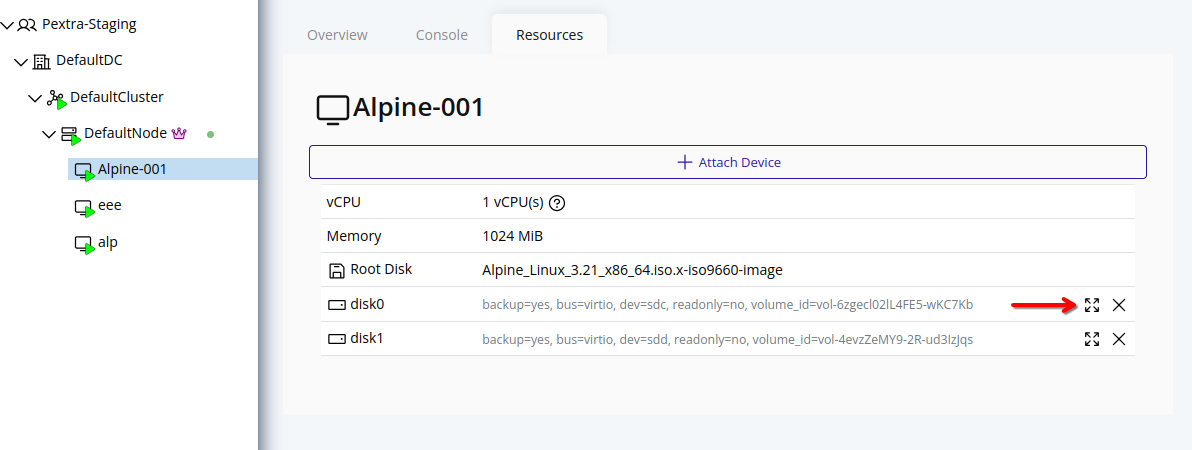

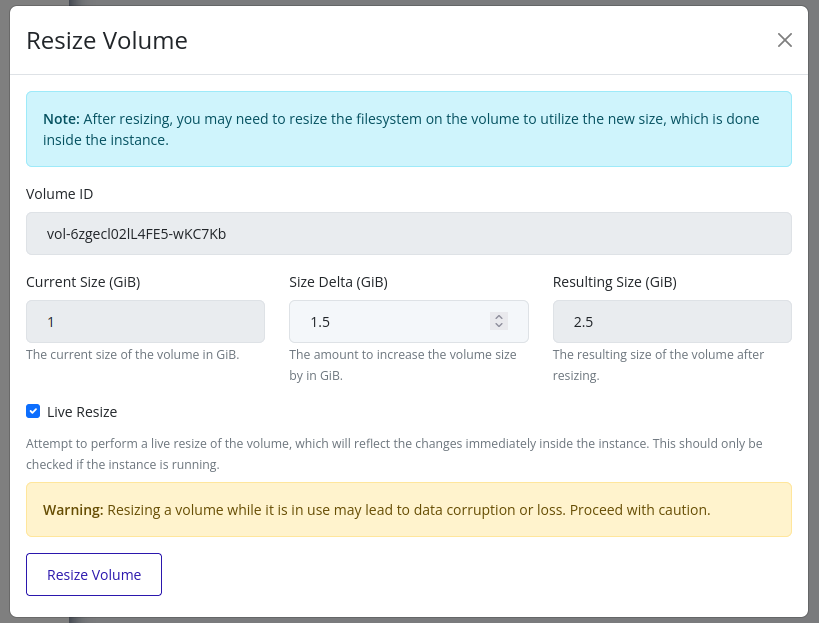

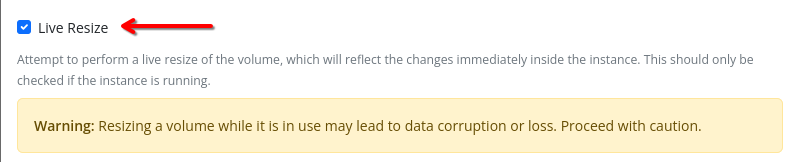

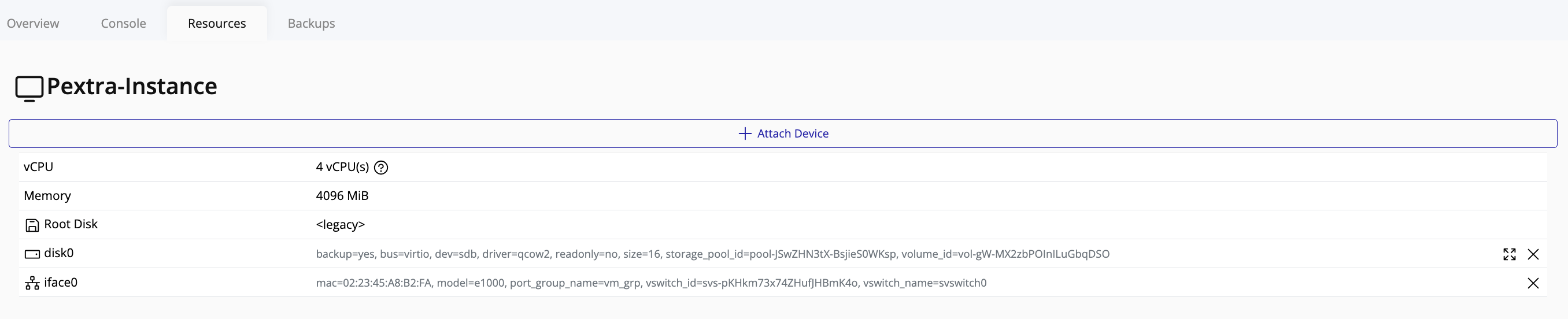

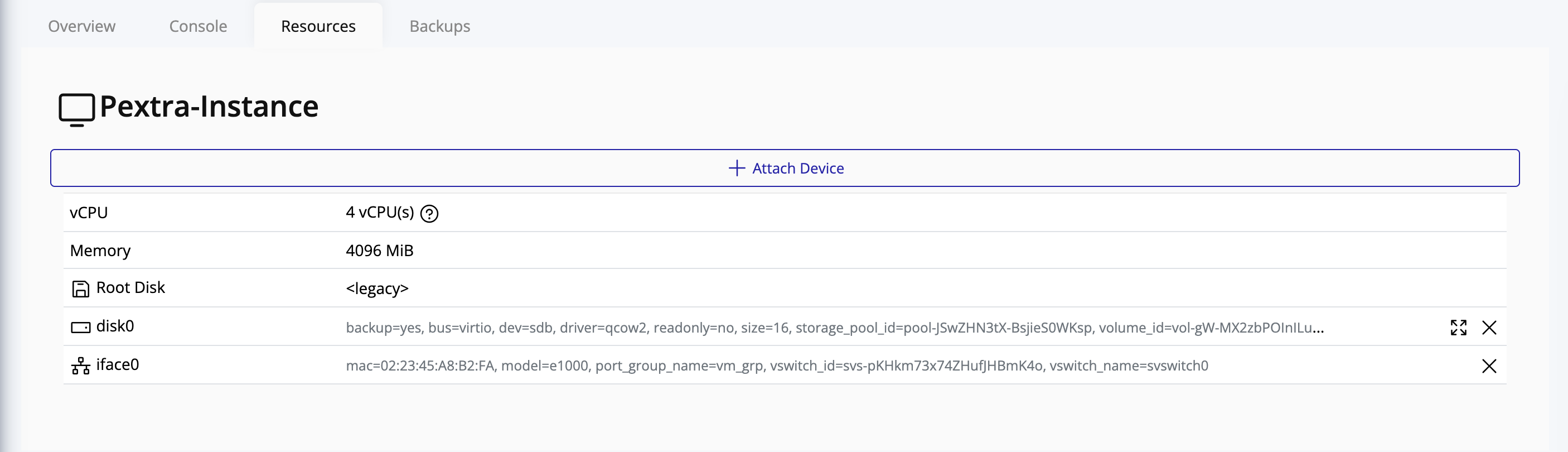

Storage volumes (or just “volumes”) are virtual storage devices that can be attached to instances. Volumes are allocated from storage pools, stored on individual nodes, and are used to store instance disks, snapshots, and other data.

Volumes can be attached to instances to provide additional storage capacity. Volumes can be resized, detached, and destroyed as needed.

The ID prefix for volumes is vol-1.

Notes

-

Resources in Pextra CloudEnvironment® are identified by unique IDs. Storage volume IDs will have the prefix

vol-, followed by a unique identifier (e.g.,vol-qthm_iLrflJ_DtSS1l4Gx). ↩

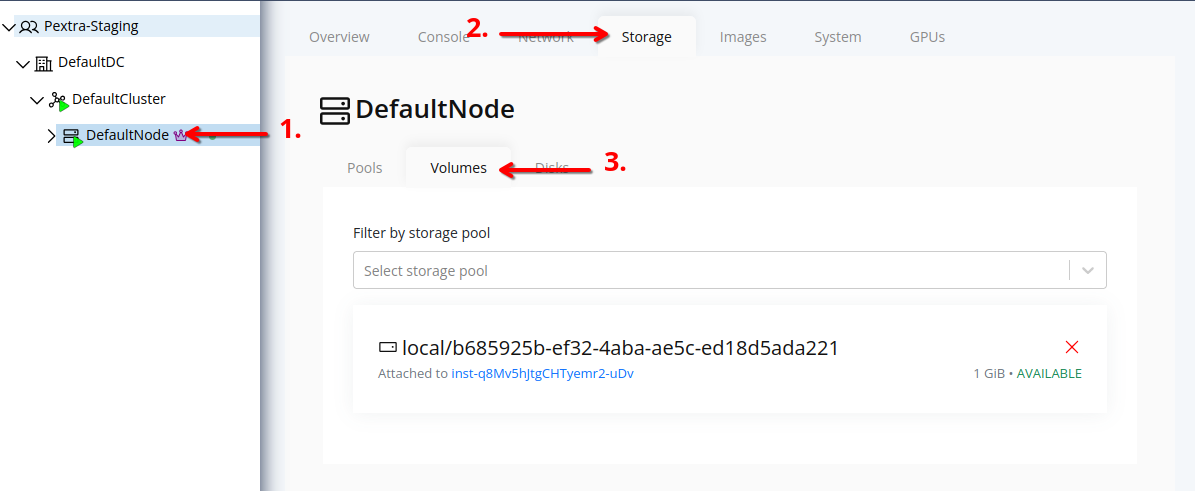

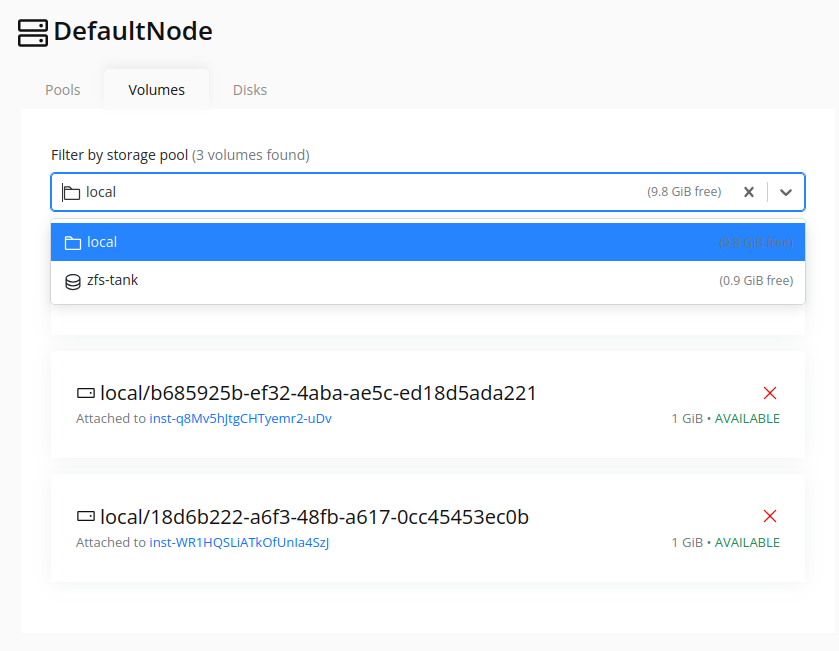

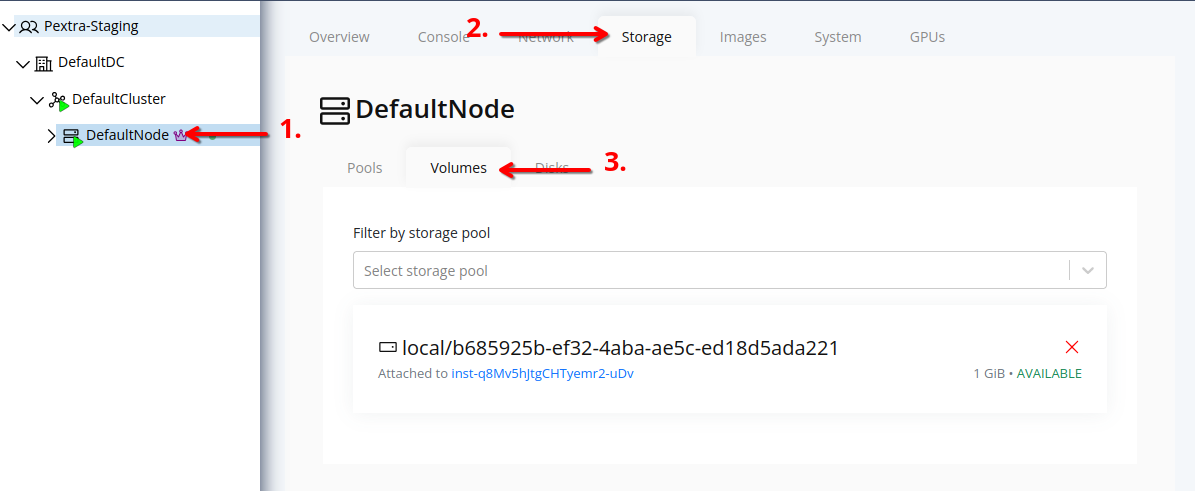

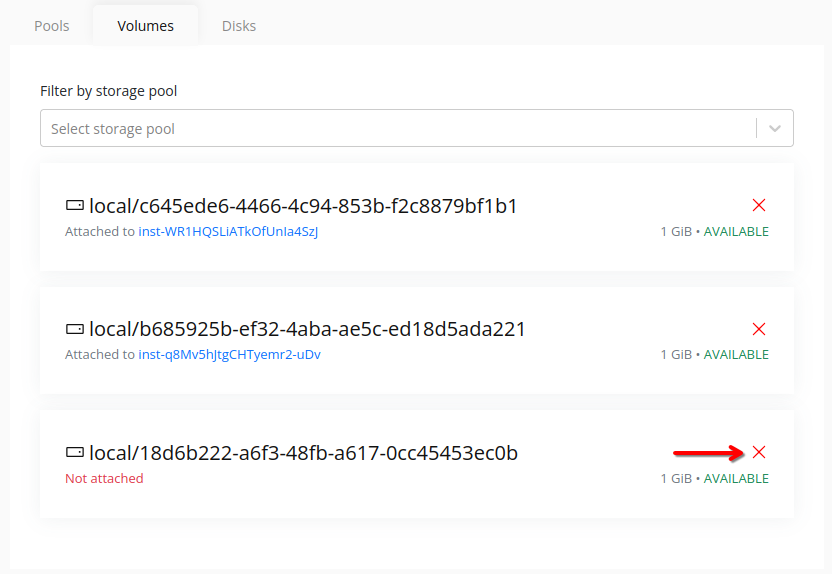

List Volumes

Web Interface

-

Select the node in the resource tree and view the page on the right. Click on the Storage tab in the right pane.

-

Click on the Volumes tab to view the list of volumes associated with the node.

-

The list displays all volumes associated with the node. To filter by storage pool, use the Storage Pool dropdown at the top of the list.

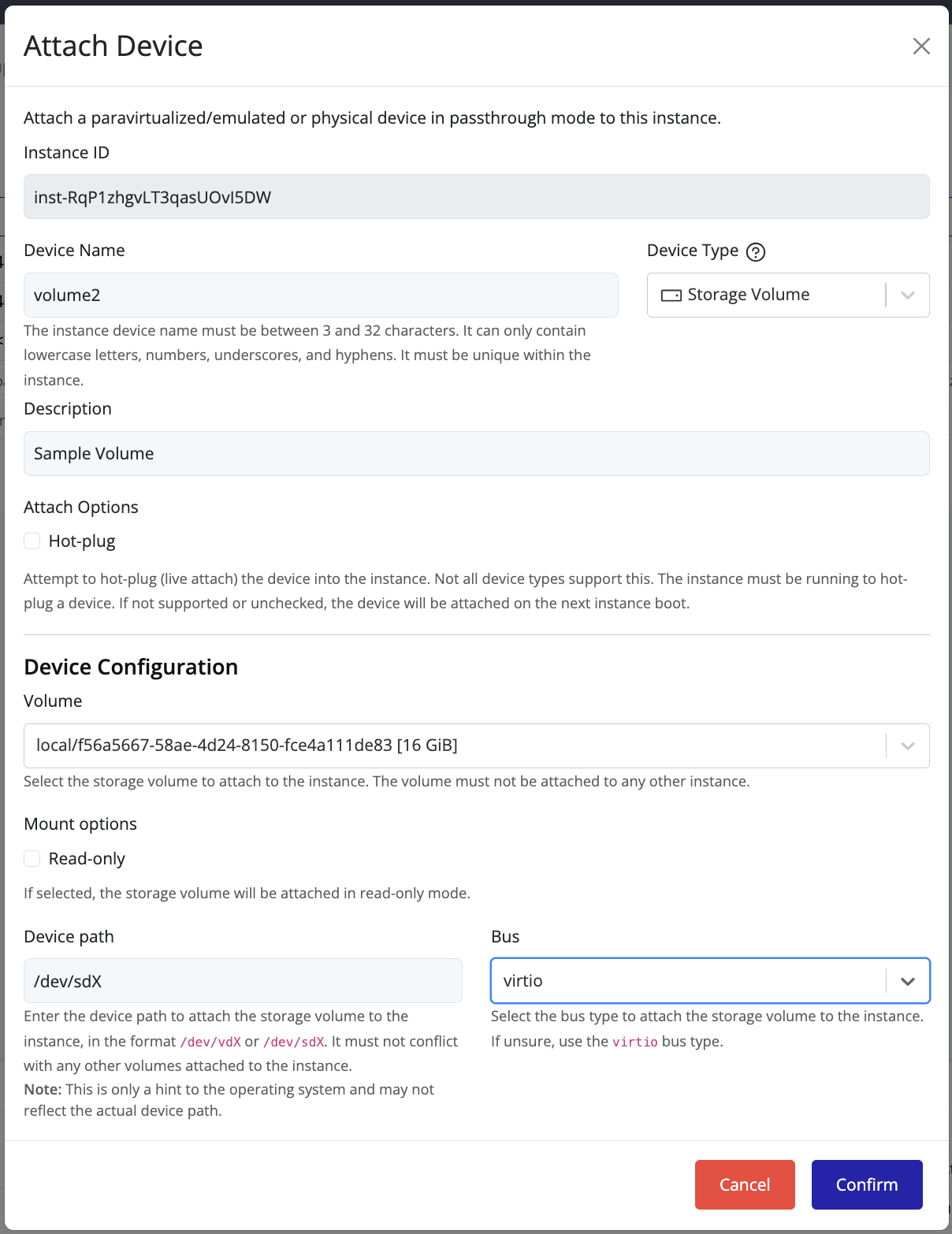

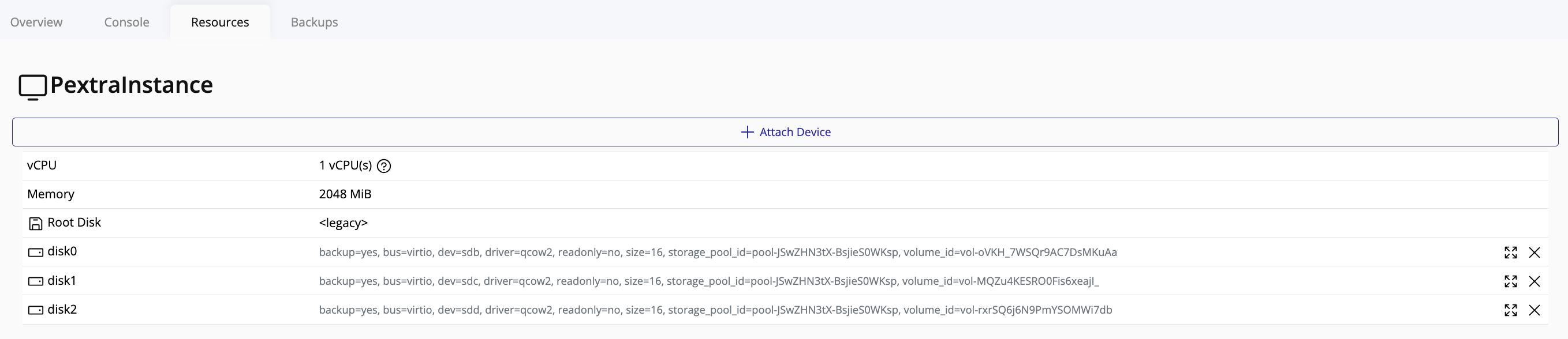

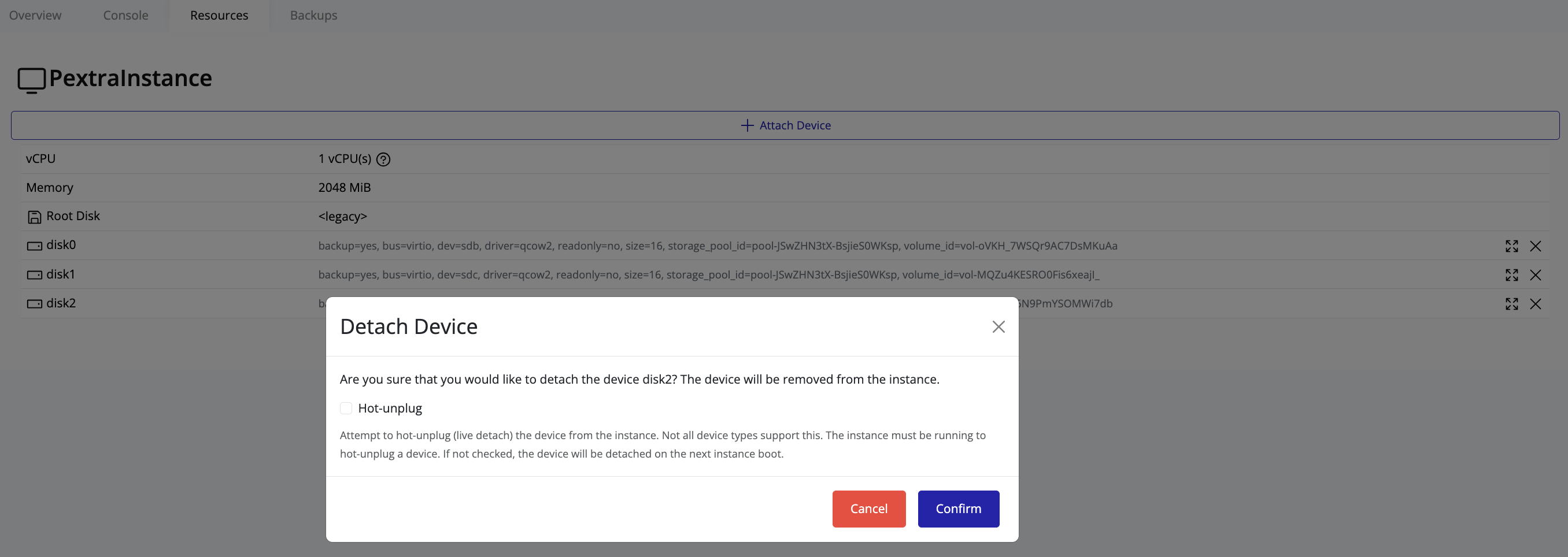

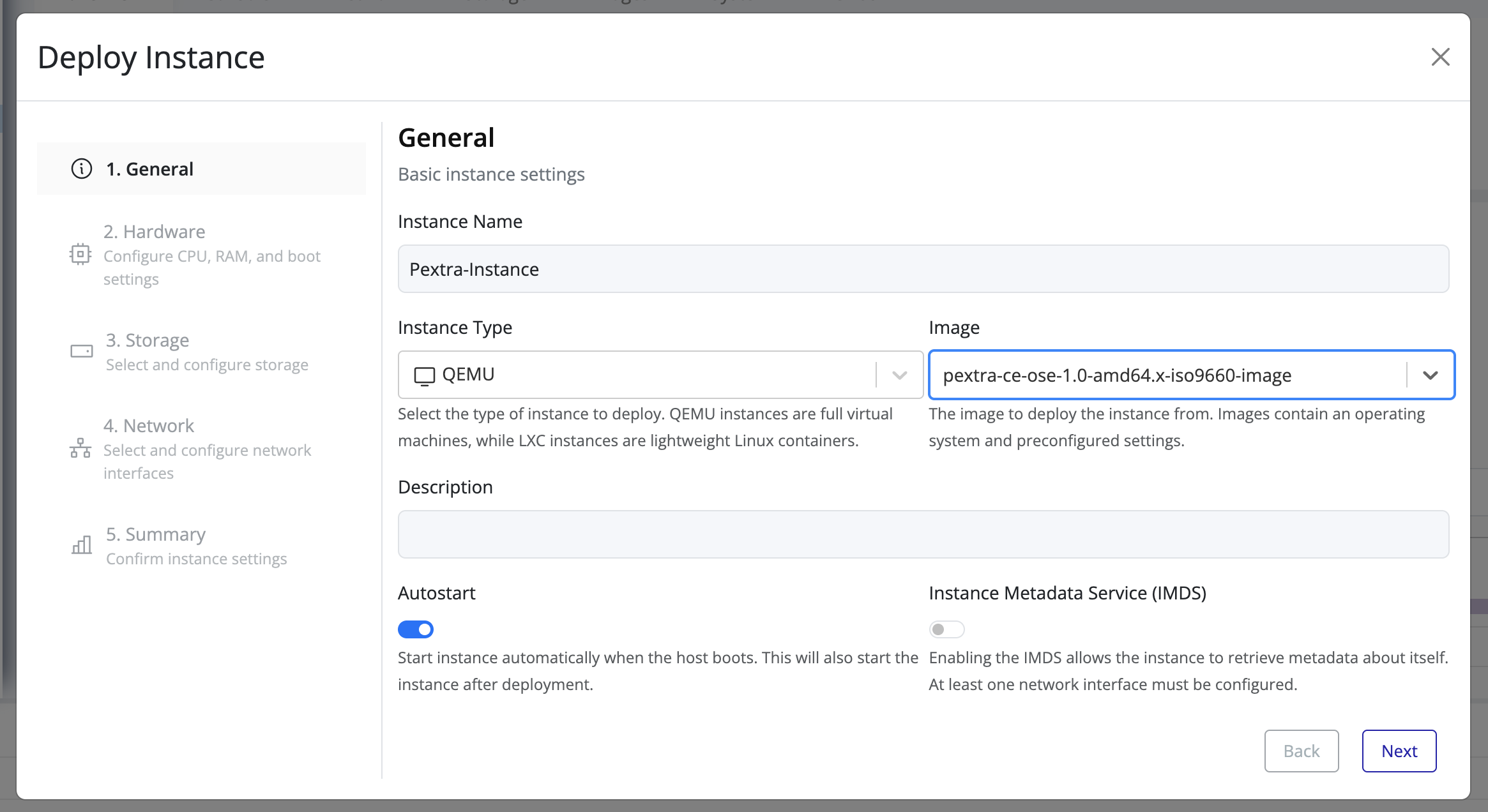

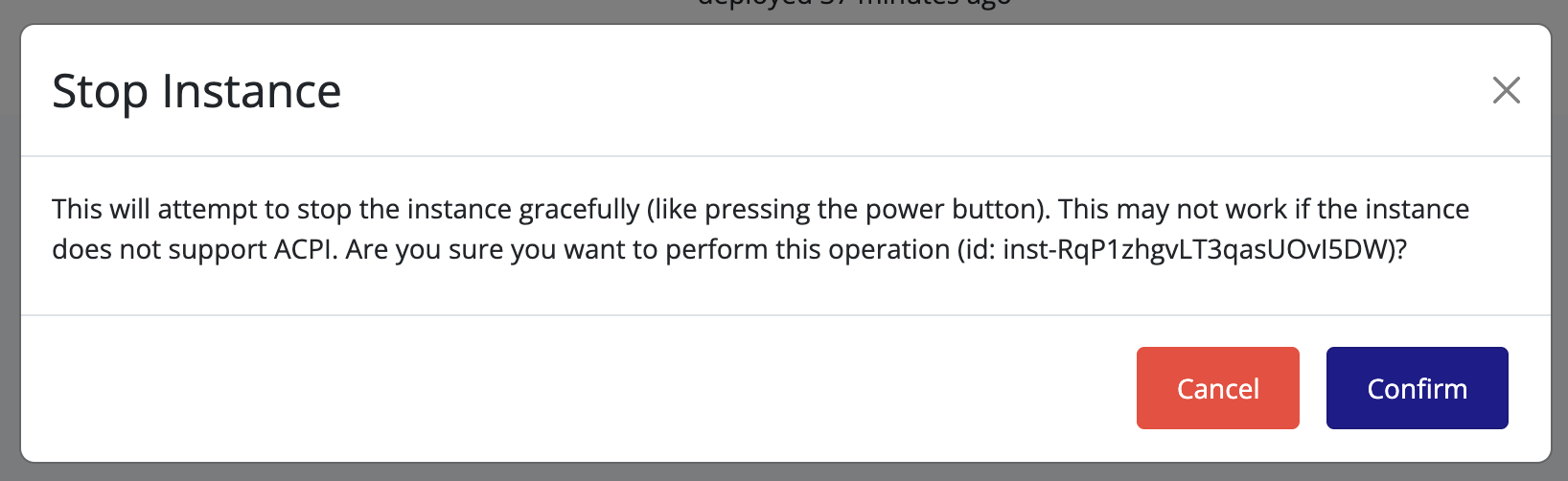

Create Volume

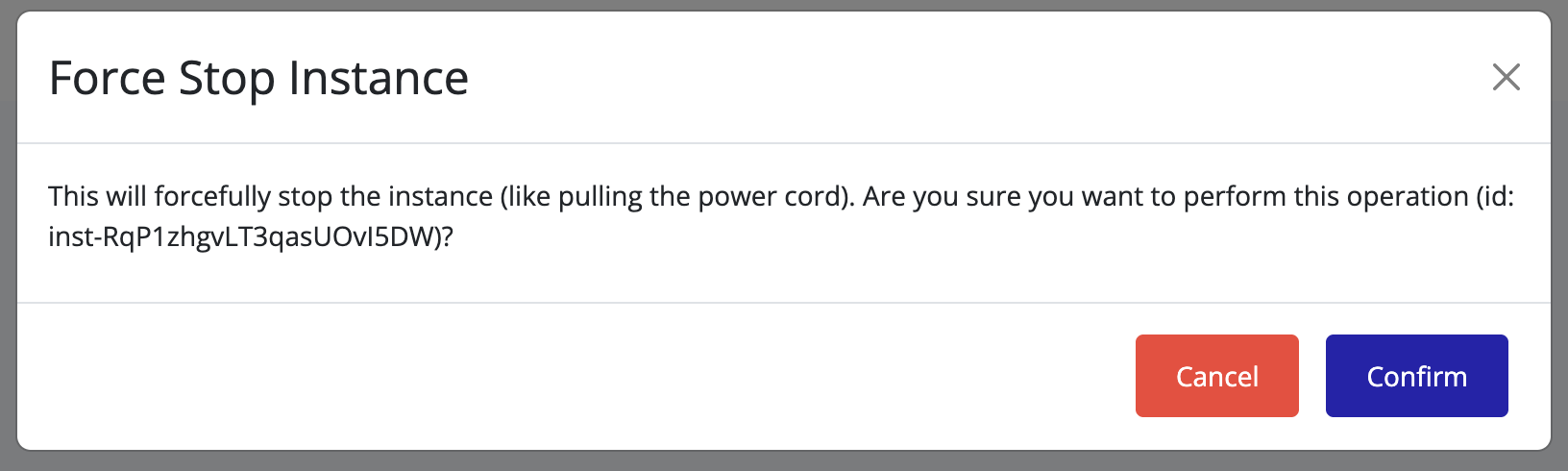

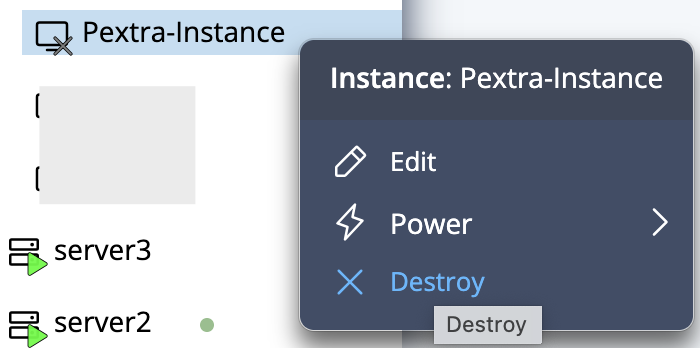

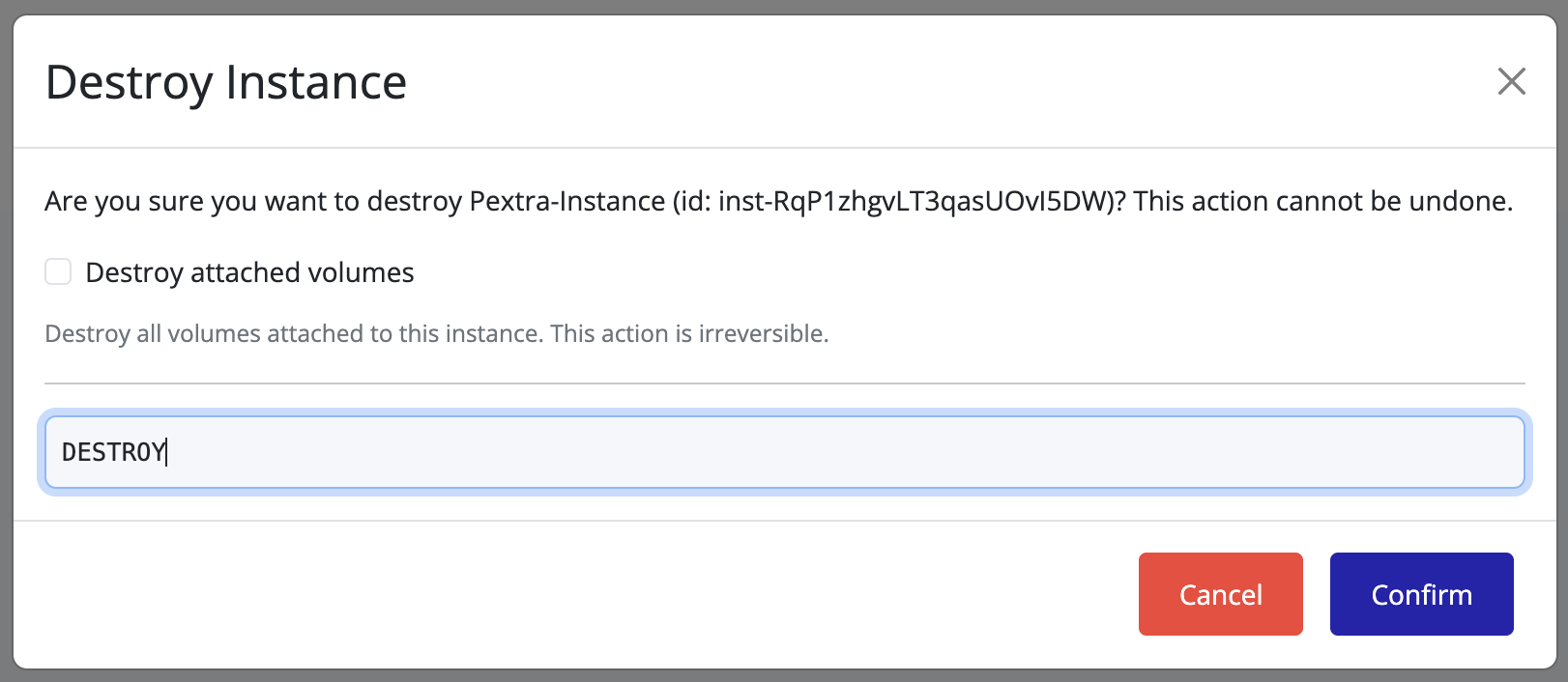

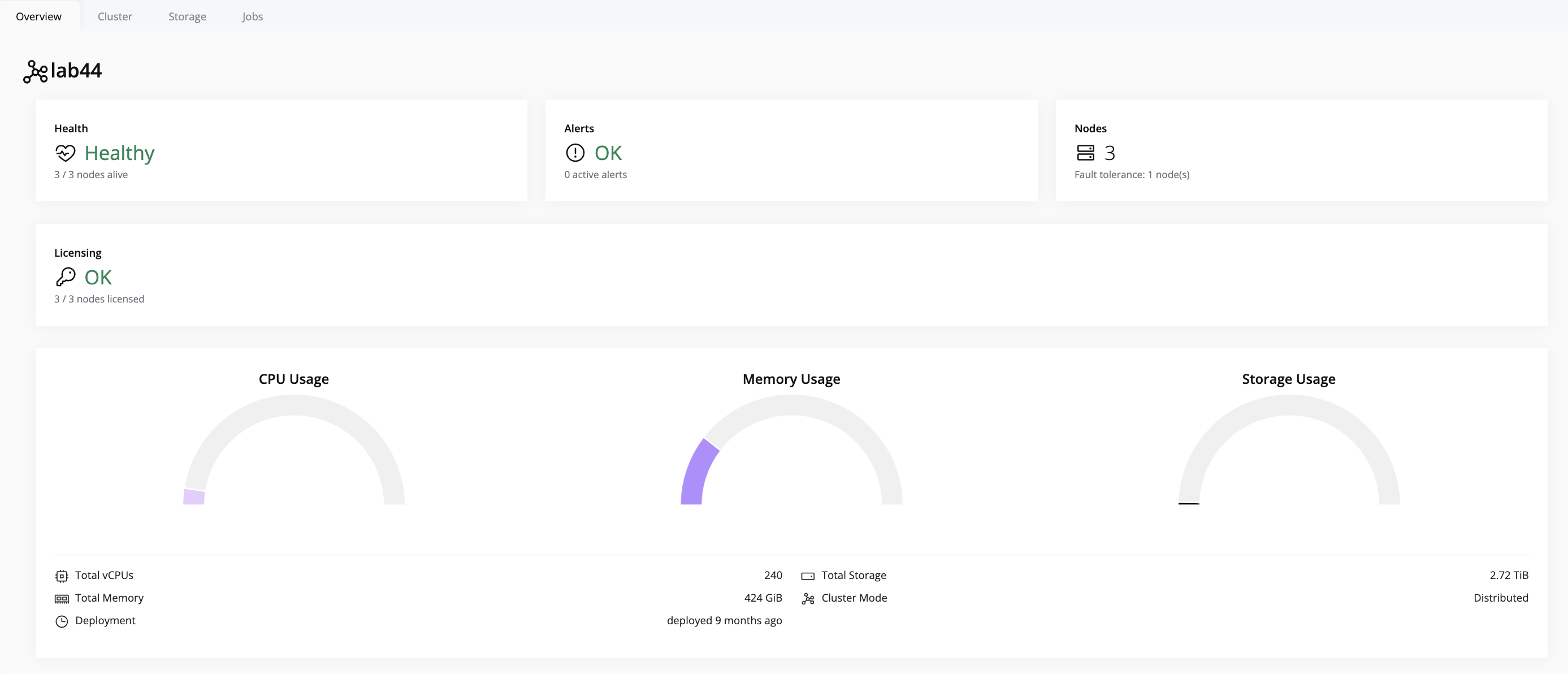

Web Interface